goingglobal-session-2-1225-thursday-elt-roger-hawkey-paper

- 1. 1 A Study of the impacts of IELTS, especially on candidates and teachers

Dr Roger Hawkey, Consultant in language testing to University of Cambridge ESOL Examinations

Introduction

University of Cambridge ESOL Examinations has been conducting a study of the impacts of the International English Language Testing System (IELTS) test. The study explores the effects of the test on candidates, on preparation courses and on receiving institutions. This paper describes the structure and methodology of the IELTS impact study and presents selected findings relevant to the context of the British Council Going Global International Education conference, Edinburgh, with a particular focus on matters of interest to international candidates and teachers of English. These include: test fairness and difficulty; test anxiety and motivation; test washback on IELTS preparation course content, methods, materials and satisfaction. The use of the term ‘impact’ in the title of the study acknowledges Hamp-Lyons’ (2000: 586) definition of the term as concerning the “wider influences of tests”, that is matters beyond learning and teaching. Test impact includes ‘washback’, which Hamp-Lyons defines as “influences on teaching, teachers, and learning (including curriculum and materials)”.

IELTS Characteristics and Use

The IELTS test is owned, developed and delivered through the partnership of the British Council, IDP Education Australia and University of Cambridge ESOL Examinations (IELTS Annual Review, 2003). IELTS is a high-stakes test, used globally by around 500,000 candidates a year to assess their English language ability for study or work in places where English is the language of communication. In 2002, 76% of the candidates taking the IELTS test were seeking an English language qualification for their academic studies, 24% for immigration, training or employment purposes. IELTS assesses the four language macro-skills, listening, reading, writing, speaking, but has no pass/fail scores.
The institutions or organisations receiving IELTS candidates themselves decide, according to their perceptions of the language demands of each course or occupation concerned, which of the nine IELTS band scores should be their cut-off point.

The IELTS Impact Study

Research aim and focus

The IELTS impact study is part of a continuous programme of research to ensure that the test is as valid, effective and ethical as possible. IDP Education Australia and the British Council fund jointly agreed research projects on the IELTS test, and Cambridge ESOL undertakes research projects including the impact study which is the subject of this paper. The study seeks information in response to research questions on: the characteristics and attitudes of IELTS candidates; the views of English language teachers preparing these candidates for the test; the methods and materials used on preparation programmes; and receiving institution administrators' experiences with IELTS.

Research approach, structure, validation

As in all research projects, methodological choices had to be made. Figure 1 (Lazaraton, 2001 after Larsen-Freeman and Long, 1991) usefully represents quantitative vs qualitative research approaches as continua of by no means mutually exclusive method characteristics.

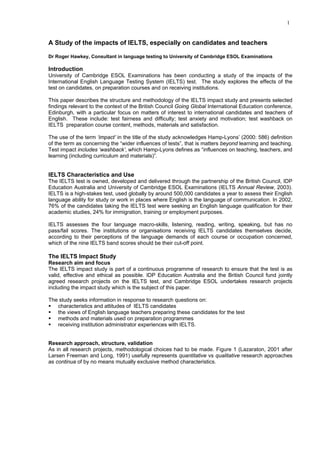

- 2. 2 Figure 1: Continua of research approaches

| Quantitative research | Continuum (* = approximate position of the IELTS impact study) | Qualitative research |
|---|---|---|
| controlled | `----------------- -----------------*--` | naturalistic |
| experimental | `---------------- ------------------*--` | observational |
| objective | `---------------- *-------------------` | subjective |
| inferential | `---------------- --*-----------------` | descriptive |
| outcome-oriented | `---------*------- --------------------` | process-oriented |
| reliable | `----------------- -------------------` | valid |
| particularistic | `----------------- --*----------------` | holistic |
| ‘hard’, ‘replicable’ data | `---------------- -*-----------------` | ‘real’, ‘rich’, ‘deep’ data |

As will become clear below, the IELTS impact study used both qualitative and quantitative research approaches, drawing on various points along the continua in Figure 1. The asterisk (*) signs on the chart suggest approximately how the approaches of the IELTS impact study might be categorised. No asterisk appears on the reliable-valid continuum, as the two do not appear to the writer to be conceptually or practically opposed, reliability being, rather, part of validity.

The IELTS impact study was structured in three phases.

1. In Phase One of the study, Cambridge ESOL Examinations commissioned Professor Charles Alderson and his research team at Lancaster University (see references below) to develop data collection instruments and pilot them on small groups of participants locally.
2. In Phase Two, these instruments were trialled on larger international participant groups similar to the target populations for the study. The trial data were analysed for instrument validation, revision and rationalisation by Cambridge ESOL Research and Validation Group staff and outside consultants (see, for example, Gardiner, 1999; Kunnan, 1999; Milanovic and Saville, 1996; Purpura, 1996; and Hawkey, 2004).
3. Phase Three of the IELTS impact study saw the administration and analysis of the revised data collection instruments.
In a paper commissioned as part of the IELTS impact study, How might impact study instruments be validated? (Alderson and Banerjee, 1996), the writers express some surprise at the apparent lack of “a well-developed literature on how the reliability and validity” of “questionnaires, interviews, surveys and classroom observation schedules” should be established. The IELTS impact study sought to ensure valid data collection instrumentation, and useful research lessons were learnt during this process about the differences between the validation of data collection instruments and the validation of tests. With data collection instruments, the veracity, rather than the accuracy or appropriacy, of what participants say or write is the key concern. However, since responses to questionnaire items may not always, for a variety of reasons, reflect what participants actually think or feel, checks are made, where feasible, through related items or through triangulation, that is, the use of more than one method or data source to investigate a particular phenomenon. A further distinction between tests and data collection instruments is that tests may include several items on the same construct or trait, whereas questionnaires may ask a question only once, so some test item analysis operations may not be appropriate for the validation of questionnaires. During Phase Two of the study, the IELTS impact study data collection instruments were subjected, where appropriate, to a range of validating measures, including: descriptive analyses (mean, standard deviation, skew, kurtosis, frequency); factor analysis; test-retest reliability analysis; convergent-divergent and multi-trait, multi-method validation; brainstorming, expert opinion and review.
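Two of the validating measures named above, descriptive analysis and test-retest reliability, can be illustrated with a minimal sketch. It is not the study's own procedure: the function names `describe` and `pearson` and the response data are hypothetical, the item is assumed to be scored on a 1 to 5 scale, and test-retest reliability is assumed here to be estimated with a Pearson correlation between two administrations of the same item.

```python
import math
from collections import Counter
from statistics import mean, pstdev


def describe(scores):
    """Descriptive statistics for one questionnaire item.

    Assumes the responses are numeric and not all identical (so SD > 0).
    """
    n = len(scores)
    m = mean(scores)
    sd = pstdev(scores)  # population SD, used in the moment ratios below
    skew = sum((x - m) ** 3 for x in scores) / (n * sd ** 3)
    # Excess kurtosis: 0 for a normal distribution
    kurtosis = sum((x - m) ** 4 for x in scores) / (n * sd ** 4) - 3.0
    return {
        "mean": m,
        "sd": sd,
        "skew": skew,
        "kurtosis": kurtosis,
        "freq": dict(Counter(scores)),
    }


def pearson(x, y):
    """Pearson correlation between paired responses (e.g. test and retest)."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


if __name__ == "__main__":
    # Hypothetical responses from the same nine participants on two occasions
    first_administration = [1, 2, 2, 3, 3, 3, 4, 4, 5]
    second_administration = [1, 2, 3, 3, 3, 4, 4, 4, 5]
    print(describe(first_administration))
    print(pearson(first_administration, second_administration))
```

A correlation near 1 would suggest that participants answer the item consistently across administrations. As the text notes, such statistics carry less weight for a questionnaire that asks each question only once than for a test with several items per construct.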
Research instrumentation and participation

Phase Three of the IELTS impact study saw the administration of the following revised data collection instruments: a modular IELTS test-taker questionnaire seeking information on background, language learning and testing experience, strategies, attitudes and preparation courses; a teacher questionnaire, covering background, views on IELTS and experience of IELTS-preparation programmes; an instrument for the evaluation of books used to prepare students for IELTS; an IELTS-preparation lesson observation analysis instrument; and a receiving institution IELTS administrator questionnaire.

- 3. 3 A total of 572 IELTS candidates from all world regions prominent in the actual IELTS test-taker population (see Figure 2) completed the IELTS impact study student questionnaires in 2002. There was a reasonable match between the two populations, though with imbalances in South Asia and Western Europe candidate representation.

Figure 2: Comparison of IELTS impact study and IELTS candidate regions

| World region | Impact study % | IELTS 2002 % |
|---|---|---|
| Central and Southern Africa (CSA) | 2 | 2 |
| East Asia Pacific (EAP) | 73 | 61 |
| Central and Eastern Europe | 3 | 3 |
| Central and South America | 1 | 1 |
| Middle East and North Africa | 1 | 4 |
| North America and Mexico | 1 | 1 |
| South Asia | 8 | 21 |
| Western Europe | 8 | 4 |
| Total | 97 | 97 |

Figure 3 presents a summary profile of the impact study candidate participants.

Figure 3: Profile of IELTS impact study candidate participants

| Characteristic | Category | Value |
|---|---|---|
| Gender | Female | 55% |
| | Male | 45% |
| Age | 15-20 | 35% |
| | 21-25 | 37% |
| | 26-30 | 16% |
| | 31-35 | 6% |
| | 36-40 | 4% |
| | 41-45 | 1% |
| | 46-50 | 0.3% |
| First languages | | 35 |
| Countries of origin | | 28 |
| Fields | Business, commerce, finance, marketing | 30% |
| | Health, social sciences, law | 26% |
| | Science, engineering | 14% |
| | Education, language | 13% |
| | Computer science, IT | 10% |
| | Art, design | 7% |
| Years of English | kindergarten to college/university | 10% |
| | primary school to college/university | 16% |
| | secondary school to college/university | 38% |
| | kindergarten to secondary | 3% |
| | kindergarten only | 1% |
| | primary only | 6% |
| | primary + secondary only | 4% |
| | secondary only | 16% |
| | college/university only | 4% |
| | outside classes only | 2% |
| | with extra language classes? | 56% |
| Educational levels* | pre-university | 6% |
| | undergraduate | 46% |
| | post-graduate | 48% |
| Pre- or post-IELTS | Pre-IELTS | 64% |
| | Post-IELTS | 36% |
| IELTS module taken* | Academic | 89% |
| | General Training | 11% |

\* Only post-IELTS candidates asked

The gender balance reverses the actual IELTS current male : female percentages, but ages, socio-linguistic histories, fields of study and educational levels reflected the overall IELTS population quite

- 4. 4 well. The IELTS test band scores of impact study candidates who had taken the test were close to the global averages. The focus of the study was mainly on IELTS Academic rather than General Training candidates. Eighty-three teachers, mainly teaching English on the preparation courses attended by the student participants, completed the impact study teacher questionnaire. Forty-three teachers completed the textbook evaluation instrument. To enhance and triangulate questionnaire data from student and teacher participants, 120 students, 21 teachers and 15 receiving institution administrators participated in face-to-face interviews and focus groups as part of the study. In addition, 12 IELTS preparation lessons were observed, video-recorded and analysed using the impact study classroom observation and analysis instrument.

Selected IELTS impact study findings

The findings cited here are selected for their potential interest to IELTS preparation teachers and for their relevance to British Council Going Global conference themes.

Perceptions of test fairness and difficulties

IELTS impact study candidate participants who had already taken IELTS were asked whether they thought IELTS a fair way to test their proficiency in English. Figure 4 summarises the responses (of the 190 test-takers concerned) to this question and to the follow-up question “If no, why not?”

Figure 4: IELTS takers’ perceptions of the fairness of the test

| Do you think IELTS is a fair way to test your proficiency in English? (N=190) | % |
|---|---|
| Yes | 72% |
| No | 28% |

If No, why not?

1. opposition to all tests
2. pressure, especially of time
3. topics
4. rating of writing and speaking
5. no grammar test

The 72% : 28% division on perceived test fairness may be considered a rather positive response, especially if, as is useful practice when developing questionnaire items, one attempts to predict the response of test-takers in general to such a question.
A related view was sought from the impact study teachers: 70% of their students’ actual IELTS overall band score results were perceived as being in accordance with the teachers’ expectations. When the IELTS test-takers’ specific objections to the test are pursued, the most frequent is, interestingly, opposition to all tests, followed by time pressure. The next most frequent concerns are more language-oriented. Worry about test topics, like time pressure, is a recurring theme in IELTS impact study data (see below). Figure 5 gives interesting related findings on factors candidates considered actually affected their IELTS performance, suggesting again that time pressure and topic unfamiliarity are seen as significant problems.

Figure 5: Factors affecting IELTS candidate performance (%)

| Factor | % |
|---|---|
| Time pressure | 40% |
| Unfamiliarity of topics | 21% |
| Difficulty of questions | 15% |
| Fear of tests | 13% |
| Difficulty of language | 9% |

Figure 6 suggests that IELTS candidates and preparation teachers have closely similar perceptions of the relative difficulties of the IELTS skills modules.

- 5. 5 Figure 6: Student and teacher perceptions of IELTS module difficulty

| Most difficult IELTS module? (%) | Students | Teachers |
|---|---|---|
| Reading | 49% | 45% |
| Writing | 24% | 26% |
| Listening | 18% | 20% |
| Speaking | 9% | 9% |

Interesting here is that the reading test is seen as significantly more difficult than the other skill modules. Whether this should be the case or not raises key validity questions about, for example, whether the reading activities of students, once they have entered English-medium higher education, are actually more demanding than activities in the three other macro-skills. To seek answers to such questions, relevant points are examined from the impact study face-to-face data, collected on visits to a selection of the 40 centres involved in the study. A frequently expressed view in the impact study interviews and focus groups was that it is, by definition, difficult to test reading and writing skills directly in ways that validly relate them to actual academic reading and writing activities. These tend to involve long, multi-sourced texts, handled receptively and productively, and heavy in reference and statistics, thus difficult to replicate in normal, time-constrained test contexts. Feeling that, on balance, time pressure on the IELTS reading test was too great, an impact study language centre teacher focus group suggested that the test should be allocated 1 hour 20 minutes rather than the current one hour. The same focus group proposed 1½ hours for the IELTS writing test (an increase of half an hour), also on the grounds that the time pressure was both excessive and unlike the target writing events in real university life.

Cultural diversity issues

We may also review impact study data on IELTS test fairness for implications relating to the international education theme of the British Council Going Global conference.
In response, for example, to a post-IELTS candidate questionnaire item on whether the test is appropriate for all nationalities / cultures, 73% of the 97 students responding answered positively. Most of the negative responses referred to learning approaches implied by the test, which may have been unfamiliar to the candidates, though they may well reflect the realities of academic life in the target countries concerned. From the language learning attitudes and strategies section of the student questionnaires, it emerged that 81% of the participants (n=528) claimed to try to learn about the cultures of English speakers, and 75% to read for pleasure in English. And from among 156 related face-to-face comments from impact study students and teachers, only the following few were related to cultural diversity issues: occasional inappropriate test content, such as questions on family to refugee candidates; target culture bias of IELTS topics and materials; disadvantages for speakers of non-European languages with regard to Latin-based vocabulary; and some IELTS-related micro-skills, e.g. the rhetoric of arguing one’s opinions, being new to candidates. Both teachers and students, however, recognised that most such matters needed to be prepared for as intrinsic to the international education challenge facing candidates.

Test anxiety and motivation

Learner and teacher views on the question of test anxiety compare interestingly, as the response summaries in Figure 7 indicate.

Figure 7: Candidate and teacher views of IELTS test anxiety and motivation

| Respondents | Question | Response | % |
|---|---|---|---|
| Candidates | Do you worry about taking the IELTS test? | Very much | 41% |
| | | Quite a lot | 31% |
| | | A little | 19% |
| | | Very little | 9% |
| Teachers | Does the IELTS test cause stress for your students? | Yes | 53% |
| | | No | 33% |
| | | Don’t know | 14% |
| Teachers | Does the IELTS motivate your students? | Yes | 84% |
| | | No | 10% |
| | | Don’t know | 6% |

- 6. 6 The table suggests that 72% of the candidates were very much or quite a lot worried about the test, which, like the similar figure cited above for test fairness, may be seen as a somewhat predictable rather than an exceptional proportion. Rather fewer of the preparation course teachers see IELTS as causing their students stress, but 84% see the test as a source of motivation for the students. The stress : motivation balance is clearly an interesting high-stakes test washback issue.

The IELTS preparation courses

The courses

It soon became clear that courses referred to by the selected impact study centres as “IELTS courses” came in various forms, as summarised in the table in Figure 8.

Figure 8: Preparation course types (n=233)

| Course type | Number |
|---|---|
| IELTS-specific | 108 |
| EAP / Study skills | 86 |
| General English with IELTS prep elements | 39 |

Teacher questionnaire response data indicated that the three most common class sizes on IELTS preparation courses were, in order of frequency, 11 to 15, 16 to 20 and 6 to 10.

IELTS test washback

Ninety per cent of the participant teachers agreed that IELTS influenced the content of their lessons, 63% that it also influenced their methodology. Fifty-five of 80 open-ended responses to the question on course content refer specifically to teaching in direct support of IELTS test components. This compares with 23 responses referring to teaching IELTS formats, techniques, skills, and 7 references to administering practice tests. Seven teachers referred to student demands for such a close test focus. The message is thus that there appears to be strong IELTS washback on the preparation courses in terms of both content and methodology.
But to avoid possible over-statement or over-simplification of the complex washback relationship between the courses and the test, it should be noted that responses to the content and methodology questions also featured references to teachers who emphasise improving students’ general proficiency (5), encourage study skills and wide reading (4), or set out to challenge students and build their confidence (3). To these examples of less test-oriented approaches should be added the 10% of teachers who claimed that the IELTS test did not influence their course content, and the 37% who said it did not influence their methodology.

Activities and micro-skills

Figure 9 summarises and compares IELTS impact study candidate and teacher selections, from a list on their questionnaires of 28 classroom activities, of those that are prominent in their IELTS preparation courses.

Figure 9: Candidate and teacher perceptions of prominent IELTS-preparation activities

| Activities | Students % | Teachers % |
|---|---|---|
| Reading questions and predicting text and answer types | 89 | 86 |
| Listening to live, recorded talks and note-taking | 83 | 63 |
| Analysing text structure and organisation | 74 | 90 |
| Interpreting and describing statistics / graphs / diagrams | 74 | 90 |
| Learning quick and efficient ways of reading texts | 73 | 93 |
| Reading quickly to get the main idea of texts | 77 | 96 |
| Learning how to organise essays | 82 | 99 |
| Practising making a point and providing supporting examples | 78 | 88 |
| Group discussion / debates | 83 | 76 |
| Practising using words to organise a speech | 74 | 83 |
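The student and teacher percentages in Figure 9 can be compared item by item. The sketch below is an illustration only, not part of the study's analysis: the percentages are copied from Figure 9, while the names `FIGURE_9` and `perception_gaps` are hypothetical. It computes the teacher percentage minus the student percentage for each activity and lists the largest gaps first.

```python
# Percentages copied from Figure 9: activity -> (students %, teachers %)
FIGURE_9 = {
    "Reading questions and predicting text and answer types": (89, 86),
    "Listening to live, recorded talks and note-taking": (83, 63),
    "Analysing text structure and organisation": (74, 90),
    "Interpreting and describing statistics / graphs / diagrams": (74, 90),
    "Learning quick and efficient ways of reading texts": (73, 93),
    "Reading quickly to get the main idea of texts": (77, 96),
    "Learning how to organise essays": (82, 99),
    "Practising making a point and providing supporting examples": (78, 88),
    "Group discussion / debates": (83, 76),
    "Practising using words to organise a speech": (74, 83),
}


def perception_gaps(table):
    """Return (activity, teachers % minus students %) pairs,
    ordered from the largest absolute gap to the smallest."""
    gaps = [
        (activity, teachers - students)
        for activity, (students, teachers) in table.items()
    ]
    return sorted(gaps, key=lambda pair: abs(pair[1]), reverse=True)


if __name__ == "__main__":
    for activity, gap in perception_gaps(FIGURE_9):
        # A positive gap means teachers selected the activity more often
        print(f"{gap:+4d}  {activity}")
```

On the Figure 9 data, the two largest gaps are 20 points each and run in opposite directions: listening and note-taking is selected more often by students, and learning quick and efficient ways of reading more often by teachers.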

- 7. 7 The influences of the IELTS test may still be seen in these activities, for example in their relationships with the four test modules (reading, listening, writing, speaking). But the potential is clear for the activities listed to provide classroom lessons with an interesting set of communicative activities, appropriate to students’ needs. This impression is reinforced by data from the IELTS impact study instrument for the analysis of textbook materials (Saville and Hawkey, 2004). Teachers completing the instrument on IELTS-related textbooks found that the books covered, in particular, micro-skills such as those listed, in rank order of selections, in Figure 10.

Figure 10: Perceived micro-skills coverage in IELTS preparation books

| Micro-skills | No. of selections (N=43) |
|---|---|
| identifying main points | 40 |
| identifying overall meaning | 38 |
| predicting information | 36 |
| retrieving and stating factual information | 34 |
| planning and organising information | 34 |
| distinguishing fact from opinion | 31 |
| drawing conclusions | 30 |
| making inferences | 29 |
| evaluating evidence | 27 |
| identifying attitudes | 23 |
| understanding, conveying meaning through stress and intonation | 15 |
| recognising roles | 12 |

Activities in the IELTS-related course books which the teacher analysers consider to encourage communicative opportunity are, in rank order, essays, group work, pair work, reports, reviews, reading, listening, viewing for personal interest, and role plays. It is part of the theory-based validity claim of IELTS that it reflects the language macro- and micro-skills needed in candidates’ target activities. We have seen, in the student and teacher data presented here, evidence that the IELTS preparation courses and their purpose-designed textbook materials are both influenced by the test and characterised by an emphasis on communicative activities and micro-skills.
Classroom observations

A check may be made on whether the IELTS preparation classes are, in action, as they are perceived by students and teachers, using evidence from the IELTS-preparation lessons recorded and analysed using the IELTS impact study classroom observation instrument. This describes lessons in terms of participation, timings, modes, activities and communicative opportunity time. The analyses of IELTS preparation lessons suggest that, while communicative activities across the four skills in line with the test tasks were prominent, the opportunities for learners to communicate on their own behalf varied quite significantly. General impressions from the analyses of the IELTS preparation lessons may be summarised as follows: learners who are motivated, but sometimes to the extent of wanting a narrower IELTS focus; teachers making confident choices of task-based, often inter-related skills activities, involving IELTS-relevant micro-skills; materials from within and beyond the textbook, often with multi-media treatments; an ethos of focused activity within a coherent, apparently learner-centred, institutional approach to preparation for IELTS; and multi-cultural learning and communicating activity, often one of the most attractive features of mixed-nationality classes.

Preparation course satisfaction

There is a high 83% positive response from the 282 candidates already studying in an English-medium situation to an open-ended item on whether their IELTS preparation courses provided them with the language knowledge and skills they needed. But this positive view of preparation courses does not, it seems, mean that candidates are fully satisfied with their own performance on the courses. When all the students were asked whether they felt they were successful on the courses, only 49.5% said yes.

- 8. 8 The main criteria for success mentioned by those responding positively to this item and adding their comments (n = 141) were: perceived improvement in English proficiency level (26 responses) or skills (14 responses), and increased familiarity with the test (33 positive responses). This result suggests an interesting balance in students’ perceptions of success between improvement in their target language level, and gaining more knowledge of and practice with the IELTS test. But a closer analysis of the reasons given for perceived lack of success on preparation courses showed the candidates focusing mainly on problems of their own, rather than on problems with the course itself (for example, in order: insufficient time or hard work, inadequate initial target language level, personal characteristics). As far as the IELTS impact study teachers are concerned, their IELTS preparation courses are considered successful compared with other courses they teach, with only 9% of the 83 teachers feeling otherwise. The reasons given by the teachers for the perceived success of their IELTS preparation courses were: clear course goals and focus (21); high student motivation (16); clear-cut student achievement potential (12); and course validity (11) in terms of student target language needs, topics and skills.

Conclusion

This summary of the aims, approaches and selected findings of the IELTS impact study has attempted to illustrate some of the processes, problems and revelations of impact research. The general impression of the IELTS test created by the findings of the study is that the test: is recognised as a competitive, high-stakes, four-skills, communicative task-based test; is seen as mainly fair, though hard, especially in terms of time pressures; assesses mainly target-domain content and micro-skills; may need to reconsider relationships between target and test reading and writing tasks; and should maintain continuous test validation activities, including through impact studies.
From the analyses of the qualitative and quantitative data collected, hypotheses will be developed on many aspects of IELTS impact. Findings and recommendations that are felt to need further inquiry will be compared with related IELTS research, or will receive such inquiry in a possible Phase Four of the impact study. Further information on the IELTS impact study can be obtained from: www.CambridgeESOL.org, Turner.r@ucles.org.uk or roger@hawkey58.freeserve.co.uk. The impact study will be described in detail, with a full analysis of data and findings, in Hawkey, 2005, forthcoming.

References

Alderson, J. and J. Banerjee. 1996: How might Impact study instruments be validated? Paper commissioned by the University of Cambridge Local Examinations Syndicate as part of the IELTS Impact Study.
Banerjee, J. V. 1996: The Design of the Classroom Observation Instruments, internal report, Cambridge: UCLES.
Bonkowski, F. 1996: Instrument for the Assessment of Teaching Materials, unpublished MA assignment, Lancaster University.
British Council, IDP IELTS Australia, University of Cambridge ESOL Examinations. 2003: Annual Review.
Hamp-Lyons, L. 2000: Social, professional and individual responsibility in language testing. System 28, 579-591.
Hawkey, R. 2004: ‘An IELTS Impact Study: Implementation and some early findings’. Research Notes, 15. Cambridge: Cambridge ESOL.
Hawkey, R. 2005: The theory and practice of impact studies, with special reference to the IELTS and the Progetto Lingue 2000 studies. Cambridge: Cambridge University Press.
Herington, R. 1996: Test-taking strategies and second language proficiency: Is there a relationship? Unpublished MA dissertation, Lancaster University.
Horak, T. 1996: IELTS Impact Study Project, unpublished MA assignment, Lancaster University.

- 9. 9 Lazaraton, A. 2001: ‘Qualitative research methods in language test development and validation’, in European Language Testing in a Global Context: proceedings of the ALTE Barcelona Conference July 2001. Cambridge: Cambridge University Press.
Milanovic, M. and N. Saville. 1996: Considering the Impact of Cambridge EFL Examinations, internal report, Cambridge: UCLES.
Gardiner, K. 1999: Analysis of IELTS impact test-taker background questionnaire. UCLES IELTS EFL Validation Report.
Kunnan, A. 2000: IELTS impact study project. Report prepared for UCLES.
Purpura, J. 1996: ‘What is the relationship between students’ learning strategies and their performance on language tests?’ Unpublished paper given at UCLES Seminar, Cambridge, June 1996.
Larsen-Freeman, D. and M. Long. 1991: An Introduction to Second Language Acquisition Research. London: Longman.
Saville, N. and R. Hawkey. 2004: A study of the impact of the International English Language Testing System, with special reference to its washback on classroom materials, in Cheng, L., Watanabe, Y. and Curtis, A. (eds): Concept and method in washback studies: the influence of language testing on teaching and learning. Mahwah, New Jersey: Lawrence Erlbaum Associates, Inc.
Winetroube, S. 1997: The design of the teachers' attitude questionnaire. Internal report. Cambridge: UCLES.
Yue, W. 1997: An investigation of textbook materials designed to prepare students for the IELTS test: a study of washback, unpublished MA dissertation, Lancaster University.