Syntax analysis

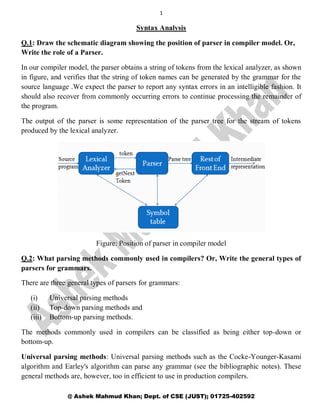

- 1. 1 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 Syntax Analysis Q.1: Draw the schematic diagram showing the position of parser in compiler model. Or, Write the role of a Parser. In our compiler model, the parser obtains a string of tokens from the lexical analyzer, as shown in figure, and verifies that the string of token names can be generated by the grammar for the source language .We expect the parser to report any syntax errors in an intelligible fashion. It should also recover from commonly occurring errors to continue processing the remainder of the program. The output of the parser is some representation of the parser tree for the stream of tokens produced by the lexical analyzer. Figure: Position of parser in compiler model Q.2: What parsing methods commonly used in compilers? Or, Write the general types of parsers for grammars. There are three general types of parsers for grammars: (i) Universal parsing methods (ii) Top-down parsing methods and (iii) Bottom-up parsing methods. The methods commonly used in compilers can be classified as being either top-down or bottom-up. Universal parsing methods: Universal parsing methods such as the Cocke-Younger-Kasami algorithm and Earley's algorithm can parse any grammar (see the bibliographic notes). These general methods are, however, too in efficient to use in production compilers.

- 2. 2 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 Top-down parsing methods: Top-down methods build parse trees from the top (root) to the bottom (leaves). Bottom-up parsing methods: Bottom-up methods start from the leaves and work their way up to the root. Q.3: What types of error can program contain? Or, Discuss errors that may occur doing compilation. Or, Explain error seen by each phase. We know that program may contain errors at many different levels. So error can be- (i) Lexical errors (ii) Syntactic errors (iii) Semantic errors (iv) Logical errors Lexical errors: Lexical errors include misspellings of identifiers, keywords, or operators e.g., the use of an identifier ellipse Size instead of ellipse Size—and missing quotes around text intended as a string. Syntactic errors: It may occur due to grammatical error in statements of a program. For example an arithmetic expression with unbalanced parenthesis as (((a+a)*c)-d). Another example in Pascal statement- If(a==b) then stmt Semantic errors: Semantic errors include type mismatches between operators and operands. An example is a return statement in a Java method with result type void. For example, in c statement int c= a+2.5 Logical errors: it may occur due to an infinitely recursive call. For example in c statement- for (i=0; i<i+1; i++) { stmt } Q.4: Why is much of the error detection and recovery in a compiler conte around the syntax analysis phase? There are two reasons. They are given below –

- 3. 3 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 1. Many errors are syntactic in nature or one exposed when the stream of tokens coming from the lexical analyzer disobeys the grammatical roles defining the programming language. 2. The precision of modern parsing methods. Q.5: What are the goals of error handler? The error handler in a parser has goals that are simple to state but challenging to realize: • Report the presence of errors clearly and accurately. • Recover from each error quickly. • Add minimal over head to the processing of correct programs. Q.6: What are the error detection and recovery? Error detection: Error detection means detect error from the source program by an error handler. Error handlers report the presence of an error. Error handler should report the place in the source program where an error is detected. Detecting error may be lexical, syntactic, semantic and logical. Error recovery: Error recovery means recover strategy that recover detected error from the source program. The balance of this section is devoted to the following recovery strategies: panic-mode, phrase-level, error-productions, and global-correction. Q.7: Describe different error recovery strategic. Or, Discuss error recovery. There are many different general strategic that a parser can employ recover from a syntactic error. These are: 1. Panic-Mode 2. Phrase-level 3. Error-productions and 4. Global-correction. Panic mode recovery: This is the simplest method to implement and can be used by most parsing methods. Discard input symbol one at a time until one of designated set of synchronization tokens is found. Phrase level recovery: Replacing a prefix of remaining input by some string that allows the parser to continue.

- 4. 4 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 Error productions: Using an error production we can generate appropriate error diagnostic to indicate the erroneous constructs that has been recognized in the input. Global correction: Choosing minimal sequence of changes to obtain a globally least-cost correction. Q.8: Define context-free grammar with example. Or, Define with example the four quantities of a grammar. Or, Define context-free grammar. Write a CFG for arithmetic expressions. Context-free grammar: A grammar consists of collection of rules called productions. Each rule appears as a line in the grammar, comprising a symbol and a string separated by an arrow. The symbol is called a variable. The string consists of variables and other symbols. A A+1 B A B # Formal definition of context-free Grammar: A context-free Grammar is a 4-tuple (V, ∑, R, S), where- 1. V is a finite set called variables, 2. ∑ is a finite set, disjoint from V, called the terminals, 3. R is a finite set of rules, with each rule being a variable and a string of variables and terminals, 4. S Є V is the start variable. For example, a CFG for arithmetic expression is: G= ({E,A},{id,+,-,*,/,(,) },P,E) where, P is defined as follows: E -> E A E | (E) | -E | id A-> + | - |* | / | Components: There are four components in a grammatical description of a language- 1. Terminals: There is a finite set of symbols that form the strings of the language being defined. These symbols are called terminals or terminal symbols. 2. Non-Terminals: There is a finite set of variables where each variable represents a language; i.e. a set of strings. These variables are called non-terminals.

- 5. 5 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 3. Start Symbol: One of the variable represents the language being defined it. These variables is called start symbol. 4. Production or Rules: There is a finite set of production or rules that represent the recursive definition of the language. Each production consists of- A variable that is being defined by the production. This variable is often called the head of the production The production symbol, and A string of zero or more terminals and variables. (Body of production). Q.9: Define recursive inference. Recursive inference is a procedure to infer that certain strings are in the language of a certain variable. It uses the roles from body to head. Q.10: Define palindrome. A palindrome is a string that reads the same forward and backward. Put another way, string W is a palindrome if and only if W=WR . Let us consider the palindromes with alphabet {0,1}. This language includes strings like 0110, 11011 and t. Q.11: Define derivations. Define different types of derivation with example. Derivations are a procedure that determines terminal string from start symbol by replacing right side of a production in terms of terminals and/or non terminals of the other productions. For example, consider the following grammar for arithmetic expression: E -> E + E | E * E | -E | (E) | id Now, E => -E => -(E) => -(id) Or, E => -(id) where, the symbol => means derives in one step and the symbol =* > means derives in zero or more steps. Left-most derivation: If we always replace the leftmost variable in a string, then the resulting derivation is called the leftmost derivation. For example, E => -E => -(E) => -(E+E) => -(id+E)=>-(id+id) Or, E=>-(id+id) Rightmost derivation: If we always replace the rightmost variable in a string, then the resulting derivation is called the rightmost derivation. For example,

- 6. 6 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 E => (E) => (E+E) => (E+id)=> (id+id) Or, E=> (id+id) Parse trees: -(id+id) E => -E => -(E) => -(E+E) => -(id+E)=>-(id+id) Q.12: Define sentential forms. Define different types of sentential form with example. Or, Explain sentential form for a grammar. Sentential forms: Derivations from the start symbol produce strings and these are called sentential forms. Formally, if G=(V,T,P,S) is a CFG, then an string 𝛼 in (V∪T)* such that S =* > 𝛼 is a sentential form. For example, consider the following grammar for arithmetic expression: E -> E + E | E * E | -E | (E) | id The string –(id+id) is a sentence of the above grammar because there is a derivation E => -E => -(E) => -(E+E) => -(id+E)=>-(id+id) Where string E,-E,-(E),……..,-(id+id) appearing in this derivation are all sentential form of this grammar. Q.13: Define context-free language. What do you mean by equivalent? Context-free language: A language that can be generated by a grammar is said to be a context-free language. That is, L(G)={W in T*|S =* >W} Where, W is any string in terminals T*

- 7. 7 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 Equivalent grammar: If two grammars generated the same language, the grammars are said to be equivalent. Q.14: Consider the following grammar for list structures. S→ a | A | (T) 𝑇 → T, S | S In the above grammar find the leftmost and right most derivation for: (i) (a,(a,a)) (ii) (((a,a),n,(a)),a) (i) Leftmost derivation: Rightmost derivation: (ii) Leftmost derivation: Rightmost derivation:

- 8. 8 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 Q.15: What do you mean by parse tree? Parse tree: A parse tree pictorially shows how the start symbol of a grammar derives a string in the language. If non-terminal A has a production A → XYZ, then a parse tree may have an interior node labeled A with three children labeled X,Y and Z from left to right: A Properties of parse tree: (i) The root labeled by the start symbol. (ii) Each leaf is labeled by a token or by t. (iii) Each internal node is labeled with a non-terminal. (iv) Each use of a rule in a derivation explains how to generate children in the parse tree from the parents. Q.16: Define Yield of tree and parsing. Yield of tree: The leaves of a parse tree read from left-to-right from the yield of the tree, which is the string generated or derives from the non-terminal at the root of the parse tree. Parsing: The process of finding a parse tree for a given string of tokens is called parsing that string. Q.17: Design parse tree for id | id*id. Consider the following grammar for arithmetic expression: E→EAE| (E) |-E| id A→ + | - | * | / | Parse tree for id | id*id is shown in below: Q.18: Consider the sequence of the parse trees for the following example. E => -E => -(E) => -(E+E) => -(id+E)=>-(id+id)

- 9. 9 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 Q.19: Consider the grammar: (1)s→ icts (2)s→ictscs (3)s→a (4)c→b Here i, t and c stand for if, then, and else. C and s stand for “conditional” and “statement”. Construct a left most derivation for the sentence w = ibtibtaca. Leftmost derivation for the sentence w= ibtibtaca is given below- Q.20: Consider the grammar: E→ EAE | (E) | -E | id A→ + | - | * | / | Construct two parse tree for the sentence id+id*id The two parse trees for the sentence id+id*id are shown in figure: Q.21: What do you understand by ambiguous grammar?

- 10. 10 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 Ambiguous Grammar: A string ѡ is derived ambiguously in context-free grammar G, if it has two or more different leftmost derivations. A grammar G is ambiguous if it generates some string ambiguously. (1) E→E+E →E+E*E (2) E→E*E →E+E*E Q.22: Define unambiguous grammar. Unambiguous grammar: A CFG is unambiguous if each string in T* has at most one parse tree. Q.23: Show that the following ambiguous: E→E+E | E*E | id To show that the grammar is ambiguous, we have to find a string w for which we have two leftmost derivations and hence two parse trees. Let, w = id+ id * id The two leftmost derivations are: The two parse trees for the two derivations are: As, we have two parse trees for the string w. the grammar is ambiguous. Q.24: When regular expression and CFG are equivalent?

- 11. 11 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 If regular expression and CFG describe the same language then this situation there are equivalent. For example, the regular expression (a|b)* abb and the grammar. A0→ aA0|bA0|aA1 A1→aA2 A2→ bA3 A3→ t Describe the same language, the set of strings of a‘s and b‘s ending in abb. Q.25: Why use regular expressions to define the lexical syntax of a language? There are several reasons: 1. Separating the syntactic structure of a language in to lexical and non-lexical parts provides a convenient way of modularizing the frontend of a compiler in to two manageable-sized components. 2. The lexical rules of a language are frequently quite simple, and to describe them we do not need a notation as powerful as grammars. 3. Regular expressions generally provide a more concise and easier-to-understand notation for tokens than grammars. 4. More efficient lexical analyzers can be constructed automatically from regular expressions than from arbitrary grammars. Q.26: Distinguish between the regular expression and CFG. 1. Regular expressions are most useful for describing the structure of lexical constructs such as identifier, constants, keyword and so forth. Whereas grammars are most useful in describing nested structures such as balanced parentheses, matching, if then else‘s and so on. 2. Regular expression generally provides a more concise and easier to understand notation for tokens then grammars. 3. More efficient lexical analyzers can be constructed automatically from regular expressions than from arbiter grammars. Q.27: Define left and right recursion. Left recursion: A grammar is left recursion if it has a non terminal A such that there is a derivation A→+ A𝛼 for some string 𝛼. For example consider the following grammar for simple arithmetic expression: E→ EAE | (E) | -E | id A→ + | - | * | / | is left recursive because it follows the A→+ A𝛼 condition.

- 12. 12 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 Right recursion: A grammar is right recursive if it has a non terminal R such that there is a derivation R→+ 𝛼R for some string 𝛼. Q. 28: Consider the following grammar: E→ E+T | T T→ T*F | F F→ (E) | id, Eliminate the immediate left recursion. Ans: Eliminate the immediate left recursion to the production for E and then for T, we get Q. 29: Consider the following grammar: E→ EAE | (E) | -E | id A→ + | - | * | / | , Eliminate the immediate left recursion. Ans: Eliminate the immediate left recursion to the production for E, we obtain Q. 30: Consider the following grammar: S→ Aa | b A → Ac | Sd | t , Eliminate the indirect left recursion. Ans: we know that, eliminating left recursion do not work if the grammar, the production t- production. In this above grammar, the production A → t turns out to be hard less. We substitute the s- production in A→ Sd to obtain the following A production. A → Ac | Aad | bd | t Eliminating the immediate left recursion among the A productions yields the following grammar. S→ Aa | b

- 13. 13 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 Q. 31: Define left factoring with example. Left factoring is a grammar transformation that is useful for producing a grammar suitable for predictive ,or top-down, parsing .When the choice between two alternative ^-productions is not clear, we may be able to rewrite the productions to defer the decision until enough of the input has been seen that we can make the right choice. For example, if we have the two productions- stmt → if expr then stmt else stmt On seeing the input if, we cannot immediately tell which production to choose to expand stmt. However, we may defer the decision by expanding A to aA'. Then, after seeing the input derived from a, we expand A' to ft or to ft .That is, left-factored, the original productions become A→ aA', A' ^ ft | ft Q. 32: Consider the following grammar: S→ iEts | iEtses | a E→ b, find the left factor grammar. Ans: Left factor, this above grammar becomes: S → iEtss' | a s' → es| t E → b Q. 33: Explain the distinction between top down parsing and bottom up parsing. Ans: The distinction between top down parsing and bottom up parsing are given below- Top down parsing attempts to construct the parse tree for some sentence of the language by starting at the root of the parse tree. Whereas, bottom up attempts to build the parse tree by string at the leafs of the tree. Top down parsers are generally easy to write manually. Whereas, bottom up parsers are usually created by parser generators and tend to be faster. Q. 34: Explain the recursive descent parsing with example. Recursive descent parsing: Recursive descent parsing is a top down method of syntax analysis in which we execute a set of recursive procedures to process the input. The recursive name comes from the recursive nature of a grammar and the descent name comes the fact that it builds the parse tree top down by starting at the root (start symbol).

- 14. 14 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 For example, consider the grammar: S→ 𝑐𝐴𝑑 A→ 𝑎𝑏|𝑎 and the input string w=cad. To construct a parse tree by using recursive descent parsing. Initially, create a tree consisting of a single node labeled S. an input pointer points to c, the first symbol of w. Q. 35: List the steps of constructing transition diagram for a predictive parser. The steps of constructing transition diagram for a predictive parser is given below- To construct the transition diagram for a predictive parser from a grammar, first eliminate left recursion from the grammar and then left factor he grammar. Then for each non-terminal A do the following: (i) Create an initial and final (return) state. (ii) For each production A →X1X2……….Xn create a path from the initial to the final state, with edges labeled X1,X2,……Xn Q. 36: Explain Non-recursive Predictive Parsing. Non-recursive Predictive Parsing: A non-recursive predictive parser can be built by maintaining a stack explicitly, rather than implicitly via recursive calls. The parser mimics a left most derivation. If w is the input that has been matched so far, then the stack holds a sequence of grammar symbols a such that S wd Input buffer: The input buffer contains the string to be parsed follow by $, a symbol used as a right end marker to indicate the end of the input string. Stack: The stack contains a sequence of grammar symbols with $ on the bottom indicating the bottom of the stack. Parsing table: The parsing table is a two dimensional array M[A,a] where A is a terminal and a is a terminal or the symbol $.

- 15. 15 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 Input a + b $ Fig: Model of a non-recursive predictive parser. To parser with the table, we use the following rules (i) If X=a=$, the parser half and announces successful completion of the parsing. (ii) If X=a≠$, then pop a and advance to next input symbol. (iii) Pop X and push the role in the table indexed at M[X,a]. M[X,a] →{𝑋 →ABC} Q. 37: Consider the grammar: E→TE E→ +𝑻𝑬 | 𝒕 T → 𝑭𝑻 T → *FT | t F → (𝑬)| id (i) Construct the predictive table for the grammar. (ii) Show that the stack content of predictive parsing for input sentence id+id*id. (i) The predictive table for the grammar is shown below by using FIRST and FOLLOWS function: Non- terminal Input SYMBOL id + * ( ) $ E E→ 𝑇𝐸 E→ 𝑇𝐸 E E→ 𝑇𝐸 E→ 𝑡 E→ 𝑡 T T→ 𝐹𝑇 T→ 𝐹𝑇 T T→ 𝑡 T→∗ 𝐹𝑇 T→ 𝑡 E→ 𝑡 F F → 𝑖𝑑 F→ (𝐸)

- 16. 16 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 (ii) The stack content of predictive parsing for input sentence id + id * id is given below: Stack Input Output $E id + id * id$ E→ 𝑇𝐸 $E T id + id * id$ T→ 𝐹𝑇 $E T F id + id * id$ F → 𝑖𝑑 $E T id id + id * id$ $E T + id * id$ T→ 𝑡 $E + id * id$ E→ +𝑇𝐸 + $E T+ + id * id$ $E T id * id$ T→ 𝐹𝑇 $E T F id * id$ F → 𝑖𝑑 $E T id id * id$ $E T * id$ T→ 𝐹𝑇 $E T F* * id$ $E T F id$ F → 𝑖𝑑 $E T id id$ $E T $ T→ 𝑡 $E $ E→ 𝑡 $ $ Q. 38: Write the rule to compute FIRST (X). To compute FIRST(X ) for all grammar symbols X, apply the following rules until no more terminals or e can be added to any FIRST set . 1. If X is a terminal, then FIRST(X) = {X}. 2. If 𝑋 → 𝒕 is a production, then FIRST (X) is {t}. 3. If X is a non-terminal, and X= Y1Y2………Yk is a production, then place a in FIRST (X) if for some i, a is in FIRST (X) and t is in all of FIRST (Y1) …….FIRST (Yi-1). Q. 39: Write the rule of compute FOLLOW (A) To compute FOLLOW (A) for all non-terminals A, apply the following rules until nothing can be added to any FOLLOW set. 1. Place $ in FOLLOW (s), where s is the start symbol and $ is the input right end marker. 2. If there is a production A→ 𝛼B𝛽, then everything FIRST (𝛽) except for t is placed in FOLLOW (B). 3. If there is production A→ 𝛼B or a production A→ 𝛼B𝛽 where FIRST (B) contains t, then everything in FOLLOW (A) in FOLLOW (B).

- 17. 17 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 Q. 40: Consider the following grammar: E→TE E→ +𝑻𝑬 | 𝒕 T → 𝑭𝑻 T → *FT | t F → (𝑬)| id Compute FIRST and FOLLOW sets FIRST (E) = FIRST (T) = FIRST (F) = { (,id } FIRST (E) = {+, t} FIRST (T) = {*, t} The first set for any terminal contains only the terminals FOLLOW (E) = FOLLOW (E) = { ), $ } FOLLOW (T) = FOLLOW (T) = { +, ), $ } FOLLOW (F) = { +, *, ), $ } Q. 41: Consider the grammar: E→TE E→ +𝑻𝑬 | 𝒕 T → 𝑭𝑻 T → *FT | t F → (𝑬)| id construct first and follow for the above grammar. FIRST (E) = FIRST (T) = FIRST (F) = { (,id } FIRST (E) = {+, t} FIRST (T) = {*, t} FOLLOW (E) = FOLLOW (E) = { ), $ } FOLLOW (T) = FOLLOW (T) = { +, ), $ } FOLLOW (F) = { +, *, ), $ }. Q. 42: What is LL(l) grammar? A grammar whose parsing table has no multiply defined entries is called LL (l) grammar. Q. 43: What do you mean by bottom-up parsing? Or, what do you understand by shift- reduce parsing? Or, Describe shift-reduce parsing technique with proper example.

- 18. 18 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 Bottom-up parsing: A bottom-up parse corresponds to the construction of a parse tree for an input string beginning at the leaves (the bottom) and working up towards the root (the top). This section introduces a general style of bottom-up parsing known as shift-reduce parsing. The largest class of grammars for which shift-reduce parsers can be built, the LR grammars. For example, consider the grammar: S→ aABc E→ E+E | E*E| (E)| id A→ Abc | b and string id + id * id B→ d String from a sentence of the grammar we attempt to reduce the sentence to the start symbol: abbcde aAbcde (A→ 𝑏) aAde (A→ 𝐴𝑏𝑐) aABe (B→ 𝑑) S (S→ 𝑎𝐴𝐵𝑒) These reduction trace out the following right most derivation in reverse. S ⟹aABe ⟹ 𝑎𝐴𝑑𝑒 ⟹ 𝑎𝐴𝑏𝑐𝑑𝑒 ⟹ 𝑎𝑏𝑏𝑐𝑑𝑒 E⟹ 𝐸 ∗ 𝐸 ⟹ 𝐸 ∗ 𝑖𝑑 ⟹ 𝐸 + 𝐸 ∗ 𝑖𝑑 ⟹ 𝐸 + 𝑖𝑑 ∗ 𝑖𝑑 ⟹ 𝑖𝑑 + 𝑖𝑑 ∗ 𝑖𝑑 Q. 44: Define handles with proper example. Handles: Informally a handle of a string is a substring that matches the right side of a production. Formally, a handle of some right sentential form Y is a production A→ 𝛽 and a position is 𝛾 where 𝛽 can be found and replaced with A to produce the previous right sentential form. For example, abbcde has a handle A→b at position 1. And aAbcde has a handle A→Abc at position 2. For example, consider the following grammar: E→ E+E E→E*E E→ (E) E→ id and a right most derivation of id1+id2*id3 is: E⟹ E+E

- 19. 19 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 ⟹ E+E*E ⟹ E+E* id3 ⟹ E+id2*id3 ⟹ id1+id2*id3 The handles for each sentential form have been underlined. Q. 45: Define handle Pruning. Handle Pruning: A right most derivation in reverse can be obtained by ―handle pruning‖. For example, consider the grammar: E→ E+E E→E*E E→ (E) E→ id, and the input string id1+id2*id3 The sentence of reductions shown in fig. reduces id1+id2*id3 to the start symbol E. Right sentential form Handle Reducing production id1+id2*id3 id1 E→ id E+id2*id3 id2 E→ id E + E *id3 id3 E→ id E + E * E E * E E→ E * E E + E E + E E→ E + E E Fig. Reduction mode by shift reduce parser Q.46: Write the advantages of handle Pruning. There are two problems that must be solved if we are to parse by handle Pruning: 1. The first is to locate the substring to be reduced in a right sentential form. 2. The second is to determine what production to chosen in case there is more than one production with that substring on the right side. Q.47: Briefly explain stack implementation of shift-reduce parsing. A convert way to implement a shift reduce parser is to use a stack to hold grammar symbols and an input buffer to hold the string w to be parsed. We use $ to make the bottom of the stack and also right end of the input. Initially, the stack is empty and the string w is on the input as follows:

- 20. 20 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 STACK INPUT $ W$ The parser operates by shifting zero or more input symbols onto the stack until a handle 𝛽 to the left side of the stack. The parser then reduces 𝛽 to the left side of the appropriate production. The parser repeats this cycle until it has detected an error or until the stack contains the start symbol and the input is empty. STACK INPUT $ S $ Q.48: Write the operations of shift reduce parser. While the primary operations of the parser are shift and reduce, there are actually four possible actions a shift-reduce parser can make: (i) shift (ii) Reduce (iii) Accept (iv) Error. Shift: The parser shifts when it needs the next input symbol on the stack. Reduce: The parser reduces when it sees a handle on the top of the stack. Accept: The parser accepts when the input is empty and the start symbol on the stack. Error: An error occurs when a configuration occurs with the stack and input that is unrecognized. Q.49: Define variable prefixes. During shift reduce parsing, the stack has various configurations. These are called prefixes of the grammar. Q.50: Write the problems in shift reduce parsing. The two most common problems are the shift reduce conflict and the reduce/reduce conflict. Shift reduce conflicts occur when the parser cannot decide whether to shift a token onto the stack or reduce a Handel. Reduce/reduce conflicts occur when the parser cannot decide which reduction to make. Q.51: Show that the stack control of shift reduced parsing for input sentence: (i) id1 * id2 * id3 * (ii) id1 + id2 * id3 and the grammar is: E→ E+E | E*E| (E)| id Given the grammar is: E → E+E | E*E| (E) | id

- 21. 21 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 The right most derivation for input sentence id1 * id2 * id3 * is: The stack control of shift reduced parsing for input sentence id1 * id2 * id3 * is shown in table- Stack Input Action (01) $ id1 * id2 * id3 * $ Shift (02) $ id1 * id2 * id3 * $ Reduce by E→ id (03) $ E * id2 * id3 * $ Shift (04) $ E* id2 * id3 * $ Shift (05) $ E* id2 * id3 * $ Reduce by E→ id (06) $ E*E * id3 * $ Shift (07) $ E*E* id3 * $ Shift (08) $ E*E*id3 * $ Reduce by E→ id (09) $ E*E*E * $ Shift (10) $ E*E*E* $ Reduce by E→ E*E (11) $ E*E* $ Reduce by E→ E*E (12) $ E* $ Error Q.52: Explain operator precedence parsing with example. Operator precedence parsing: The operator precedence parsing is an effective technique for a small class of grammar with no production right side is t or has two adjacent no terminals. These parsers rely on the following three precedence relations: Relation Meaning a <· b a yields precedence to b a =· b a has the same precedence as b a ·> b a takes precedence over b These operator precedence relations allow delimiting the handles in the right sentential forms: <· marks the left end, =· appears in the interior of the handle, and ·> marks the right end. For example, the following operator precedence relations can be introduced for simple expressions:

- 22. 22 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 id + * $ id ·> ·> ·> + <· ·> <· ·> * <· ·> ·> ·> $ <· <· <· The stack controls are the parsing of the string id+id*id. STACK INPUT ACTION (1) id+id*id $ Shift (2) id +id*id $ Reduce (3) +id*id $ Shift (4) + id*id $ Shift (5) + id *id $ Reduce (6) + *id $ Shift (7) +* id $ Shift (8) +*id $ Reduce (9) +* $ Reduce (10) + $ Reduce (11) $ Q.53: What are the disadvantages of operator precedence parsing? Operator precedence parsing has a number of disadvantages: 1. It is hard to handle is token. 2. Worse are cannot always be sure the parser accepts exactly the desired language. 3. Finally only a small class of grammars can be parsed using operator precedence techniques. Q.54: What are the advantages of operator precedence parsing? Because of its simplicity numerous compilers using operator precedence parsing technique for expression have been built successfully. Q. 55: Define and classify LR parser. LR parser: LR parser is an efficient bottom-up syntax analyzer that can be used to large class of CFG. The technique is called LR parsing. Where ‗L‘ stands for left-to-right scanning of the input, ‗R‘ stands for constructing right-most derivations in reverse.

- 23. 23 @ Ashek Mahmud Khan; Dept. of CSE (JUST); 01725-402592 The LR parsers are classified according to the techniques used in constructing LR parsing table for grammars. They are: 1. Simple LR parser (SLR): It is easiest to important, but less powerful of all. 2. Canonical LR parser (CLR): It is most powerful and the most expensive. 3. Look ahead LR parser (LLR): It is intermediate in power and cost between the other two.