Resume_Abhinav_Hadoop_Developer

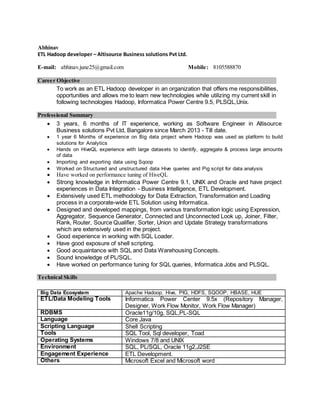

- 1. Abhinav
     ETL Hadoop Developer – Altisource Business Solutions Pvt Ltd.
     E-mail: abhinav.june25@gmail.com
     Mobile: 8105588870

     Career Objective: To work as an ETL Hadoop developer in an organization that offers me responsibilities and opportunities, and allows me to learn new technologies while utilizing my current skills in Hadoop, Informatica PowerCenter 9.5, PL/SQL, and UNIX.

     Professional Summary:
     - 3 years 6 months of IT experience, working as a Software Engineer at Altisource Business Solutions Pvt Ltd, Bangalore, from March 2013 to date.
     - 1 year 6 months of experience on a Big Data project in which Hadoop was the platform for building analytics solutions.
     - Hands-on HiveQL experience identifying, aggregating, and processing large datasets.
     - Imported and exported data using Sqoop.
     - Worked with structured and unstructured data.
     - Wrote Hive queries and Pig scripts for data analysis.
     - Worked on performance tuning of HiveQL.
     - Strong knowledge of Informatica PowerCenter 9.1, UNIX, and Oracle, with project experience in Data Integration: Business Intelligence and ETL Development.
     - Extensively used ETL methodology for data extraction, transformation, and loading in a corporate-wide ETL solution built with Informatica.
     - Designed and developed mappings with transformation logic using Expression, Aggregator, Sequence Generator, Connected and Unconnected Lookup, Joiner, Filter, Rank, Router, Source Qualifier, Sorter, Union, and Update Strategy transformations, which are used extensively in the project.
     - Good experience working with SQL*Loader.
     - Good exposure to shell scripting.
     - Good acquaintance with SQL and data warehousing concepts.
     - Sound knowledge of PL/SQL.
     - Worked on performance tuning of SQL queries, Informatica jobs, and PL/SQL.

     Technical Skills:
     - Big Data Ecosystem: Apache Hadoop, Hive, Pig, HDFS, Sqoop, HBase, Hue
     - ETL/Data Modeling Tools: Informatica PowerCenter 9.5x (Repository Manager, Designer, Workflow Monitor, Workflow Manager)
     - RDBMS: Oracle 11g/10g, SQL, PL/SQL
     - Language: Core Java
     - Scripting Language: Shell Scripting
     - Tools: SQL Tool, SQL Developer, Toad
     - Operating Systems: Windows 7/8 and UNIX
     - Environment: SQL, PL/SQL, Oracle 11g2, J2SE
     - Engagement Experience: ETL Development
     - Others: Microsoft Excel and Microsoft Word

- 2. PROJECTS UNDERTAKEN:

     Project: iCasework (Nov 2015 – Jun 2016)
     Location: Bangalore
     Client: Ocwen
     Environment: Hadoop, HDFS, Hive, Pig, Sqoop, Java, Oracle 11g, Shell Scripting
     Team Size: 4

     iCasework is a complaint and inquiry management tool that Ocwen uses to track all of its customer- and vendor-related complaints and queries. Ocwen services more than 5 million loans and has implemented the Hadoop framework to analyze various datasets. We received data from iCasework as comma-separated files, by connecting to its HTTPS server from a shell script, and also from the relational database.

     - Moved all history data flat files to HDFS for further processing.
     - Wrote MapReduce code to process the history data.
     - Worked with core Hadoop concepts: HDFS and MapReduce (including JobTracker and TaskTracker).
     - Created Hive tables to store the processed results in tabular format.
     - Developed Sqoop scripts to transfer data between Hive and Oracle.
     - Fully involved in the requirements analysis phase.
     - Involved in import and export using Sqoop for job entries.
     - Involved in writing the shell script that connects to the iCasework server using the curl utility and downloads the files used as the source.
     - Involved in complete automation of the project.

     Project: Database Migration (Jan 2015 – Oct 2015)
     Location: Bangalore
     Environment: Informatica PowerCenter 9.5, Oracle, UNIX, Shell Scripting
     Team Size: 2

     This project aims to remove the dependency of one database on another. Previously, we refreshed the target database through materialized views, but as the data grew, the materialized view refreshes began taking a long time. The source database is refreshed every 15 minutes from flat files, so we removed the dependency by treating those flat files directly as the source.

     Responsibilities:
     - Worked as ETL Developer.
     - Created mappings using different sources with Expression, Aggregator, Sequence Generator, Lookup, Joiner, Filter, Rank, Router, Sorter, Union, and Update Strategy transformations.
     - Wrote a generic PL/SQL procedure that handles logical deletion for 176 tables.
     - Created a mapping to automate creation of the parameter file.
     - Created mappings for Slowly Changing Dimension tables.
     - Captured all failure scenarios and provided solutions for them.
     - Wrote a shell script to handle the file structure.
     - Scheduled the whole process according to user needs.
     - Coordinated with the QA team in system testing.
     - Provided UAT and production support.

- 3. Education:

     | Degree | College/School | Passing Year | Percentage | University/Board |
     | --- | --- | --- | --- | --- |
     | Bachelor of Technology (ECE) | MMEC, Mullana | 2008-2012 | 75.8% | Maharishi Markandeshwar University, Mullana, Haryana |
     | XII | S.A Jain Senior Model, Ambala City | 2007-2008 | 83% | C.B.S.E Board |
     | X | S.A Jain Senior Model, Ambala City | 2005-2006 | 83.4% | C.B.S.E Board |

     I took the eLitmus test and secured a pH Test percentile of 93.34.

     Extra-Curricular Activities:
     - Outstanding contribution in organizing and participating in college events such as quizzes, sports events, and dance.
     - Participated in an inter-college cricket tournament.
     - Participated in a Railway exhibition as team leader.
     - Won many quizzes at school and college level.

     Personal Details:
     - Father’s Name: Mr. Rakesh Mohan
     - Marital Status: Single
     - Languages Known: English and Hindi
     - Current Location: Bangalore
     - Date of Birth: June 25, 1991