[Korean] Multiagent Bidirectionally-Coordinated Nets for Learning to Play StarCraft Combat Games


The document summarizes a presentation on a paper about using multiagent bidirectional-coordinated networks (BiCNet) to develop AI agents that can learn to play combat games in StarCraft. The paper introduces BiCNet, which uses bidirectional RNNs to allow agents to communicate and coordinate their actions. Experiments show BiCNet agents outperform independent and other cooperative agents in different combat scenarios in StarCraft, developing strategies like focus firing and coordinated attacks. Visualizations of agent coordination and additional areas for investigation are also discussed.

Denunciar

Compartir

Denunciar

Compartir

Descargar para leer sin conexión

Recomendados

Recomendados

Más contenido relacionado

La actualidad más candente

La actualidad más candente (20)

SSII2021 [SS2] Deepfake Generation and Detection – An Overview (ディープフェイクの生成と検出)![SSII2021 [SS2] Deepfake Generation and Detection – An Overview (ディープフェイクの生成と検出)](data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7)

![SSII2021 [SS2] Deepfake Generation and Detection – An Overview (ディープフェイクの生成と検出)](data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7)

SSII2021 [SS2] Deepfake Generation and Detection – An Overview (ディープフェイクの生成と検出)

Automate your Job and Business with ChatGPT #3 - Fundamentals of LLM/GPT

Automate your Job and Business with ChatGPT #3 - Fundamentals of LLM/GPT

Siyaset Bilimi ve Diğer Bilim Dallarıyla İlişkileri

Siyaset Bilimi ve Diğer Bilim Dallarıyla İlişkileri

マジシャンズデッド ポストモーテム ~マテリアル編~ (株式会社Byking: 鈴木孝司様、成相真治様) #UE4DD

マジシャンズデッド ポストモーテム ~マテリアル編~ (株式会社Byking: 鈴木孝司様、成相真治様) #UE4DD

Destacado

Destacado (20)

[한국어] Neural Architecture Search with Reinforcement Learning![[한국어] Neural Architecture Search with Reinforcement Learning](data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7)

![[한국어] Neural Architecture Search with Reinforcement Learning](data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7)

[한국어] Neural Architecture Search with Reinforcement Learning

[BIZ+005 스타트업 투자/법률 기초편] 첫 투자를 위한 스타트업 기초상식 | 비즈업 조가연님 ![[BIZ+005 스타트업 투자/법률 기초편] 첫 투자를 위한 스타트업 기초상식 | 비즈업 조가연님](data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7)

![[BIZ+005 스타트업 투자/법률 기초편] 첫 투자를 위한 스타트업 기초상식 | 비즈업 조가연님](data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7)

[BIZ+005 스타트업 투자/법률 기초편] 첫 투자를 위한 스타트업 기초상식 | 비즈업 조가연님

Machine Learning Foundations (a case study approach) 강의 정리

Machine Learning Foundations (a case study approach) 강의 정리

Similar a [한국어] Multiagent Bidirectional- Coordinated Nets for Learning to Play StarCraft Combat Games

Similar a [한국어] Multiagent Bidirectional- Coordinated Nets for Learning to Play StarCraft Combat Games (20)

BOOSTING ADVERSARIAL ATTACKS WITH MOMENTUM - Tianyu Pang and Chao Du, THU - D...

BOOSTING ADVERSARIAL ATTACKS WITH MOMENTUM - Tianyu Pang and Chao Du, THU - D...

Improving the Performance of MCTS-Based μRTS Agents Through Move Pruning

Improving the Performance of MCTS-Based μRTS Agents Through Move Pruning

QMIX: monotonic value function factorization paper review

QMIX: monotonic value function factorization paper review

MLSEV. Logistic Regression, Deepnets, and Time Series

MLSEV. Logistic Regression, Deepnets, and Time Series

Create a Scalable and Destructible World in HITMAN 2*

Create a Scalable and Destructible World in HITMAN 2*

DutchMLSchool. Logistic Regression, Deepnets, Time Series

DutchMLSchool. Logistic Regression, Deepnets, Time Series

Pixelor presentation slides for SIGGRAPH Asia 2020

Pixelor presentation slides for SIGGRAPH Asia 2020

Último

Último (20)

Top profile Call Girls In Vadodara [ 7014168258 ] Call Me For Genuine Models ...![Top profile Call Girls In Vadodara [ 7014168258 ] Call Me For Genuine Models ...](data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7)

![Top profile Call Girls In Vadodara [ 7014168258 ] Call Me For Genuine Models ...](data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7)

Top profile Call Girls In Vadodara [ 7014168258 ] Call Me For Genuine Models ...

Fun all Day Call Girls in Jaipur 9332606886 High Profile Call Girls You Ca...

Fun all Day Call Girls in Jaipur 9332606886 High Profile Call Girls You Ca...

Gulbai Tekra * Cheap Call Girls In Ahmedabad Phone No 8005736733 Elite Escort...

Gulbai Tekra * Cheap Call Girls In Ahmedabad Phone No 8005736733 Elite Escort...

Charbagh + Female Escorts Service in Lucknow | Starting ₹,5K To @25k with A/C...

Charbagh + Female Escorts Service in Lucknow | Starting ₹,5K To @25k with A/C...

+97470301568>>weed for sale in qatar ,weed for sale in dubai,weed for sale in...

+97470301568>>weed for sale in qatar ,weed for sale in dubai,weed for sale in...

Vadodara 💋 Call Girl 7737669865 Call Girls in Vadodara Escort service book now

Vadodara 💋 Call Girl 7737669865 Call Girls in Vadodara Escort service book now

Top profile Call Girls In dimapur [ 7014168258 ] Call Me For Genuine Models W...![Top profile Call Girls In dimapur [ 7014168258 ] Call Me For Genuine Models W...](data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7)

![Top profile Call Girls In dimapur [ 7014168258 ] Call Me For Genuine Models W...](data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7)

Top profile Call Girls In dimapur [ 7014168258 ] Call Me For Genuine Models W...

TrafficWave Generator Will Instantly drive targeted and engaging traffic back...

TrafficWave Generator Will Instantly drive targeted and engaging traffic back...

Digital Advertising Lecture for Advanced Digital & Social Media Strategy at U...

Digital Advertising Lecture for Advanced Digital & Social Media Strategy at U...

SAC 25 Final National, Regional & Local Angel Group Investing Insights 2024 0...

SAC 25 Final National, Regional & Local Angel Group Investing Insights 2024 0...

Sonagachi * best call girls in Kolkata | ₹,9500 Pay Cash 8005736733 Free Home...

Sonagachi * best call girls in Kolkata | ₹,9500 Pay Cash 8005736733 Free Home...

Top profile Call Girls In Indore [ 7014168258 ] Call Me For Genuine Models We...![Top profile Call Girls In Indore [ 7014168258 ] Call Me For Genuine Models We...](data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7)

![Top profile Call Girls In Indore [ 7014168258 ] Call Me For Genuine Models We...](data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7)

Top profile Call Girls In Indore [ 7014168258 ] Call Me For Genuine Models We...

DATA SUMMIT 24 Building Real-Time Pipelines With FLaNK

DATA SUMMIT 24 Building Real-Time Pipelines With FLaNK

Statistics notes ,it includes mean to index numbers

Statistics notes ,it includes mean to index numbers

[한국어] Multiagent Bidirectional- Coordinated Nets for Learning to Play StarCraft Combat Games

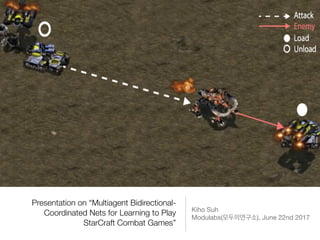

- 1. Presentation on “Multiagent Bidirectionally-Coordinated Nets for Learning to Play StarCraft Combat Games” Kiho Suh, Modulabs, June 22nd 2017

- 2. About the Paper • Published on March 29th 2017 (v1) • Updated on June 20th 2017 (v3) • Alibaba, University College London • https://arxiv.org/pdf/1703.10069.pdf

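- 3. Motivation • Single-agent AI has achieved strong results (Atari, Baduk, Texas Hold’em). • Is that enough for Artificial General Intelligence? • Real-world applications need multiple AI agents that collaborate. • The real-time strategy (RTS) game “StarCraft” is used as the test scenario for learning to play. • Parameter-space joint learning approach.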

- 4. Why multiple agents?

- 5. How? • Agents need to communicate. • A scalable yet effective communication protocol is needed. • Proposal: a multiagent bidirectionally-coordinated network (BiCNet) with a vectorized extension of the actor-critic formulation

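- 6. What does BiCNet offer? • BiCNet handles an arbitrary number of agents. • It follows an evaluation-decision-making process. • Parameter sharing enables dynamic grouping. • It can control heterogeneous AI agents. • Without labeled data, BiCNet agents learn coordination strategies on their own.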

- 8. Related works • Jakob Foerster, Yannis M Assael, Nando de Freitas, and Shimon Whiteson. Learning to communicate with deep multi-agent reinforcement learning. NIPS 2016. • Sainbayar Sukhbaatar, Rob Fergus, et al. Learning multiagent communication with backpropagation. NIPS 2016.

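- 9. Differentiable Inter-Agent Learning (Jakob Foerster et al. 2016) • Each agent's Q-network (an RNN) produces a message that is transferred to the other agents at the next time step. • Across time steps, messages (and, during training, gradients) are transferred between agents. • Each agent selects its action from its own observation and the messages it receives from the other agents.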

- 10. Differentiable Inter-Agent Learning & Reinforced Inter-Agent Learning (Jakob Foerster et al. 2016) • Non-stationary environments: as the other agents learn, each agent's environment keeps changing. • Contrast with the StarCraft real-time strategy (RTS) setting.

- 11. CommNet (Sainbayar Sukhbaatar et al. 2016) • Learns communication among multiple agents with backpropagation. • Passes the averaged message over the agent modules between layers. • Fully symmetric, so it lacks the ability to handle heterogeneous agent types.
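Below is a minimal sketch of one CommNet-style communication layer, based on our reading of Sukhbaatar et al. (2016). Each agent receives the average of the other agents' hidden states, and every agent uses the same weights. To keep it small, the hidden states are scalars, and the weights `w_h` and `w_c` are made-up values. The function name is also ours.

```python
import math

def commnet_step(hidden, w_h=0.5, w_c=0.3):
    """One CommNet-style communication layer on scalar hidden states.

    Agent j receives c_j = mean of the OTHER agents' hidden states and updates
    h_j' = tanh(w_h * h_j + w_c * c_j). The same weights are shared by all agents.
    """
    n = len(hidden)
    total = sum(hidden)
    new_hidden = []
    for h in hidden:
        # averaged message from the other n - 1 agents (none if alone)
        c = (total - h) / (n - 1) if n > 1 else 0.0
        new_hidden.append(math.tanh(w_h * h + w_c * c))
    return new_hidden

print(commnet_step([1.0, 0.0, -1.0]))
```

All agents share the same weights and the same averaging. As a result, permuting the agents only permutes the outputs. This is the full symmetry that, according to the slide, prevents CommNet from treating heterogeneous agent types differently.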

- 12. BiCNet

- 13. Stochastic Game of N agents and M opponents • S: the agents' state space • A_i: action space of controlled agent i, i ∈ [1, N] • B_j: action space of enemy j, j ∈ [1, M] • T: S × A^N × B^M → S, the deterministic transition function of the environment • R_i: S × A^N × B^M → ℝ, the reward function of agent/enemy i, i ∈ [1, N+M] * All controlled agents share the same action space A, and all enemies share the same action space B.

- 14. Global Reward • A continuous action space is used to reduce the redundancy of modeling the large discrete action space. • Instead of shaping a separate reward for each agent, a global reward is used: all agents share the same reward.

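- 15. Definition of Reward Function • Eq. (1) defines the global reward of the controlled agents. The enemies' global reward is its negative. • So the controlled agents' and the enemies' rewards sum to 0: a zero-sum game! • The reward is computed from the reduced health levels of the controlled agents and of the enemies (reduced health level for agent j).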
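Below is a small sketch of Eq. (1) as we read it from the paper. The controlled side's reward is the average health lost by the M enemies in the current step, minus the average health lost by its own N agents. The function and argument names are ours.

```python
def global_reward(own_health_drop, enemy_health_drop):
    """Global reward of one side, following our reading of Eq. (1).

    own_health_drop[i]:   health lost by controlled agent i in this step (N values)
    enemy_health_drop[j]: health lost by enemy j in this step (M values)
    """
    enemy_term = sum(enemy_health_drop) / len(enemy_health_drop)
    own_term = sum(own_health_drop) / len(own_health_drop)
    return enemy_term - own_term

r_ours = global_reward([0.0, 5.0, 10.0], [20.0, 0.0])    # → 5.0
r_enemy = global_reward([20.0, 0.0], [0.0, 5.0, 10.0])   # → -5.0
print(r_ours + r_enemy)  # zero-sum: 0.0
```

Swapping the two arguments gives the enemies' view of the same step. Their reward is always the negative of ours, which is what makes the game zero-sum.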

- 16. Minimax Game • The controlled agents seek a policy that maximizes the expected sum of discounted rewards. • The enemies' joint policy minimizes the same expected sum. • Together these define the optimal action-state value function.
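Here is a toy backup that shows the max-min structure. It assumes small finite action sets and a known table of next-state values. As we understand Eq. (2), the paper works with continuous actions and parameterized policies, maximizing over θ and minimizing over φ. So this only illustrates the order of the max and min operators. The name `minimax_backup` is ours.

```python
def minimax_backup(reward, q_next, gamma):
    """One minimax Bellman backup with finite action sets.

    q_next[a][b] holds Q*(s', a, b) for our joint action a and the enemies'
    joint action b. Controlled agents maximize, enemies minimize:
        r + gamma * max_a min_b Q*(s', a, b)
    """
    best = max(min(row) for row in q_next)
    return reward + gamma * best

q_next = [[3.0, -1.0],   # our action 0 vs. enemy actions 0 and 1
          [1.0,  2.0]]   # our action 1
print(minimax_backup(1.0, q_next, gamma=0.5))  # → 1.5
```

A purely optimistic agent would pick action 0 because of its 3.0 entry. Once the enemy's best response is taken into account, action 1 is safer (worst case 1.0 instead of -1.0).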

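- 17. Sampled historical state-action pairs (s, b) of the enemies • Applying minimax Q-learning directly is hard, because the Q-function in Eq. (2) must be modelled over both sides' actions. • Instead, fictitious play is used to estimate the enemies' policy bφ. - In fictitious play, each AI agent plays against its opponents' observed behavior. The controlled agents treat the enemies as players with a fixed, learned policy when solving for the Q-function of Eq. (2). - Concretely, a deterministic policy bφ is learned by supervised learning. • The policy network is trained on sampled historical state-action pairs (s, b).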
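The supervised step can be sketched with the simplest possible model: a deterministic linear enemy policy b = φ0 + φ1·s, fitted by least squares on sampled historical (s, b) pairs. Scalar states and actions, the linear form, and the name `fit_enemy_policy` are all assumptions for illustration. The slide only says that a policy network is trained on these pairs.

```python
def fit_enemy_policy(pairs):
    """Fit a deterministic enemy policy b = phi0 + phi1 * s by least squares.

    pairs: sampled historical (state, enemy_action) pairs, scalars in this toy.
    Returns the fitted policy b_phi as a callable.
    """
    n = len(pairs)
    mean_s = sum(s for s, _ in pairs) / n
    mean_b = sum(b for _, b in pairs) / n
    var_s = sum((s - mean_s) ** 2 for s, _ in pairs)
    cov_sb = sum((s - mean_s) * (b - mean_b) for s, b in pairs)
    phi1 = cov_sb / var_s if var_s > 0 else 0.0
    phi0 = mean_b - phi1 * mean_s
    return lambda s: phi0 + phi1 * s

b_phi = fit_enemy_policy([(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)])
print(b_phi(3.0))  # → 7.0
```

Once b_phi is fitted, it is held fixed while the controlled agents train. That is the step that turns the game into the simpler MDP.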

- 18. Simpler MDP problem • Once the enemies' policy is fixed, the stochastic game in Eq. (2) reduces to an MDP.

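- 19. Limitation of Eq. (1) • Eq. (1) is a global reward for a zero-sum game. It has no local collaboration reward function, so team collaboration is hard to capture. • Collaboration between individual agents needs to be rewarded. • Eq. (1) is therefore extended so that each agent receives a local reward.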

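- 20. Extension of formulation of Eq. (1) • For agent i, only the units in top-K(i) are considered. • The reward is computed over these K units. • Each agent has its own top-K set. • Eq. (1) is extended accordingly into a per-agent local reward.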
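The sketch below rests on two assumptions of ours. First, top-K(i) is taken to be the K units nearest to agent i, selected separately among allies and among enemies. Second, the local reward keeps the form of Eq. (1), restricted to those units. The default K = 2 and the name `local_reward` are arbitrary.

```python
import math

def local_reward(i, own_pos, enemy_pos, own_drop, enemy_drop, k=2):
    """Local reward of agent i over its top-K units (assumed: K nearest by distance).

    own_pos / enemy_pos: (x, y) positions; own_drop / enemy_drop: health lost this step.
    """
    def nearest(positions):
        # indices of the k units closest to agent i
        order = sorted(range(len(positions)),
                       key=lambda j: math.dist(own_pos[i], positions[j]))
        return order[:k]

    near_enemies = nearest(enemy_pos)
    near_allies = nearest(own_pos)  # includes agent i itself (distance 0)
    enemy_term = sum(enemy_drop[j] for j in near_enemies) / len(near_enemies)
    own_term = sum(own_drop[j] for j in near_allies) / len(near_allies)
    return enemy_term - own_term

own_pos = [(0, 0), (1, 0), (10, 0)]
enemy_pos = [(0, 1), (0, 2), (20, 0)]
print(local_reward(0, own_pos, enemy_pos, [1.0, 3.0, 100.0], [4.0, 6.0, 50.0]))  # → 3.0
```

In this example, the far-away ally and enemy (with health drops of 100 and 50) do not affect agent 0's reward. With the global Eq. (1), they would dominate it.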

- 21. Bellman equation for agent i • One equation per agent, N in total, i ∈ {1, ..., N}
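With one Bellman equation per agent, each agent gets its own target value. This is a minimal sketch that assumes the critic has already computed the next-state values Q_i(s', a_θ(s')). The `done` flag for terminal steps and the name `td_targets` are our additions.

```python
def td_targets(rewards, q_next, gamma=0.99, done=False):
    """Per-agent targets y_i = r_i + gamma * Q_i(s', a_theta(s')) for i = 1..N.

    rewards[i]: r_i for this step; q_next[i]: critic output for agent i at s'.
    On a terminal step (done=True) there is no bootstrap term.
    """
    if done:
        return list(rewards)
    return [r + gamma * q for r, q in zip(rewards, q_next)]

print(td_targets([1.0, 0.0], [2.0, 4.0], gamma=0.5))  # → [2.0, 2.0]
```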

- 22. Objective as an expectation • Because the action space is continuous, model-free policy iteration is used. • The policy is updated with the gradient of Q_i, using a vectorized version of the deterministic policy gradient (DPG).

- 23. Final Equation (Actor) • The gradients of all agents' rewards (Q-values) with respect to each agent's action are backpropagated, and from there further backpropagated to the actor's parameters.
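The vectorized deterministic policy gradient sums a chain-rule term ∂a_j/∂θ · ∂Q_i/∂a_j over every agent's critic output Q_i and every agent's action a_j. The toy below makes two assumptions, and all names in it are hypothetical:

- a scalar actor a_j = θ·s_j, shared by all agents;
- a critic Q_i(a) = -(Σ_j a_j - T_i)², where T_i is a per-agent target.

It then checks the analytic gradient against a central finite difference.

```python
def actor_gradient(theta, obs, dq_da):
    """Vectorized DPG for a shared scalar parameter theta (toy version).

    Assumed actor: a_j = theta * obs[j], so d a_j / d theta = obs[j].
    dq_da(actions) returns g with g[i][j] = dQ_i / d a_j (N x N).
    Returns sum_i sum_j (d a_j / d theta) * (dQ_i / d a_j).
    """
    actions = [theta * o for o in obs]
    g = dq_da(actions)
    n = len(obs)
    return sum(obs[j] * g[i][j] for i in range(n) for j in range(n))

def make_team_critic(targets):
    """Assumed critic: Q_i(a) = -(sum_j a_j - targets[i])**2, one per agent."""
    def q_values(actions):
        total = sum(actions)
        return [-(total - t) ** 2 for t in targets]

    def dq_da(actions):
        total = sum(actions)
        # dQ_i/da_j is the same for every j because Q_i depends on the sum
        return [[-2.0 * (total - t)] * len(actions) for t in targets]

    return q_values, dq_da

q_values, dq_da = make_team_critic([3.0, 5.0])
obs = [1.0, 2.0]
grad = actor_gradient(0.5, obs, dq_da)

# finite-difference check on J(theta) = sum_i Q_i(a_theta(s))
J = lambda th: sum(q_values([th * o for o in obs]))
eps = 1e-6
numeric = (J(0.5 + eps) - J(0.5 - eps)) / (2 * eps)
print(grad, numeric)  # grad is 30.0; numeric should be very close to it
```

Note that every Q_i contributes to the update of every action a_j. This cross-agent term is what the slide means by backpropagating all agents' gradients through each agent's action.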

- 24. Final Equation (Critic) • Off-policy deterministic actor-critic • Critic: learns the action-value function from off-policy samples
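The critic side can be sketched as a squared TD error, summed over agents and averaged over a batch of off-policy transitions. The batch layout, the lagged target critic `q_target` (a DDPG-style device), and all function names are assumptions of this sketch. They are not taken from the slides.

```python
def critic_loss(batch, q, q_target, policy, gamma=0.99):
    """Mean over the batch of sum_i (r_i + gamma * Q'_i(s', a_theta(s')) - Q_i(s, a))**2.

    batch: (s, a, rewards, s_next) tuples sampled off-policy, e.g. from a replay buffer.
    q(s, a) and q_target(s, a) return a list of N per-agent values.
    policy(s) returns the current actor's joint action a_theta(s).
    """
    total = 0.0
    for s, a, rewards, s_next in batch:
        q_now = q(s, a)
        q_next = q_target(s_next, policy(s_next))
        total += sum((r + gamma * qn - qc) ** 2
                     for r, qn, qc in zip(rewards, q_next, q_now))
    return total / len(batch)

# toy critic with two agents and a trivial actor (both hypothetical)
q = lambda s, a: [s + a, s - a]
policy = lambda s: 0.0
print(critic_loss([(1.0, 0.5, [1.0, 0.0], 2.0)], q, q, policy, gamma=0.5))  # → 0.5
```

The stored action `a` may come from an older or noisier policy, while the bootstrap term uses the current actor. That split is what makes the update off-policy.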

- 25. Actor-Critic networks • SGD can be used directly to compute the updates for both the actor and critic networks • The gradients are computed with backprop.

- 27. BiCNet • Actor and critic networks built on a bidirectional RNN

- 28. Design of the two networks • Parameters are shared across agents, so the number of parameters does not depend on the number of agents. • As a result, the number of agents can differ between training and testing. • The bidirectional RNN serves as the communication channel between agents. • Full dependency among agents, because the gradients from all the actions in Eq. (9) are efficiently propagated through the entire network • Not fully symmetric: fixing the order in which agents join the RNN maintains certain social conventions and roles, and resolves any possible tie between multiple optimal joint actions
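Here is a scalar toy of a bidirectional RNN that runs across the agent dimension rather than over time, in a fixed agent order. For brevity, both directions share the same weights, whose values are made up. A standard bidirectional RNN usually learns separate weights per direction. The function name is ours.

```python
import math

def birnn_over_agents(inputs, w_in=1.0, w_rec=0.5):
    """Bidirectional RNN over the agent sequence (scalar toy version).

    inputs[k] is agent k's local encoding; agents are processed in a fixed order.
    Returns (forward_state, backward_state) per agent: agent k sees agents 0..k
    through the forward pass and agents k..N-1 through the backward pass.
    """
    n = len(inputs)
    fwd, bwd = [0.0] * n, [0.0] * n
    h = 0.0
    for k in range(n):                 # forward: agent 0 -> agent N-1
        h = math.tanh(w_in * inputs[k] + w_rec * h)
        fwd[k] = h
    h = 0.0
    for k in reversed(range(n)):       # backward: agent N-1 -> agent 0
        h = math.tanh(w_in * inputs[k] + w_rec * h)
        bwd[k] = h
    return list(zip(fwd, bwd))

a = birnn_over_agents([1.0, 0.0, 0.0])
b = birnn_over_agents([1.0, 0.0, 1.0])
print(a[0], b[0])  # agent 0's backward state changes when the last agent changes
```

Because the weights are shared across positions, the same network handles any number of agents. Each agent's output depends on every other agent. The fixed order breaks symmetry: an agent's output depends on where it sits in the sequence, not only on its own input.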

- 29. Experiments • BiCNet agents fight against StarCraft's built-in AI.

- 30. Experiments • Easy combats - {3 Marines vs. 1 Super Zergling} - {3 Wraiths vs. 3 Mutalisks} • Difficult combats - {5 Marines vs. 5 Marines} - {15 Marines vs. 16 Marines} - {20 Marines vs. 30 Zerglings} - {10 Marines vs. 13 Zerglings} - {15 Wraiths vs. 17 Wraiths} • Heterogeneous combats - {2 Dropships and 2 Tanks vs. 1 Ultralisk} • Unit images (Marine, Zergling, Wraith, Mutalisk, Dropship, Ultralisk, Siege Tank): all images are from http://starcraft.wikia.com/wiki/

- 31. Baselines • Independent controller (IND): each agent is controlled independently, without communication. • Fully-connected (FC): all agents are fully connected. • CommNet: a multiagent communication network • GreedyMDP with Episodic Zero-Order Optimization (GMEZO): conducts collaboration through a greedy update over MDP agents, and adds episodic noise in the parameter space for exploration

- 32. Action space for each individual agent • 3-dimensional real vector • 1st dimension: ranges from -1 to 1 - If greater than or equal to 0, the agent attacks - Otherwise, the agent moves • 2nd and 3rd dimensions: degree and distance, which together indicate the destination the agent should move to, or attack, relative to its current location
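Below is a sketch of how such a 3-D action might be decoded into a game command. The sign rule on the first dimension comes from the slide. The scaling is our assumption: the second dimension maps to [-180, 180] degrees and the third to [0, `max_distance`]. The paper's exact scaling may differ. `decode_action` and `max_distance` are hypothetical names.

```python
import math

def decode_action(action, x, y, max_distance=10.0):
    """Decode a 3-D continuous action into (command, target point).

    action[0] in [-1, 1]: >= 0 means attack, otherwise move (as on the slide).
    action[1], action[2]: degree and distance relative to the agent at (x, y).
    Assumed scaling: degree = action[1] * 180, distance in [0, max_distance].
    """
    command = "attack" if action[0] >= 0 else "move"
    degree = action[1] * 180.0
    distance = (action[2] + 1.0) / 2.0 * max_distance
    rad = math.radians(degree)
    return command, (x + distance * math.cos(rad), y + distance * math.sin(rad))

print(decode_action([0.2, 0.0, 1.0], 0.0, 0.0))  # → ('attack', (10.0, 0.0))
```

Keeping every agent's action a fixed-size real vector lets the actor emit continuous outputs. A large discrete action space would be redundant to model, as noted in the Global Reward slide.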

- 33. Training • Nadam optimizer • learning rate = 0.002 • 800 episodes (more than 700k steps)

- 34. Simple Experiment • Tested on 100 independent games • skip frame: how many frames to skip between the agents' control actions • The combination of batch_size = 32 (highest mean Q-value after 600k training steps) and skip_frame = 2 (highest mean Q-value between 300k and 600k steps) has the highest winning rate.

- 35. Simple Experiment • Letting 4~6 agents work together as a group can efficiently control individual agents while maximizing damage output. • Fig. 3: a group size of 4~5 helps achieve the best performance. • Fig. 4: convergence speed, shown by plotting the winning rate against the number of training episodes.

- 36. Performance Comparison • BiCNet is trained over 100k steps • Performance is measured as the average winning rate on 100 test games • When the number of agents goes beyond 10, the performance margin between BiCNet and the second-best method starts to increase

- 37. Performance Comparison • In “5M vs. 5M”, the key factor to winning is to “focus fire” on the weak. • Because BiCNet has a built-in design for dynamic grouping, a small number of agents (such as “5M vs. 5M”) does not suffice to show its advantages in large-scale collaborations. • For “5M vs. 5M”, BiCNet needs only 10 combats to learn the idea of “focus fire” and reach an 85% win rate, whereas CommNet needs at least 50 episodes and reaches a much lower winning rate

- 38. Visualization • “3 Marines vs. 1 Super Zergling”, after the coordinated cover attack has been learned • Values of the last hidden layer of the well-trained critic network, collected over 10k steps • Embedded with t-SNE

- 39. Strategies Tested in the Experiments • Move without collision • Hit and run • Cover attack • Focus fire without overkill • Collaboration between heterogeneous agents

- 41. Coordinated moves without collision (3 Marines (ours) vs. 1 Super Zergling) • Early in training, the agents move in a rather uncoordinated way, particularly when two agents are close to each other, i.e. one agent may unintentionally block the other's path. • After 40k steps in around 50 episodes, the number of collisions drops dramatically.

- 42. Winning rate against difficult settings

- 43. Hit and Run tactics (3 Marines (ours) vs. 1 Zealot) • Move agents away when under attack, and fight back when they feel safe again.

- 44. Coordinated Cover Attack (4 Dragoons (ours) vs. 2 Ultralisks) • Let one agent draw fire or attention from the enemies. • Meanwhile, the other agents take advantage of the time or distance gap to cause more damage.

- 45. Coordinated Cover Attack (3 Marines (ours) vs. 1 Zergling)

- 46. Focus fire without overkill (15 Marines (ours) vs. 16 Marines) • Allocating attacking resources efficiently becomes important. • The grouping design in the policy network is the key factor that lets BiCNet learn “focus fire without overkill.” • Even as the number of our units decreases, groups can be dynamically reassigned so that 3~5 units focus their attacks on the same enemy.
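BiCNet learns this behavior rather than following a rule. The underlying allocation problem can still be illustrated with a hand-written greedy heuristic, which is not the paper's method. It attacks the weakest enemies first and assigns only as many attackers as are needed to cover each target's remaining HP. It ignores armor, weapon cooldowns, and travel time. The function name and the numbers in the example are illustrative.

```python
import math

def allocate_focus_fire(attacker_count, damage_per_attack, enemy_hp):
    """Greedy focus-fire allocation without overkill (hand-written heuristic).

    Targets the weakest enemies first and assigns just enough attackers to each
    one that their combined damage covers its remaining HP.
    Returns {enemy_index: number_of_attackers}.
    """
    allocation = {}
    remaining = attacker_count
    for j in sorted(range(len(enemy_hp)), key=lambda j: enemy_hp[j]):
        if remaining == 0:
            break
        needed = math.ceil(enemy_hp[j] / damage_per_attack)  # no wasted shots
        assigned = min(needed, remaining)
        allocation[j] = assigned
        remaining -= assigned
    return allocation

print(allocate_focus_fire(10, 6, [40, 12, 18]))  # → {1: 2, 2: 3, 0: 5}
```

Recomputing this allocation every step, as units die and HP changes, mirrors the slide's point that groups are reassigned dynamically as the unit count drops.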

- 47. Collaborations between heterogeneous agents (2 Dropships and 2 Tanks vs. 1 Ultralisk) • StarCraft has a wide variety of unit types • Heterogeneous agents can easily be handled in BiCNet

- 48. Open Questions for Further Investigation • Strong correlation between the specified reward and the learned policies • How the policies are communicated over the networks among agents • Whether a specific language may have emerged • Nash equilibrium when both sides are played by deep multiagent models
