Year 1 AI.ppt

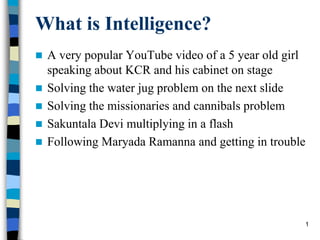

- 1. What is Intelligence? Some examples: a very popular YouTube video of a 5-year-old girl speaking about KCR and his cabinet on stage; solving the water jug problem on the next slide; solving the missionaries and cannibals problem; Sakuntala Devi multiplying in a flash; following Maryada Ramanna and getting into trouble.

- 2. Example: Measuring problem! Problem: Using three buckets with capacities of 3 l, 5 l and 9 l, measure exactly 7 liters of water.

- 3. Missionaries and Cannibals: Initial State and Actions. Initial state: all missionaries, all cannibals, and the boat are on the left bank. Goal state: all missionaries and all cannibals are on the right bank. Conditions: the boat can carry at most 2 people; missionaries are in danger if cannibals outnumber them on either bank.

- 4. Rabbits crossing a stream: three east-bound rabbits and three west-bound rabbits are crossing a stream on stones.

- 5. What is artificial intelligence? There is no clear consensus on the definition of AI. Here is one from John McCarthy, who coined the phrase AI in 1956 (see http://www-formal.Stanford.edu/jmc/whatisai.html):
  - Q. What is artificial intelligence? A. It is the science and engineering of making intelligent machines, especially intelligent computer programs. It is related to the similar task of using computers to understand human intelligence, but AI does not have to confine itself to methods that are biologically observable.
  - Q. Yes, but what is intelligence? A. Intelligence is the computational part of the ability to achieve goals in the world. Varying kinds and degrees of intelligence occur in people, many animals and some machines.

- 6. Other possible AI definitions. AI is a collection of hard problems that can be solved by humans and other living things, but for which we don’t have good algorithms, e.g., understanding spoken natural language, medical diagnosis, learning, self-adaptation, reasoning, chess playing, proving mathematical theorems, etc. Definition from the Russell & Norvig (R & N) book: a program that acts like a human (Turing test), thinks like a human (human-like patterns of thinking steps), or acts or thinks rationally (logically, correctly). Hofstadter: AI is whatever hasn’t been done yet.

- 7. Other possible AI definitions. Rich & Knight: the study of how to make programs/computers do things that, at the moment, people do better; the study of how to make computers solve problems that require knowledge and intelligence. Luger & Stubblefield: AI may be defined as the branch of computer science that is concerned with the automation of intelligent behavior. Marvin Minsky: Artificial Intelligence is the science of making computers exhibit the kind of behaviour that conventionally requires human intelligence.

- 8. AI Application Areas: game playing; automated reasoning and theorem proving; expert systems; natural language understanding and semantic modeling; modeling human performance; planning and robotics; machine learning; neural nets and genetic algorithms; fraud detection.

- 9. Expert Systems (1): Expert systems are constructed by obtaining the knowledge of a human expert and coding it into a form that a computer may apply to similar problems. The domain expert provides the necessary knowledge of the problem domain. The knowledge engineer is responsible for implementing this knowledge in a program that is both effective and intelligent in its behavior.

- 10. Expert Systems (2): Many successful expert systems. DENDRAL was designed to infer the structure of organic molecules from their chemical formulas and mass spectrographic information about the chemical bonds present in the molecules. It used the heuristic knowledge of expert chemists to search the very large space of possible molecular structures. MYCIN used expert medical knowledge to diagnose and prescribe treatment for spinal meningitis and bacterial infections of the blood. It provided clear and logical explanations of its reasoning, used a control structure appropriate to the specific problem domain, and identified criteria to reliably evaluate its performance.

- 11. Expert Systems (3): Many successful expert systems (continued). PROSPECTOR: determining the probable location and type of ore deposits based on geological information. INTERNIST: performing diagnosis in the area of internal medicine. XCON: configuring VAX computers.

- 12. ELIZA (sample dialogue):
  - Person: Men are all alike.
  - ELIZA: In what way?
  - Person: They’re always bugging us about something or other.
  - ELIZA: Can you think of a specific example?
  - Person: Well, my husband made me come here.
  - ELIZA: Your husband made you come here.
  - Person: He says I’m depressed much of the time.
  - ELIZA: I am sorry to hear you are depressed.
  - Person: It’s true. I am unhappy.
  - ELIZA: Do you think coming here will help you not to be unhappy?
  - Person: I need some help, that much seems certain.
  - ELIZA: What would it mean to you if you got some help?
  - Person: Perhaps I could learn to get along with my husband.
  - ELIZA: Tell me more about your family.
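
ELIZA produced replies like these by spotting keywords and patterns in the input and filling in canned response templates. It had no model of what the words mean. Below is a minimal sketch of that idea. The rule list and the `respond` function are hypothetical illustrations, not Weizenbaum's original script.

```python
import re

# Hypothetical keyword rules in the spirit of ELIZA: (pattern, response template).
# Rules are tried in order; captured groups are copied into the template.
RULES = [
    (r"\ball alike\b", "In what way?"),
    (r"\balways\b", "Can you think of a specific example?"),
    (r"\bmy (\w+) made me ([^.!?]+)", "Your {0} made you {1}."),
    (r"\bi am (depressed|sad)\b", "I am sorry to hear you are {0}."),
    (r"\bi am (unhappy)\b", "Do you think coming here will help you not to be {0}?"),
    (r"\bi need ([^,.!?]+)", "What would it mean to you if you got {0}?"),
    (r"\b(husband|wife|mother|father)\b", "Tell me more about your family."),
]

def respond(sentence: str) -> str:
    """Return the response of the first matching rule (pure pattern matching, no meaning)."""
    text = sentence.lower().replace("i'm", "i am").replace("i’m", "i am")
    for pattern, template in RULES:
        match = re.search(pattern, text)
        if match:
            return template.format(*match.groups())
    return "Please go on."

for line in ["Men are all alike.", "Well, my husband made me come here."]:
    print(respond(line))
```

The reflected phrase "Your husband made you come here." is produced purely by swapping "my/me" for "your/you". This is the kind of shallow, syntax-only processing the Turing test discussion below refers to.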

- 13. Turing Test: Alan Turing (1912-1954) proposed a test, Turing’s Imitation Game, in his 1950 article “Computing Machinery and Intelligence”. In the Turing test, a computer and a woman are separated from an interrogator. The interrogator types questions to either party. By observing the responses, the interrogator’s goal is to identify which is the computer and which is the woman. If the interrogator fails, the computer is considered intelligent! [Figure: the interrogator communicates separately with an honest woman and with a computer.]

- 14. Turing Test: how good is it? It measures imitation, not intelligence. Does ELIZA pass this test? YES! Does Deep Blue pass this test? NO! Most AI programs are shallow: they recognize “syntax” but not “semantics”. Searle’s Chinese Room: a room with a slot, and a human with a huge rule book on how to translate Chinese to English. If someone drops a Chinese letter into the slot and the human translates it to English, does the human understand Chinese? Finally, the Turing test is not reproducible, not constructive, and not amenable to mathematical analysis.

- 15. Components of AI programs: Knowledge base (facts and rules). Control strategy (which rule to apply). Inference mechanism (how to derive new knowledge from the existing information; follows from the physical symbol system hypothesis).
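
A tiny forward-chaining production system shows the three components working together. The facts, the rules, and the "fire the first applicable rule" control strategy below are illustrative assumptions, not taken from the slides.

```python
# Knowledge base (hypothetical example): facts plus if-then rules.
FACTS = {"has_feathers", "cannot_fly", "swims"}
RULES = [
    ({"has_feathers"}, "bird"),
    ({"bird", "cannot_fly", "swims"}, "penguin"),
]

def forward_chain(facts, rules):
    """Inference mechanism: keep deriving new facts until no rule adds anything."""
    known = set(facts)
    fired = []
    while True:
        # Control strategy (assumed): fire the first rule whose conditions all hold
        # and whose conclusion is not yet known, then start scanning again.
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                fired.append(conclusion)
                break
        else:
            return known, fired  # no rule could fire

known, fired = forward_chain(FACTS, RULES)
print(fired)  # ['bird', 'penguin']
```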

- 16. AI as Representation and Search: In their Turing Award lecture, Newell and Simon argue that intelligent activity, in either humans or machines, is achieved through 1. symbol patterns that represent significant aspects of a problem domain, 2. operations on these patterns (combining and manipulating them) to generate potential solutions, and 3. search to select a solution from these possibilities. Physical Symbol System Hypothesis [NS’76]: a physical symbol system has the necessary and sufficient means for general intelligent action.

- 17. Physical Symbol System Hypothesis: The hypothesis outlines the major foci of AI research: 1. defining the symbol structures and operations necessary for intelligent problem solving, and 2. developing strategies to efficiently and correctly search the potential solutions generated by these structures and operations. These two interrelated issues of knowledge representation and search are at the heart of AI, and we study both in detail in this course.


- 19. State Space Search: A state space consists of 4 components: 1. a set S of start (or initial) states; 2. a set G of goal (or final) states; 3. a set of nodes representing all possible states; 4. a set of arcs connecting nodes, representing the possible actions in different states.

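- 20. Example: Measuring problem! One possible solution, with buckets a = 3 l, b = 5 l, c = 9 l and states written as (a, b, c): (0, 0, 0) initial state → (3, 0, 0) → (0, 0, 3) → (3, 0, 3) → (0, 0, 6) → (3, 0, 6) → (0, 3, 6) → (3, 3, 6) → (1, 5, 6) → (0, 5, 7) goal state.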

- 21. Example: Measuring problem! Another solution, with states written as (a, b, c) for a = 3 l, b = 5 l, c = 9 l: (0, 0, 0) → (0, 5, 0) → (3, 2, 0) → (3, 0, 2) → (3, 5, 2) → (3, 0, 7).

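- 22. Which solution do we prefer? Solution 1: (0, 0, 0) → (3, 0, 0) → (0, 0, 3) → (3, 0, 3) → (0, 0, 6) → (3, 0, 6) → (0, 3, 6) → (3, 3, 6) → (1, 5, 6) → (0, 5, 7), which takes 9 moves. Solution 2: (0, 0, 0) → (0, 5, 0) → (3, 2, 0) → (3, 0, 2) → (3, 5, 2) → (3, 0, 7), which takes 5 moves.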
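
Breadth-first search answers this question mechanically, because it always returns a solution with the fewest moves. The sketch below assumes the usual operators (fill a bucket, empty a bucket, or pour one bucket into another until the source is empty or the target is full). It treats any state that contains 7 liters as a goal.

```python
from collections import deque

CAPACITY = (3, 5, 9)  # liters held by buckets a, b and c

def successors(state):
    """Yield every state reachable with one operator: fill, empty, or pour."""
    for i in range(3):
        if state[i] < CAPACITY[i]:          # fill bucket i to the brim
            filled = list(state)
            filled[i] = CAPACITY[i]
            yield tuple(filled)
        if state[i] > 0:                    # empty bucket i
            emptied = list(state)
            emptied[i] = 0
            yield tuple(emptied)
        for j in range(3):                  # pour i into j until i is empty or j is full
            if i != j and state[i] > 0 and state[j] < CAPACITY[j]:
                amount = min(state[i], CAPACITY[j] - state[j])
                poured = list(state)
                poured[i] -= amount
                poured[j] += amount
                yield tuple(poured)

def bfs(start, is_goal):
    """Breadth-first search; returns the list of states on a shortest path."""
    parent = {start: None}
    frontier = deque([start])
    while frontier:
        state = frontier.popleft()
        if is_goal(state):
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = state
                frontier.append(nxt)
    return None

path = bfs((0, 0, 0), lambda s: 7 in s)
print(len(path) - 1, "moves:", path)
```

Under these assumptions, BFS reports a 4-move solution, which is shorter than both solutions above. One such solution is: fill c, pour c into b, fill a, pour a into c. It ends in (0, 5, 7).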

- 23. Missionaries and Cannibals: State Space. [Figure: state-space graph whose arcs are labelled with the boat load of each crossing, e.g. 1c, 2c, 1m, 2m, or 1m 1c.]
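
The state space can be searched with BFS. This minimal sketch assumes three missionaries, three cannibals, and a boat that must carry one or two people on every crossing. A state is (missionaries on the left bank, cannibals on the left bank, boat on the left bank?). The encoding and function names are our own.

```python
from collections import deque

TOTAL = 3
LOADS = [(1, 0), (2, 0), (0, 1), (0, 2), (1, 1)]  # (missionaries, cannibals) in the boat

def is_safe(m, c):
    """Missionaries are safe on a bank if there are none or they are not outnumbered."""
    left_ok = m == 0 or m >= c
    right_ok = (TOTAL - m) == 0 or (TOTAL - m) >= (TOTAL - c)
    return left_ok and right_ok

def successors(state):
    m, c, boat_left = state
    sign = -1 if boat_left else 1  # a boat leaving the left bank takes people from it
    for dm, dc in LOADS:
        nm, nc = m + sign * dm, c + sign * dc
        if 0 <= nm <= TOTAL and 0 <= nc <= TOTAL and is_safe(nm, nc):
            yield (nm, nc, not boat_left)

def bfs(start, goal):
    parent = {start: None}
    frontier = deque([start])
    while frontier:
        state = frontier.popleft()
        if state == goal:
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = state
                frontier.append(nxt)
    return None

path = bfs((TOTAL, TOTAL, True), (0, 0, False))
print(len(path) - 1, "crossings")
for state in path:
    print(state)
```

The search prints 11 crossings, the length of the classic shortest solution.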

- 24. Rabbits crossing a stream (E = east-bound rabbit, W = west-bound rabbit, _ = empty stone): EEE_WWW → EE_EWWW → EEWE_WW → EEWEW_W → EEW_WEW → E_WEWEW → _EWEWEW → WE_EWEW → WEWE_EW → WEWEWE_ → WEWEW_E → WEW_WEE → W_WEWEE → WW_EWEE → WWWE_EE → WWW_EEE. Video: https://www.youtube.com/watch?v=bwvBAt5LmMg&ab_channel=Anacron
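
The same BFS idea reproduces the rabbit sequence. This sketch uses assumed rules: east-bound rabbits (E) only move right and west-bound rabbits (W) only move left. A rabbit either steps onto the adjacent empty stone or jumps over exactly one rabbit of the other kind onto the empty stone.

```python
from collections import deque

START, GOAL = "EEE_WWW", "WWW_EEE"

def moves(state):
    """Yield the states reachable in one move under the assumed rules."""
    gap = state.index("_")
    movers = []
    if gap >= 1 and state[gap - 1] == "E":
        movers.append(gap - 1)                      # E steps right
    if gap >= 2 and state[gap - 2] == "E" and state[gap - 1] == "W":
        movers.append(gap - 2)                      # E jumps over a W
    if gap + 1 < len(state) and state[gap + 1] == "W":
        movers.append(gap + 1)                      # W steps left
    if gap + 2 < len(state) and state[gap + 2] == "W" and state[gap + 1] == "E":
        movers.append(gap + 2)                      # W jumps over an E
    for i in movers:
        stones = list(state)
        stones[gap], stones[i] = stones[i], "_"
        yield "".join(stones)

def bfs(start, goal):
    parent = {start: None}
    frontier = deque([start])
    while frontier:
        state = frontier.popleft()
        if state == goal:
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in moves(state):
            if nxt not in parent:
                parent[nxt] = state
                frontier.append(nxt)
    return None

path = bfs(START, GOAL)
print(len(path) - 1, "moves")
print(" -> ".join(path))
```

The search prints 15 moves, the same length as the sequence above. Each of the 9 east/west pairs must pass each other once by a jump, and 6 single steps cover the remaining distance.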

- 25. Problem solving by search: Represent the problem as STATES and OPERATORS that transform one state into another. A solution to the problem is an OPERATOR SEQUENCE that transforms the INITIAL STATE into a GOAL STATE. Finding the sequence requires SEARCHING the STATE SPACE by GENERATING the paths connecting the two.

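- 26. Problem solving by search: Run DFS and BFS on the following graph. [Figure: weighted graph with nodes S, A, B, C, D, E, F, G, where S is the start and G the goal; edge costs 3, 4, 4, 4, 5, 5, 4, 3, 2.]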
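
Here is a minimal sketch of both searches on this graph. The edge list is our reconstruction from the UCS example later in the deck: S-A, S-D, A-B, B-C, D-E, E-F and F-G, plus A-D and B-E, which we assume carry the two remaining costs of 5. Edge costs are ignored by BFS and DFS. Children are visited in alphabetical order, which is also an assumption.

```python
from collections import deque

# Edges of the exercise graph (our reconstruction; A-D and B-E are assumed).
EDGES = [("S", "A"), ("S", "D"), ("A", "B"), ("A", "D"), ("B", "C"),
         ("B", "E"), ("D", "E"), ("E", "F"), ("F", "G")]
GRAPH = {}
for u, v in EDGES:
    GRAPH.setdefault(u, set()).add(v)
    GRAPH.setdefault(v, set()).add(u)

def bfs(start, goal):
    """Breadth-first search; returns a path with the fewest edges."""
    parent = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nxt in sorted(GRAPH[node]):       # alphabetical order (assumption)
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    return None

def dfs(node, goal, visited=None):
    """Recursive depth-first search; returns the first path it finds."""
    visited = set() if visited is None else visited
    visited.add(node)
    if node == goal:
        return [node]
    for nxt in sorted(GRAPH[node]):
        if nxt not in visited:
            rest = dfs(nxt, goal, visited)
            if rest:
                return [node] + rest
    return None

print("BFS:", bfs("S", "G"))  # ['S', 'D', 'E', 'F', 'G']
print("DFS:", dfs("S", "G"))  # ['S', 'A', 'B', 'E', 'F', 'G']
```

BFS finds the path with the fewest edges. DFS returns the first path it stumbles on, which here is one edge longer.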


- 28. Problem solving by search: important questions. How much time? (time complexity) How much space? (space complexity) Will it always find a solution? (completeness) Will it always find the best solution? (optimality)

- 29. Time complexity of BFS: If a goal node is found at depth d of the tree, all nodes up to that depth are created. [Figure: search tree with branching factor b and the goal G at depth d.] Thus: $O(b^{d+1})$.

- 30. Space complexity of BFS: The largest number of nodes in the QUEUE is reached on level d of the goal node. [Figure: search tree with branching factor b and the goal G at depth d.] In general: $b^{d+1}$.

- 31. Time complexity of DFS: In the worst case, the goal node may be on the right-most branch. [Figure: search tree with branching factor b and the goal G at depth d on the right-most branch.] Time complexity: $O(b^{d+1})$.

- 32. Space complexity of DFS: The largest number of nodes in the QUEUE is reached at the bottom left-most node. Order: $O(b \cdot d)$.

- 33. Evaluation of Depth-first & Breadth-first:
  - Completeness (is it guaranteed that a solution will be found?): yes for BFS; no for DFS.
  - Optimality (is the best solution found when several solutions exist?): no for both BFS and DFS if edges have different lengths; yes for BFS and no for DFS if all edges have the same length.
  - Time complexity (how long does it take to find a solution?): in the worst case, both are exponential; in the average case, DFS is better than BFS.
  - Space complexity (how much memory is needed to perform the search?): exponential for BFS; linear for DFS.

- 34. Depth-first vs Breadth-first: Use depth-first when space is restricted, the branching factor is high, there are no solutions with short paths, and there are no infinite paths. Use breadth-first when there may be infinite paths, some solutions have short paths, and the branching factor is low.

- 35. Depth-First Iterative Deepening (DFID): BFS and DFS both have exponential time complexity $O(b^d)$. BFS is complete but has exponential space complexity. DFS has linear space complexity but is incomplete. Space is often a harder resource constraint than time. Can we have an algorithm that is complete and has linear space complexity? DFID (Korf, 1987): first do DFS to depth 0 (i.e., treat the start node as having no successors). Then, if no solution is found, do DFS to depth 1, and so on. In other words: start with c = 0; until a solution is found, do DFS with depth bound c and then set c = c + 1.
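
The DFID loop can be sketched as follows. It runs on the exercise graph from the BFS/DFS slides. The graph is our reconstruction: the A-D and B-E edges are assumed, and children are tried in alphabetical order.

```python
# Adjacency lists of the exercise graph (reconstructed; A-D and B-E are assumed).
GRAPH = {
    "S": ["A", "D"], "A": ["B", "D", "S"], "B": ["A", "C", "E"], "C": ["B"],
    "D": ["A", "E", "S"], "E": ["B", "D", "F"], "F": ["E", "G"], "G": ["F"],
}

def depth_limited(path, goal, limit):
    """DFS from path[-1] that never goes more than `limit` edges deeper."""
    node = path[-1]
    if node == goal:
        return path
    if limit == 0:
        return None
    for nxt in GRAPH[node]:
        if nxt not in path:                    # avoid cycles along the current path
            found = depth_limited(path + [nxt], goal, limit - 1)
            if found:
                return found
    return None

def dfid(start, goal, max_depth=20):
    c = 0
    while c <= max_depth:
        found = depth_limited([start], goal, c)
        if found:
            return found, c
        c = c + 1                              # no solution within bound c: deepen
    return None, None

path, depth = dfid("S", "G")
print(path, depth)  # ['S', 'D', 'E', 'F', 'G'] 4
```

The bound reaches c = 4 before G is found. The path returned is the one BFS would find, with the fewest edges. Only the current path is stored, so memory stays linear in the depth.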

- 36. Depth-First Iterative Deepening (DFID): Complete (it iteratively generates all nodes up to depth d). Optimal if all operators have the same cost. Otherwise it is not optimal, but it does guarantee finding a solution with the fewest edges (like BFS). Linear space complexity: $O(b \cdot d)$ (like DFS). Time complexity is exponential, $O(b^d)$, but a little worse than BFS or DFS, because nodes near the top of the search tree are generated multiple times. Worst-case time complexity is exponential for all blind search algorithms!
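
The overhead of regenerating the top levels is small. The sketch below counts nodes under two assumptions: a uniform tree with branching factor b and the goal at depth d, and every node down to depth d being generated (the worst case).

```python
def nodes_bfs(b, d):
    """Nodes in a uniform tree down to depth d: 1 + b + b^2 + ... + b^d."""
    return sum(b ** i for i in range(d + 1))

def nodes_dfid(b, d):
    """Nodes generated by DFID with bounds 0, 1, ..., d: level i is generated d - i + 1 times."""
    return sum((d - i + 1) * b ** i for i in range(d + 1))

b, d = 10, 5
print(nodes_bfs(b, d), nodes_dfid(b, d))              # 111111 123456
print(round(nodes_dfid(b, d) / nodes_bfs(b, d), 3))   # 1.111
```

For b = 10 and d = 5, DFID generates only about 11% more nodes than a single breadth-first pass. The reason is that most nodes sit on the deepest level, which is generated only once.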

- 37. Uniform-Cost / Lowest-Cost-First Search (UCS/LCFS): BFS, DFS and DFID do not take path cost into account. Let g(n) be the cost of the path from the start node to an open node n. Algorithm outline:
  - Always select from OPEN the node with the least g(·) value for expansion, and put all newly generated nodes into OPEN.
  - Keep the nodes in OPEN sorted by their g(·) values (in ascending order).
  - Terminate if a node selected for expansion is a goal.
  
  It is called “Dijkstra's algorithm” in the algorithms literature and is similar to the “branch and bound” algorithm in the operations research literature.

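- 38. UCS example: [Figure: the same weighted graph as on slide 26.] Values in parentheses are g costs.

| Iteration | OPEN (after iteration) | CLOSED (after iteration) |
|---|---|---|
| 0 | [S(0)] | [ ] |
| 1 | [A(3), D(4)] | [S(0)] |
| 2 | [D(4), B(7)] | [S(0), A(3)] |
| 3 | [E(6), B(7)] | [S(0), A(3), D(4)] |
| 4 | [B(7), F(10)] | [S(0), A(3), D(4), E(6)] |
| 5 | [F(10), C(11)] | [S(0), A(3), D(4), E(6), B(7)] |
| 6 | [C(11), G(13)] | [S(0), A(3), D(4), E(6), B(7), F(10)] |
| 7 | [G(13)] | [S(0), A(3), D(4), E(6), B(7), F(10), C(11)] |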
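
The trace in the table can be reproduced with a priority queue. In this minimal sketch, the edge costs are our reconstruction of the exercise graph (A-D and B-E are assumed to cost 5). Nodes that were already expanded are skipped when they are popped again.

```python
import heapq

# Edge costs of the exercise graph (our reconstruction; A-D and B-E are assumed to be 5)
GRAPH = {
    "S": {"A": 3, "D": 4},
    "A": {"S": 3, "B": 4, "D": 5},
    "B": {"A": 4, "C": 4, "E": 5},
    "C": {"B": 4},
    "D": {"S": 4, "A": 5, "E": 2},
    "E": {"B": 5, "D": 2, "F": 4},
    "F": {"E": 4, "G": 3},
    "G": {"F": 3},
}

def uniform_cost_search(start, goal):
    """Always expand the OPEN node with the least g; stop when a goal is selected."""
    open_list = [(0, start, [start])]          # entries are (g, node, path)
    closed, expanded = set(), []
    while open_list:
        g, node, path = heapq.heappop(open_list)
        if node in closed:
            continue                           # a cheaper copy was already expanded
        expanded.append(node)
        if node == goal:
            return g, path, expanded
        closed.add(node)
        for child, cost in GRAPH[node].items():
            if child not in closed:
                heapq.heappush(open_list, (g + cost, child, path + [child]))
    return None

print(uniform_cost_search("S", "G"))
# (13, ['S', 'D', 'E', 'F', 'G'], ['S', 'A', 'D', 'E', 'B', 'F', 'C', 'G'])
```

The recorded selection order S, A, D, E, B, F, C, G matches the iterations in the table. The cheapest path costs 13.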


- 40. Informed Search: Blind (uninformed) search methods only have knowledge about the nodes that they have already explored. They have no knowledge about how far a node might be from a goal state. Heuristic (informed) search methods try to estimate the “distance” to a goal state. A heuristic function h(n) tries to guide the search process toward a goal state:
  - It uses domain-specific information to select the best path to continue searching along.
  - It improves the efficiency of the search process, possibly at the cost of completeness or optimality.
  - It no longer guarantees finding the best answer, but usually finds a very good one.

- 41. Most Winning Lines: [Figure: an x in a corner lies on 3 winning lines, an x in the centre on 4, and an x on an edge square on 2.] Heuristic: move to the board in which x has the most winning lines.
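
Here is a sketch of the “most winning lines” heuristic for tic-tac-toe. We read a winning line as a row, column or diagonal that contains at least one x and no o. The helper names are hypothetical.

```python
# All 8 winning lines of a 3x3 board: 3 rows, 3 columns, 2 diagonals
LINES = ([[(r, c) for c in range(3)] for r in range(3)]
         + [[(r, c) for r in range(3)] for c in range(3)]
         + [[(i, i) for i in range(3)], [(i, 2 - i) for i in range(3)]])

def winning_lines(board, player="x", opponent="o"):
    """Count lines that contain at least one of player's marks and none of opponent's."""
    count = 0
    for line in LINES:
        marks = [board[r][c] for r, c in line]
        if player in marks and opponent not in marks:
            count += 1
    return count

def best_move(board, player="x"):
    """Try every empty square; keep the move that maximizes the heuristic."""
    best, best_score = None, -1
    for r in range(3):
        for c in range(3):
            if board[r][c] == " ":
                trial = [row[:] for row in board]
                trial[r][c] = player
                score = winning_lines(trial, player)
                if score > best_score:
                    best, best_score = (r, c), score
    return best, best_score

empty = [[" "] * 3 for _ in range(3)]
print(best_move(empty))  # ((1, 1), 4): the centre
```

On an empty board, the heuristic picks the centre, which lies on 4 winning lines. A corner lies on 3 and an edge square on 2.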


- 43. Adding heuristic information to blind search algorithms: BFS + heuristics gives beam search; DFS + heuristics gives hill climbing; best-first search; UCS + best-first search gives A* (A-star).

- 44. Imagine the problem of finding a route on a road map, where the graph below is the road map. Let h(T) be the straight-line distance from T to G: h(S) = 11, h(A) = 10.4, h(B) = 6.7, h(C) = 4, h(D) = 8.9, h(E) = 6.9, h(F) = 3, h(G) = 0. The estimate can be wrong! [Figure: the road map, with road lengths 4, 4, 4, 4, 3, 3, 2, 5, 5 on its edges.]

- 45. Heuristic DFS: Perform depth-first search, BUT instead of left-to-right selection, FIRST select the child with the best heuristic value. Example using the straight-line distance: from S, go to D (8.9) rather than A (10.4); from D, go to E (6.9) rather than A (10.4); from E, go to F (3.0) rather than B (6.7); from F, reach G.

- 46. Best-First Search: In HDFS, the best child is always chosen. In best-first search (BeFS), a best node in OPEN is always chosen. That is, BeFS chooses the global best, while HDFS chooses the local best. In BeFS, the children are first added to OPEN and then OPEN is sorted. BeFS is also called greedy search!
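
This minimal sketch contrasts HDFS and BeFS on the route example. It uses straight-line estimates S 11, A 10.4, B 6.7, C 4, D 8.9, E 6.9, F 3 and G 0, which is our reading of the slides. The graph reconstruction, including the A-D and B-E edges, is an assumption.

```python
import heapq

# Exercise graph (A-D and B-E edges assumed) and straight-line estimates h(n) to G.
GRAPH = {
    "S": ["A", "D"], "A": ["B", "D", "S"], "B": ["A", "C", "E"], "C": ["B"],
    "D": ["A", "E", "S"], "E": ["B", "D", "F"], "F": ["E", "G"], "G": ["F"],
}
H = {"S": 11.0, "A": 10.4, "B": 6.7, "C": 4.0, "D": 8.9, "E": 6.9, "F": 3.0, "G": 0.0}

def heuristic_dfs(node, goal, visited=None):
    """HDFS: depth-first, but always try the child with the lowest h first (local best)."""
    visited = set() if visited is None else visited
    visited.add(node)
    if node == goal:
        return [node]
    for child in sorted(GRAPH[node], key=H.get):
        if child not in visited:
            rest = heuristic_dfs(child, goal, visited)
            if rest:
                return [node] + rest
    return None

def best_first(start, goal):
    """BeFS (greedy): always expand the node in OPEN with the lowest h (global best)."""
    open_list = [(H[start], start, [start])]
    closed = set()
    while open_list:
        _h, node, path = heapq.heappop(open_list)
        if node == goal:
            return path
        if node in closed:
            continue
        closed.add(node)
        for child in GRAPH[node]:
            if child not in closed:
                heapq.heappush(open_list, (H[child], child, path + [child]))
    return None

print(heuristic_dfs("S", "G"))  # ['S', 'D', 'E', 'F', 'G']
print(best_first("S", "G"))     # ['S', 'D', 'E', 'F', 'G']
```

On this graph, both strategies follow S, D, E, F, G. They differ in general because HDFS only compares siblings, while BeFS compares every node in OPEN.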

- 47. Touring in Romania: [Figure: road map of Romania connecting 20 cities (Arad, Bucharest, Craiova, Dobreta, Eforie, Fagaras, Giurgiu, Hirsova, Iasi, Lugoj, Mehadia, Neamt, Oradea, Pitesti, Rimnicu Vilcea, Sibiu, Timisoara, Urziceni, Vaslui, Zerind), with road distances on the edges.]

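- 48. Touring in Romania: Heuristic. $h_{SLD}(n)$ = straight-line distance from n to Bucharest.

| City | $h_{SLD}$ | City | $h_{SLD}$ |
|---|---|---|---|
| Arad | 366 | Mehadia | 241 |
| Bucharest | 0 | Neamt | 234 |
| Craiova | 160 | Oradea | 380 |
| Dobreta | 242 | Pitesti | 100 |
| Eforie | 161 | Rimnicu Vilcea | 193 |
| Fagaras | 176 | Sibiu | 253 |
| Giurgiu | 77 | Timisoara | 329 |
| Hirsova | 151 | Urziceni | 80 |
| Iasi | 226 | Vaslui | 199 |
| Lugoj | 244 | Zerind | 374 |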


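- 50. Greedy Best-First Search: Touring Romania (values are $h_{SLD}$). Select Arad (366), generating Sibiu (253), Timisoara (329) and Zerind (374). Select Sibiu (253), generating Arad (366), Fagaras (176), Oradea (380) and Rimnicu Vilcea (193). Select Fagaras (176), generating Sibiu (253) and Bucharest (0). Select Bucharest (0): the goal is reached at depth d = 3.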

- 51. BeFS + UCS = A*: Best-first search can be misled by wrong heuristic information. What happens if the heuristic estimate of C is 6? Best-first search isn't guaranteed to find a solution, even if one exists, and it doesn't always find the shortest path. Uniform-cost search always finds the shortest path, but it is inefficient, because it uses no information about the goal. Combine BeFS and UCS to get A*! Sort OPEN by $f(n) = g(n) + h(n)$, where $g(n)$ is the actual distance from the start to state n and $h(n)$ is the heuristic estimate of the distance from n to the goal.

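- 52. A* Search: Touring Romania (values are $f = g + h$). Select Arad (366), generating Sibiu (393), Timisoara (447) and Zerind (449). Select Sibiu (393), generating Arad (646), Fagaras (415), Oradea (671) and Rimnicu Vilcea (413). Select Rimnicu Vilcea (413), generating Craiova (526), Pitesti (417) and Sibiu (553). Select Fagaras (415), generating Sibiu (591) and Bucharest (450). Select Pitesti (417), generating Bucharest (418), Craiova (615) and Rimnicu Vilcea (607). Select Bucharest (418): the goal is reached.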
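
Both Romania traces can be reproduced with one priority-queue search, switching between f = h (greedy) and f = g + h (A*). The road list is our reconstruction of the standard Russell & Norvig Romania map, whose 23 distances match the numbers on the map slide; treat it as an assumption. Unlike the trees traced above, this graph-search version does not regenerate cities that were already expanded (such as Arad from Sibiu). That does not change the order of expansion here.

```python
import heapq

ROADS = [  # assumed: the Russell & Norvig Romania map
    ("Arad", "Zerind", 75), ("Arad", "Sibiu", 140), ("Arad", "Timisoara", 118),
    ("Zerind", "Oradea", 71), ("Oradea", "Sibiu", 151), ("Timisoara", "Lugoj", 111),
    ("Lugoj", "Mehadia", 70), ("Mehadia", "Dobreta", 75), ("Dobreta", "Craiova", 120),
    ("Craiova", "Rimnicu Vilcea", 146), ("Craiova", "Pitesti", 138),
    ("Sibiu", "Fagaras", 99), ("Sibiu", "Rimnicu Vilcea", 80),
    ("Rimnicu Vilcea", "Pitesti", 97), ("Fagaras", "Bucharest", 211),
    ("Pitesti", "Bucharest", 101), ("Bucharest", "Giurgiu", 90),
    ("Bucharest", "Urziceni", 85), ("Urziceni", "Hirsova", 98), ("Hirsova", "Eforie", 86),
    ("Urziceni", "Vaslui", 142), ("Vaslui", "Iasi", 92), ("Iasi", "Neamt", 87),
]
H_SLD = {
    "Arad": 366, "Bucharest": 0, "Craiova": 160, "Dobreta": 242, "Eforie": 161,
    "Fagaras": 176, "Giurgiu": 77, "Hirsova": 151, "Iasi": 226, "Lugoj": 244,
    "Mehadia": 241, "Neamt": 234, "Oradea": 380, "Pitesti": 100, "Rimnicu Vilcea": 193,
    "Sibiu": 253, "Timisoara": 329, "Urziceni": 80, "Vaslui": 199, "Zerind": 374,
}
GRAPH = {}
for a, b, dist in ROADS:
    GRAPH.setdefault(a, {})[b] = dist
    GRAPH.setdefault(b, {})[a] = dist

def search(start, goal, use_g=True):
    """A* (f = g + h) when use_g is True; greedy best-first (f = h) otherwise."""
    open_list = [(H_SLD[start], 0, start, [start])]  # (f, g, city, path)
    closed, expanded = set(), []
    while open_list:
        _f, g, city, path = heapq.heappop(open_list)
        if city in closed:
            continue
        expanded.append(city)
        if city == goal:
            return g, path, expanded
        closed.add(city)
        for nxt, dist in GRAPH[city].items():
            if nxt not in closed:
                g2 = g + dist
                f = (g2 if use_g else 0) + H_SLD[nxt]
                heapq.heappush(open_list, (f, g2, nxt, path + [nxt]))
    return None

print(search("Arad", "Bucharest", use_g=False))  # greedy: cost 450 via Fagaras
print(search("Arad", "Bucharest"))               # A*: cost 418 via Rimnicu Vilcea, Pitesti
```

Greedy search reaches Bucharest through Fagaras with cost 140 + 99 + 211 = 450. A* returns the cheaper route through Rimnicu Vilcea and Pitesti, with cost 140 + 80 + 97 + 101 = 418.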


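- 54. Truth Tables: Truth tables can be used to show how operators combine propositions into compound propositions.

| P | Q | ¬P | P ∨ Q | P ∧ Q | P → Q | P ↔ Q |
|---|---|---|---|---|---|---|
| F | F | T | F | F | T | T |
| F | T | T | T | F | T | F |
| T | F | F | T | F | F | F |
| T | T | F | T | T | T | T |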
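
The table can be generated mechanically by enumerating every assignment of P and Q. A small sketch (the column names are ours):

```python
from itertools import product

def implies(p, q):
    return (not p) or q

COLUMNS = [  # compound propositions, in the column order of the table
    ("¬P", lambda p, q: not p),
    ("P ∨ Q", lambda p, q: p or q),
    ("P ∧ Q", lambda p, q: p and q),
    ("P → Q", implies),
    ("P ↔ Q", lambda p, q: p == q),
]

def truth_table():
    """One row per assignment, in the order FF, FT, TF, TT."""
    return [(p, q) + tuple(f(p, q) for _name, f in COLUMNS)
            for p, q in product([False, True], repeat=2)]

print(" | ".join(["P", "Q"] + [name for name, _f in COLUMNS]))
for row in truth_table():
    print(" | ".join("T" if v else "F" for v in row))
```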

- 56. Propositional Logic (example): Wumpus World. [Figure: 4 × 4 grid (rows and columns numbered 1 to 4) showing the Wumpus, pits, gold, the hunter on the start square, and the stench and breeze percepts.]

- 57. Propositional Logic (example): Wumpus World. [Figure: the agent's knowledge on the 4 × 4 grid, with two squares marked OK. Legend: p = pit, w = wumpus.]

- 58. Propositional Logic (example): Wumpus World. [Figure: the 4 × 4 grid with one square marked OK and two squares marked p? (possible pit). Legend: p = pit, w = wumpus.]


- 60. Propositional Logic (example): Wumpus World. [Figure: the 4 × 4 grid with a pit located (p!), the wumpus located (w!), and a square marked OK. Legend: p = pit, w = wumpus.]

- 61. Propositional Logic (example): Wumpus World. [Figure: the 4 × 4 grid with a pit (p!) and the wumpus (w!) located and two squares marked OK. Legend: p = pit, w = wumpus.]

- 62. Propositional Logic (example): Wumpus World. [Figure: the 4 × 4 grid with a pit (p!) and the wumpus (w!) located, one square marked OK, and two new squares marked p? (possible pit). Legend: p = pit, w = wumpus.]

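- 63. Propositional Logic (example): A propositional-logic KB agent for the Wumpus World. Goal: derive $W_{1,3}$.
  - Percepts: $\neg S_{1,1}$, $\neg S_{2,1}$, $S_{1,2}$, $\neg B_{1,1}$, $B_{2,1}$, $\neg B_{1,2}$
  - $R_1: \neg S_{1,1} \Rightarrow \neg W_{1,1} \wedge \neg W_{1,2} \wedge \neg W_{2,1}$
  - $R_2: \neg S_{2,1} \Rightarrow \neg W_{1,1} \wedge \neg W_{2,1} \wedge \neg W_{2,2} \wedge \neg W_{3,1}$
  - $R_3: S_{1,2} \Rightarrow W_{1,1} \vee W_{1,2} \vee W_{2,2} \vee W_{1,3}$
  - Derivation:
    1. Modus Ponens on $\neg S_{1,1}$ and $R_1$: $\neg W_{1,1} \wedge \neg W_{1,2} \wedge \neg W_{2,1}$
    2. And-Elimination: $\neg W_{1,1}$, $\neg W_{1,2}$, $\neg W_{2,1}$
    3. Modus Ponens on $\neg S_{2,1}$ and $R_2$: $\neg W_{1,1} \wedge \neg W_{2,1} \wedge \neg W_{2,2} \wedge \neg W_{3,1}$
    4. And-Elimination: $\neg W_{1,1}$, $\neg W_{2,1}$, $\neg W_{2,2}$, $\neg W_{3,1}$
    5. Modus Ponens on $S_{1,2}$ and $R_3$: $W_{1,1} \vee W_{1,2} \vee W_{2,2} \vee W_{1,3}$
    6. Unit Resolution with $\neg W_{1,1}$: $W_{1,2} \vee W_{2,2} \vee W_{1,3}$
    7. Unit Resolution with $\neg W_{1,2}$: $W_{2,2} \vee W_{1,3}$
    8. Unit Resolution with $\neg W_{2,2}$: $W_{1,3}$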
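
The same conclusion can be checked with a different technique, model checking. We enumerate every truth assignment to the wumpus symbols and confirm that $W_{1,3}$ is true in every assignment that satisfies the stench percepts and R1 to R3. In this sketch the symbol names are our own encoding. The breeze percepts are omitted because they do not affect this query.

```python
from itertools import product

PERCEPTS = {"S11": False, "S21": False, "S12": True}   # stench only in [1,2]
SYMBOLS = ["W11", "W12", "W21", "W22", "W31", "W13"]  # "the wumpus is in [x,y]"

def implies(p, q):
    return (not p) or q

def kb_holds(m):
    """True if model m (symbol -> bool) satisfies the percepts and rules R1-R3."""
    s = PERCEPTS
    r1 = implies(not s["S11"], not m["W11"] and not m["W12"] and not m["W21"])
    r2 = implies(not s["S21"], not m["W11"] and not m["W21"] and not m["W22"] and not m["W31"])
    r3 = implies(s["S12"], m["W11"] or m["W12"] or m["W22"] or m["W13"])
    return r1 and r2 and r3

def entails(query):
    """KB |= query iff query is true in every model of the KB (truth-table enumeration)."""
    models = [dict(zip(SYMBOLS, values))
              for values in product([False, True], repeat=len(SYMBOLS))]
    return all(m[query] for m in models if kb_holds(m))

print(entails("W13"))  # True: the wumpus must be in [1,3]
print(entails("W22"))  # False
```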

- 64. A puzzle to solve: In the back of an old cupboard you discover a note signed by a pirate famous for his bizarre sense of humor and love of logical puzzles. In the note he wrote that he had hidden treasure somewhere on the property. He listed the following five true statements and challenged the reader to use them to figure out the location of the treasure. Where is the treasure hidden?

- 65. A puzzle to solve (continued):
  - a. If this house is next to a lake, then the treasure is not in the kitchen.
  - b. If the tree in the front yard is an elm, then the treasure is in the kitchen.
  - c. This house is next to a lake.
  - d. The tree in the front yard is an elm or the treasure is buried under the flagpole.
  - e. If the tree in the back yard is an oak, then the treasure is in the garage.
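
Each statement a to e becomes a propositional formula. We enumerate all 2^6 truth assignments and keep those that satisfy all five. A conclusion is entailed if it holds in every surviving model. The proposition names are ours. The puzzle does not say that the treasure is in only one place, so the sketch does not assume it either.

```python
from itertools import product

NAMES = ["lake", "kitchen", "elm", "flagpole", "oak", "garage"]
# lake: the house is next to a lake; elm: the front-yard tree is an elm;
# oak: the back-yard tree is an oak; kitchen/flagpole/garage: the treasure is there

def implies(p, q):
    return (not p) or q

def statements_hold(lake, kitchen, elm, flagpole, oak, garage):
    return (implies(lake, not kitchen)      # a
            and implies(elm, kitchen)       # b
            and lake                        # c
            and (elm or flagpole)           # d
            and implies(oak, garage))       # e

MODELS = [dict(zip(NAMES, values))
          for values in product([False, True], repeat=len(NAMES))
          if statements_hold(*values)]

for place in ["kitchen", "flagpole", "garage"]:
    values = {m[place] for m in MODELS}
    verdict = "yes" if values == {True} else "no" if values == {False} else "undetermined"
    print(place, verdict)
```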

- 66. Another puzzle to solve: A detective has interviewed four witnesses to a crime. From the stories of the witnesses, the detective has concluded that if the butler is telling the truth then so is the cook; the cook and the gardener cannot both be telling the truth; the gardener and the handyman are not both lying; and if the handyman is telling the truth then the cook is lying. For each of the four witnesses, can the detective determine whether that person is telling the truth or lying?

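- 67. Another puzzle to solve (continued): Let B, C, G and H stand for “the butler / cook / gardener / handyman is telling the truth”.
  - If the butler is telling the truth then so is the cook: $B \Rightarrow C$
  - The cook and the gardener cannot both be telling the truth: $\neg(C \wedge G)$
  - The gardener and the handyman are not both lying: $\neg(\neg G \wedge \neg H) \equiv (G \vee H)$
  - If the handyman is telling the truth then the cook is lying: $H \Rightarrow \neg C$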
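
The four formulas can be checked by enumerating all 16 truth assignments to B, C, G and H, where true means “telling the truth”. A sketch:

```python
from itertools import product

def implies(p, q):
    return (not p) or q

def consistent(b, c, g, h):
    """True if the assignment satisfies all four of the detective's conclusions."""
    return (implies(b, c)                # butler truthful -> cook truthful
            and not (c and g)            # cook and gardener not both truthful
            and (g or h)                 # gardener and handyman not both lying
            and implies(h, not c))       # handyman truthful -> cook lying

MODELS = [dict(zip("BCGH", values))
          for values in product([False, True], repeat=4) if consistent(*values)]

for person in "BCGH":
    values = {m[person] for m in MODELS}
    verdict = "truthful" if values == {True} else "lying" if values == {False} else "undetermined"
    print(person, verdict)
```

Only three assignments survive. In all of them, the butler and the cook are lying. The gardener and the handyman can each be truthful or lying, but not both lying. So the detective can determine only the butler and the cook.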


- 70. What Is Machine Learning? “A field of study that gives computers the ability to learn without being explicitly programmed.” (Arthur Samuel, 1959). “A computer program is said to learn from experience E with respect to some task T and some performance measure P, if its performance on T, as measured by P, improves with experience E.” (Tom Mitchell, 1997). ML is a prominent sub-field of AI, which Andrew Ng has called “the new electricity.”

- 71. What Is Machine Learning? (continued) In traditional computer systems, rules and data go into programming, which produces answers. In machine learning systems, data and answers go into training, which produces the rules.

- 72. Main types of machine learning: supervised learning (classification, regression); unsupervised learning (clustering, association rules); semi-supervised learning; reinforcement learning.

- 73. Supervised learning: The learner learns under some supervision (examples: mushroom picking; expanding your business to other states). All the data (examples) come with labels. We need to learn (fit) a model that explains all the examples. Given a new instance, the model predicts its label.

- 74. Supervised learning: [Figure: a labelled training example, “This is a cat”.]

- 75. Supervised learning: two main types, classification and regression.

- 79. Classification: there are many algorithms, including decision trees and random forests, logistic regression, support vector machines (SVMs), the naïve Bayesian classifier, k-nearest neighbours, and neural networks.

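- 80. Decision Tree Learning: credit-risk training data.

| No. | Risk | History | Debt | Collateral | Income |
|---|---|---|---|---|---|
| 1 | high | bad | high | none | \$0 to \$15K |
| 2 | high | unknown | high | none | \$15 to \$35K |
| 3 | moderate | unknown | low | none | \$15 to \$35K |
| 4 | high | unknown | low | none | \$0 to \$15K |
| 5 | low | unknown | low | none | over \$35K |
| 6 | low | unknown | low | adequate | over \$35K |
| 7 | high | bad | low | none | \$0 to \$15K |
| 8 | moderate | bad | low | adequate | over \$35K |
| 9 | low | good | low | none | over \$35K |
| 10 | low | good | high | adequate | over \$35K |
| 11 | high | good | high | none | \$0 to \$15K |
| 12 | moderate | good | high | none | \$15 to \$35K |
| 13 | low | good | high | none | over \$35K |
| 14 | high | bad | high | none | \$15 to \$35K |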
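
ID3-style decision tree learning picks the attribute with the highest information gain as the root. This sketch computes the gains on the table above. Attribute values are shortened, and the code is ours, not from the slides.

```python
import math
from collections import Counter

# (risk, history, debt, collateral, income) for rows 1-14 of the table
ROWS = [
    ("high", "bad", "high", "none", "0-15K"),
    ("high", "unknown", "high", "none", "15-35K"),
    ("moderate", "unknown", "low", "none", "15-35K"),
    ("high", "unknown", "low", "none", "0-15K"),
    ("low", "unknown", "low", "none", "over 35K"),
    ("low", "unknown", "low", "adequate", "over 35K"),
    ("high", "bad", "low", "none", "0-15K"),
    ("moderate", "bad", "low", "adequate", "over 35K"),
    ("low", "good", "low", "none", "over 35K"),
    ("low", "good", "high", "adequate", "over 35K"),
    ("high", "good", "high", "none", "0-15K"),
    ("moderate", "good", "high", "none", "15-35K"),
    ("low", "good", "high", "none", "over 35K"),
    ("high", "bad", "high", "none", "15-35K"),
]
ATTRIBUTES = ["history", "debt", "collateral", "income"]  # columns 1-4 of each row

def entropy(labels):
    """Information content, in bits, of a list of class labels."""
    n = len(labels)
    return -sum((k / n) * math.log2(k / n) for k in Counter(labels).values())

def information_gain(rows, column):
    """Entropy of the risk labels minus the expected entropy after splitting on `column`."""
    labels = [row[0] for row in rows]
    remainder = 0.0
    for value in {row[column] for row in rows}:
        subset = [row[0] for row in rows if row[column] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return entropy(labels) - remainder

gains = {name: information_gain(ROWS, i + 1) for i, name in enumerate(ATTRIBUTES)}
print({name: round(g, 3) for name, g in gains.items()})
print("root attribute:", max(gains, key=gains.get))  # income
```

Income has by far the largest gain: about 0.966 bits, compared with about 0.266 for credit history. So income is chosen as the root test.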

- 81. A decision tree for credit risk assessment.

- 82. Another decision tree for credit risk.

- 83. Which tree is preferred? Occam’s razor: the simplest explanation is generally the best explanation. A simpler tree can be expected to perform better on a test set, because it is less prone to overfitting the training data.

- 84. Support Vector Machines: Support vector machines were invented by V. Vapnik and his co-workers in the 1970s in Russia and became known to the West in 1992. SVMs are linear classifiers that find a hyperplane to separate two classes of data, positive and negative. Kernel functions are used for nonlinear separation. SVMs not only have a rigorous theoretical foundation, but also classify more accurately than most other methods in many applications, especially for high-dimensional data. They are perhaps the best classifier for text classification.

- 85. Support Vector Machines Which of the linear separators is optimal?

- 87. Nonlinear SVMs: What if the data is linearly inseparable? Can we apply some mathematical trick? [Figure: examples of linearly inseparable data, on axes x and y.]

- 88. Nonlinear SVMs: [Figure: the same data mapped to squared features, with axes (x, x²) for the one-dimensional example and (x², y²) for the two-dimensional one, where it becomes linearly separable.]

- 89. Nonlinear SVMs: General idea: the original input space can always be mapped to some higher-dimensional feature space where the training set is separable, $\Phi: \mathbf{x} \rightarrow \varphi(\mathbf{x})$. For example, $\Phi: \mathbb{R}^2 \rightarrow \mathbb{R}^3$, $(x_1, x_2) \mapsto (x_1^2, \sqrt{2}\,x_1 x_2, x_2^2)$.
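
The map $(x_1, x_2) \mapsto (x_1^2, \sqrt{2}\,x_1 x_2, x_2^2)$ is exactly the feature map behind the degree-2 polynomial kernel $K(\mathbf{x}, \mathbf{z}) = (\mathbf{x} \cdot \mathbf{z})^2$. In other words, the dot product in the 3-D feature space can be computed without ever building $\varphi(\mathbf{x})$. Below is a quick numeric check; the function names are ours.

```python
import math

def phi(x):
    """Explicit map R^2 -> R^3: (x1, x2) -> (x1^2, sqrt(2) x1 x2, x2^2)."""
    x1, x2 = x
    return (x1 ** 2, math.sqrt(2) * x1 * x2, x2 ** 2)

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def poly2_kernel(x, z):
    """K(x, z) = (x . z)^2, computed in the original 2-D space."""
    return dot(x, z) ** 2

x, z = (1.0, 2.0), (3.0, -1.0)
print(dot(phi(x), phi(z)), poly2_kernel(x, z))  # both equal 1 (up to rounding)
```

This is the kernel trick. An SVM only needs dot products between instances, so it can work in the feature space through K alone.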

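- 90. Bayes Classification: $P(h \mid D) = \frac{P(D \mid h)\,P(h)}{P(D)}$. The posterior probability $P(y_q \mid \mathbf{x})$ is calculated from $P(\mathbf{x} \mid y_q)$ and $P(y_q)$ using Bayes’ theorem. The learner wants to find the most probable class $y_q$ given the observed $\mathbf{x}$. Any such maximally probable class is called a maximum a posteriori (MAP) class: $y_{MAP} = \arg\max_q P(y_q \mid \mathbf{x}) = \arg\max_q \frac{P(\mathbf{x} \mid y_q)\,P(y_q)}{P(\mathbf{x})} = \arg\max_q P(\mathbf{x} \mid y_q)\,P(y_q)$. The last step holds because $P(\mathbf{x})$ does not depend on $q$.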

- 91. Naive Bayes Classifier: The naïve Bayes assumption is conditional independence: $P(\langle x_1, x_2, \dots, x_n \rangle \mid y_q) = \prod_j P(x_j \mid y_q)$. With conditional independence, the MAP classifier becomes the naïve Bayes classifier: $y_{NB} = \arg\max_q P(y_q) \prod_j P(x_j \mid y_q)$. Is it a reasonable assumption?

- 92. Naive Bayes Classifier: Example. Test example: A = m and B = q. Priors: P(C=t) = 1/2, P(C=f) = 1/2. Likelihoods: P(A=m | C=t) = 2/5, P(A=m | C=f) = 1/5, P(B=q | C=t) = 2/5, P(B=q | C=f) = 2/5. Then P(C=t) P(x | C=t) = 1/2 × 2/5 × 2/5 = 2/25 and P(C=f) P(x | C=f) = 1/2 × 1/5 × 2/5 = 1/25. Therefore, C = t is the prediction.
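
Here is the same arithmetic in code. It uses exact fractions, so the scores match the slide. The data structures are our own.

```python
from fractions import Fraction

PRIORS = {"t": Fraction(1, 2), "f": Fraction(1, 2)}
LIKELIHOODS = {  # P(attribute value | class), from the slide
    "t": {"A=m": Fraction(2, 5), "B=q": Fraction(2, 5)},
    "f": {"A=m": Fraction(1, 5), "B=q": Fraction(2, 5)},
}

def naive_bayes_scores(observed):
    """P(c) times the product of P(x_j | c), for each class c (unnormalized MAP score)."""
    scores = {}
    for c, prior in PRIORS.items():
        score = prior
        for value in observed:
            score *= LIKELIHOODS[c][value]
        scores[c] = score
    return scores

scores = naive_bayes_scores(["A=m", "B=q"])
prediction = max(scores, key=scores.get)
print(scores, prediction)  # {'t': Fraction(2, 25), 'f': Fraction(1, 25)} t
```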

- 93. k-NN classifier: Key idea: just store all training examples $\langle x_i, f(x_i) \rangle$. Nearest neighbour: given a query instance $x_q$, first locate the nearest training example $x_n$, then estimate $\hat f(x_q) = f(x_n)$. k-nearest neighbours: given $x_q$, take a vote among its k nearest neighbours if the target function is discrete-valued. If it is real-valued, take the mean of the f values of the k nearest neighbours: $\hat f(x_q) = \frac{1}{k}\sum_{i=1}^{k} f(x_i)$.
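
This minimal sketch covers both variants. It assumes Euclidean distance; other distance measures are possible.

```python
import math
from collections import Counter

def k_nearest(examples, xq, k):
    """The k stored examples (x, f(x)) closest to xq in Euclidean distance."""
    return sorted(examples, key=lambda ex: math.dist(ex[0], xq))[:k]

def knn_classify(examples, xq, k):
    """Discrete-valued target: majority vote among the k nearest neighbours."""
    votes = Counter(label for _x, label in k_nearest(examples, xq, k))
    return votes.most_common(1)[0][0]

def knn_regress(examples, xq, k):
    """Real-valued target: mean of f over the k nearest neighbours."""
    return sum(y for _x, y in k_nearest(examples, xq, k)) / k

# Hypothetical training data: there is no learning step, the examples are simply stored
points = [((0, 0), "a"), ((0, 1), "a"), ((1, 0), "a"), ((5, 5), "b"), ((5, 6), "b")]
print(knn_classify(points, (0.2, 0.2), k=3))  # a
print(knn_classify(points, (5, 5.4), k=3))    # b (2 of the 3 nearest are b)
samples = [((1,), 1.0), ((2,), 2.0), ((3,), 3.0), ((10,), 10.0)]
print(knn_regress(samples, (2,), k=3))        # 2.0
```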

- 94. Regression: Regression models the relationship between variables, where changes in some variables may “explain” or possibly “cause” changes in other variables. The explanatory variables are termed the independent variables, and the variables to be explained are termed the dependent variables. A regression model estimates the nature of the relationship between the independent and dependent variables. Example: how much should you pay for your new place?

- 95. Regression: We want to find the best line (a linear function $y = f(X)$) to explain the data. [Figure: data points and a fitted line, on axes X and y.]
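
“Best” is usually taken to mean least squares: choose the slope and intercept that minimize the sum of squared vertical errors. Here is a sketch of the closed-form solution for one input variable. The choice of criterion and the names are ours.

```python
def fit_line(xs, ys):
    """Least-squares slope and intercept for y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

slope, intercept = fit_line([0, 1, 2, 3], [1, 3, 5, 7])  # made-up points on y = 2x + 1
print(slope, intercept)  # 2.0 1.0
```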

- 97. Polynomial Regression: What if your data is more complex than a straight line? Surprisingly, you can use a linear model to fit nonlinear data. A simple way to do this is to add powers of each feature as new features, then train a linear model on this extended set of features.
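
Here is a sketch of the idea with NumPy: build the feature columns 1, x and x², then solve an ordinary least-squares problem on them. The noise-free quadratic data are made up for the check.

```python
import numpy as np

def fit_polynomial(x, y, degree):
    """Least-squares fit of y = w0 + w1*x + ... + w_degree*x^degree."""
    X_poly = np.column_stack([x ** p for p in range(degree + 1)])  # features 1, x, x^2, ...
    coef, *_ = np.linalg.lstsq(X_poly, y, rcond=None)               # plain linear model
    return coef

x = np.linspace(-3, 3, 20)
y = 0.5 * x ** 2 + x + 2            # made-up quadratic data, no noise
print(fit_polynomial(x, y, degree=2))  # approximately [2, 1, 0.5]
```

The model is still linear in its weights w0, w1 and w2; only the features are nonlinear in x.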

- 98. Example

- 103. Bias-Variance Trade-Off: There is a natural trade-off between bias and variance. Procedures with more flexibility to adapt to the training data tend to have lower bias but higher variance. Simpler (inflexible) models with fewer parameters tend to have higher bias but lower variance. To minimize the overall mean squared error, we need a hypothesis that has both low bias and low variance. This is known as the bias-variance dilemma or bias-variance trade-off.
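
For squared error, the trade-off can be written exactly. Assume noise-free targets and take the expectation over training sets. Then the error of a learned hypothesis $\hat f$ at a point $x$ splits into a squared bias term and a variance term:

$$\mathbb{E}\big[(\hat f(x) - f(x))^2\big] = \underbrace{\big(\mathbb{E}[\hat f(x)] - f(x)\big)^2}_{\text{bias}^2} + \underbrace{\mathbb{E}\big[(\hat f(x) - \mathbb{E}[\hat f(x)])^2\big]}_{\text{variance}}$$

With noisy targets, an irreducible noise term $\sigma^2$ is added to the right-hand side.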

- 107. Ensemble learning: [Figure: predictions of five models (1 to 5) compared with reality, with errors marked X, and the result of combining them.]
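
Here is one reason combining helps, as a worked sketch. Suppose each model is correct with probability p and the models' errors are independent. This is a strong assumption that real ensembles only approximate. Under it, a majority vote is correct more often than any single model.

```python
from math import comb

def majority_vote_accuracy(n_models, p):
    """P(a strict majority of n independent models is correct); n is assumed odd."""
    need = n_models // 2 + 1
    return sum(comb(n_models, k) * p ** k * (1 - p) ** (n_models - k)
               for k in range(need, n_models + 1))

for n in (1, 3, 5):
    print(n, round(majority_vote_accuracy(n, 0.7), 3))  # 0.7, 0.784, 0.837
```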

- 108. Unsupervised Learning: In unsupervised (undirected) learning, the outputs $y^{(i)}$ are not available; we only have the set of feature vectors $\mathbf{x}^{(i)}$. Goal: unravel the underlying similarities in the data and form groups or clusters based on the feature vectors. Main types: clustering and association rules.

- 109. Clustering: Clustering is a technique for finding similarity groups in data, called clusters. That is, it groups data instances that are similar to (near) each other into one cluster, and data instances that are very different (far away) from each other into different clusters.

- 110. Clustering: Example 1: group people of similar sizes together to make “small”, “medium” and “large” T-shirts (tailor-made for each person is too expensive; one-size-fits-all does not fit all). Example 2: in marketing, segment customers according to their similarities, to do targeted marketing. Example 3: given a collection of text documents, organize them according to their content similarities, to produce a topic hierarchy.

- 111. K-Means Clustering: The clusters found by K-Means partition the space into a Voronoi diagram (tessellation). K-Means is also known as the Lloyd-Forgy algorithm. It was developed in 1957 by Lloyd at Bell Labs (published only in 1982) and independently in 1965 by Forgy. In 2006, Arthur and Vassilvitskii introduced K-Means++, a smarter initialization that tends to select initial centroids far from one another. It is the default initialization in Scikit-Learn's KMeans class. There are other variants, such as mini-batch K-Means.

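- 112. K-Means Clustering with Scikit-Learn:

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

X, _ = make_blobs(n_samples=500, centers=5, random_state=42)  # toy data; the slide leaves X unspecified
kmeans = KMeans(n_clusters=5)
y_pred = kmeans.fit_predict(X)  # cluster index of each instance
```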

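- 113. K-Means Algorithm: Start from k initial centroids and repeat two steps until the centroids stop changing: 1. assign each instance to the cluster whose centroid is the closest; 2. update the centroid of each cluster to the mean of its instances.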
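
The two steps can be sketched in plain Python as Lloyd's algorithm; the toy points and function name are ours. The second call also shows why the initial seeds matter. Starting from a poor pair of centroids, the algorithm converges immediately to a much worse clustering.

```python
import math

def kmeans(points, centroids, max_iter=100):
    """Lloyd's algorithm: alternate the assignment and update steps until nothing changes."""
    centroids = [tuple(c) for c in centroids]
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its closest centroid
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its points (keep it if empty)
        new_centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else c
            for cluster, c in zip(clusters, centroids)
        ]
        if new_centroids == centroids:
            break
        centroids = new_centroids
    inertia = sum(min(math.dist(p, c) ** 2 for c in centroids) for p in points)
    return centroids, inertia

points = [(0, 0), (0, 1), (10, 0), (10, 1)]
print(kmeans(points, [(0, 0), (10, 0)]))  # good seeds: (0, 0.5), (10, 0.5), inertia 1.0
print(kmeans(points, [(5, 0), (5, 1)]))   # poor seeds: stuck at (5, 0), (5, 1), inertia 100.0
```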

- 115. K-Means Clustering: The K-Means algorithm is sensitive to the initial seeds.


- 117. Association Rule Learning: Association rules discover association relationships or correlations among a set of items. Market basket transactions: t1: {bread, cheese, milk}; t2: {apple, eggs, salt, yogurt}; … ; tn: {biscuit, eggs, milk}. Association rules are widely applied in recommender systems.
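
The usual measures behind association rules are support and confidence. Support is the fraction of transactions that contain an itemset. Confidence is how often a rule's right-hand side appears in the transactions that contain its left-hand side. Here is a sketch on a made-up set of four baskets.

```python
TRANSACTIONS = [  # hypothetical market baskets
    {"bread", "cheese", "milk"},
    {"apple", "eggs", "salt", "yogurt"},
    {"bread", "milk"},
    {"biscuit", "eggs", "milk"},
]

def support(itemset, transactions):
    """Fraction of transactions that contain every item in itemset."""
    itemset = set(itemset)
    return sum(itemset <= t for t in transactions) / len(transactions)

def confidence(lhs, rhs, transactions):
    """Estimated P(rhs | lhs) = support(lhs ∪ rhs) / support(lhs)."""
    return support(set(lhs) | set(rhs), transactions) / support(lhs, transactions)

print(support({"bread", "milk"}, TRANSACTIONS))       # 0.5
print(confidence({"bread"}, {"milk"}, TRANSACTIONS))  # 1.0: every basket with bread has milk
print(confidence({"milk"}, {"bread"}, TRANSACTIONS))  # about 0.667
```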

- 118. Reinforcement Learning: The reinforcement learning (RL) problem is the problem faced by an agent that learns behavior through trial-and-error interactions with its environment. It consists of an agent that exists in an environment described by a set S of possible states, a set A of possible actions, and a reward (or punishment) $r_t$ that the agent receives at each time t after it takes an action in a state. (Alternatively, the reward might not occur until after a sequence of actions has been taken.) The objective of an RL agent is to maximize the cumulative reward it receives over its lifetime.

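- 119. Reinforcement Learning Problem: [Figure: agent-environment loop. In state $s_t$ the agent takes action $a_t$; the environment returns reward $r_{t+1}$ and next state $s_{t+1}$, giving the trajectory $s_0, a_0, r_1, s_1, a_1, r_2, s_2, a_2, r_3, s_3, \dots$] Goal: learn to choose actions $a_t$ that maximize the future rewards $r_1 + \gamma r_2 + \gamma^2 r_3 + \dots$, where $0 < \gamma < 1$ is a discount factor.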
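
The quantity being maximized, the discounted return, is easy to compute for a finite episode. A minimal sketch (the function name is ours):

```python
def discounted_return(rewards, gamma):
    """r1 + gamma*r2 + gamma^2*r3 + ... for a finite list of rewards [r1, r2, r3, ...]."""
    total = 0.0
    for t, reward in enumerate(rewards):
        total += gamma ** t * reward
    return total

print(discounted_return([1, 1, 1], 0.5))   # 1.75
print(discounted_return([0, 0, 10], 0.9))  # about 8.1: a delayed reward is worth less
```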

- 120. Reinforcement Learning: Building a learning robot/agent. Sensors observe the state, and a set of actions modifies the state. The task is to learn a control strategy (policy): what action a to take in state s to achieve the goal. Goals are defined by a reward function that gives a numeric value for each action. Two significant attributes of RL are trial-and-error search and cumulative reward. Example: TD-Gammon was trained by playing 1.5 million games against itself and became roughly equal to the best human players.

- 121. Semi-Supervised Learning: Using clustering for semi-supervised learning. Another use case for clustering is semi-supervised learning, when we have plenty of unlabeled instances and very few labeled instances. Labeling instances is often costly, especially when it has to be done manually by experts. It is therefore a good idea to label representative instances rather than just random instances (e.g., a manufacturing example).

- 122. Semi-Supervised Learning: Let’s train a Logistic Regression model on the first 50 labeled instances from the digits dataset. Here `X_train`, `y_train`, `X_test` and `y_test` hold a training/test split of that dataset.

```python
from sklearn.linear_model import LogisticRegression

n_labeled = 50  # use only the first 50 labeled instances
log_reg = LogisticRegression()
log_reg.fit(X_train[:n_labeled], y_train[:n_labeled])
```

What is its performance on the test set?

```python
>>> log_reg.score(X_test, y_test)
0.8333333333333334
```

The accuracy is low because we trained on a very small sample of only 50 instances.

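- 123. Semi-Supervised Learning: Let’s see how we can improve it. We cluster the training set into 50 clusters and, in each cluster, find the image closest to the centroid. We will call these images the representative images.

```python
import numpy as np
from sklearn.cluster import KMeans

k = 50
kmeans = KMeans(n_clusters=k)
X_digits_dist = kmeans.fit_transform(X_train)  # distance from each instance to each centroid
representative_digit_idx = np.argmin(X_digits_dist, axis=0)  # closest instance per cluster
X_representative_digits = X_train[representative_digit_idx]
# Labels assigned manually after looking at the 50 representative images (list elided)
y_representative_digits = np.array([4, 8, 0, 6, 8, 3, ..., 7, 6, 2, 3, 1, 1])
```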

- 124. Semi-Supervised Learning: Now we have a dataset with just 50 labeled instances, but instead of being random instances, each of them is a representative image of its cluster. Let’s see if the performance is any better:

```python
>>> log_reg = LogisticRegression()
>>> log_reg.fit(X_representative_digits, y_representative_digits)
>>> log_reg.score(X_test, y_test)
0.9222222222222223
```

We jumped from 83.3% accuracy to 92.2%, although we are still training the model on only 50 instances.

- 125. Semi-Supervised Learning: We can do better by propagating the labels to all the other instances in the same cluster. This is called label propagation.

```python
k = 50  # number of clusters used for the representative images
y_train_propagated = np.empty(len(X_train), dtype=np.int32)
for i in range(k):
    # Every instance in cluster i gets the label of that cluster's representative image
    y_train_propagated[kmeans.labels_ == i] = y_representative_digits[i]
```

Let us train again and check its performance:

```python
>>> log_reg = LogisticRegression()
>>> log_reg.fit(X_train, y_train_propagated)
>>> log_reg.score(X_test, y_test)
0.9333333333333333
```

- 126. Semi-Supervised Learning: We propagated each representative instance’s label to all the instances in the same cluster, including the ones near the cluster boundaries, which are more likely to be mislabeled. Let’s see what happens if we only propagate the labels to the 20% of instances closest to their centroids:

```python
>>> log_reg = LogisticRegression()
>>> log_reg.fit(X_train_partially_propagated, y_train_partially_propagated)
>>> log_reg.score(X_test, y_test)
0.94
```

The propagated labels are pretty good: about 99% of them are correct.

```python
>>> np.mean(y_train_partially_propagated == y_train[partially_propagated])
0.9896907216494846
```
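
The slide does not show how `partially_propagated` was built. One way is to keep, in each cluster, only the instances whose distance to their centroid is at or below that cluster's 20th percentile. The sketch below does this under our own naming. With the earlier objects, the distances could be taken as `X_digits_dist[np.arange(len(X_train)), kmeans.labels_]`, and the resulting mask would index `X_train` and `y_train_propagated`.

```python
import numpy as np

def closest_fraction_mask(dist_to_own_centroid, cluster_labels, percentile=20):
    """True for instances whose distance to their own centroid is within the given
    percentile of the distances in their cluster; False for the rest."""
    keep = np.zeros(len(cluster_labels), dtype=bool)
    for cluster in np.unique(cluster_labels):
        in_cluster = cluster_labels == cluster
        cutoff = np.percentile(dist_to_own_centroid[in_cluster], percentile)
        keep |= in_cluster & (dist_to_own_centroid <= cutoff)
    return keep

# Toy check: two clusters of five instances each (made-up distances)
labels = np.array([0, 0, 0, 0, 0, 1, 1, 1, 1, 1])
dists = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 1.0, 2.0, 3.0, 4.0, 5.0])
print(closest_fraction_mask(dists, labels))
```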

- 127. Active Learning: To continue improving your model and your training set, the next step could be a few rounds of active learning. In active learning, a human expert interacts with the learning algorithm, providing labels for specific instances when the algorithm requests them. There are many different strategies for active learning, but one of the most common is called uncertainty sampling.

- 128. Active Learning: Uncertainty sampling works as follows: 1. The model is trained on the labeled instances gathered so far, and this model is used to make predictions on all the unlabeled instances. 2. The instances for which the model is most uncertain (i.e., those whose highest estimated class probability is lowest) are given to the expert to be labeled. 3. You iterate this process until the performance improvement stops being worth the labeling effort.
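
Step 2 can be sketched as a least-confidence ranking. In practice, the probabilities could come from a classifier's `predict_proba` method. Here they are made up.

```python
def least_confident(probabilities, n):
    """Indices of the n instances whose highest class probability is lowest."""
    top = [max(p) for p in probabilities]
    return sorted(range(len(probabilities)), key=lambda i: top[i])[:n]

# Hypothetical predicted class probabilities for four unlabeled instances
probs = [[0.90, 0.10], [0.55, 0.45], [0.60, 0.40], [0.99, 0.01]]
print(least_confident(probs, 2))  # [1, 2]: these go to the expert for labeling
```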

- 129. Active Learning: Other strategies include labeling the instances that would result in the largest model change or the largest drop in the model’s validation error, or the instances that different models disagree on.

- 130. Deep Learning: More layers can encapsulate more knowledge, but they also mean more weights to train, so deep networks need more data and more computation.

- 131. History of Deep Learning

- 132. The game changer (2012): The AlexNet CNN architecture won the 2012 ImageNet challenge by a large margin. It achieved a top-five error rate of 17%, while the second best achieved only 26%! It was developed by Alex Krizhevsky (hence the name), Ilya Sutskever, and Geoffrey Hinton. It is similar to LeNet-5, only much larger and deeper. It was the first to stack convolutional layers directly on top of one another, instead of stacking a pooling layer on top of each convolutional layer.

- 133. The game changer (2012)

- 134. Deep learning all the way: Deep learning has found applications in many types of problems, such as natural-language processing. It has completely replaced SVMs and decision trees in a wide range of applications. For example, the European Organization for Nuclear Research (CERN) used decision-tree-based methods for several years. CERN eventually switched to Keras-based deep neural networks due to their higher performance and ease of training on large datasets.

- 135. Deep learning all the way: Deep learning became popular because:
  1. it performs much better than traditional ML on many problems;
  2. it made problem-solving much easier, because it completely automates what used to be the most crucial step in a machine-learning workflow: feature engineering;
  3. it develops increasingly complex representations incrementally, layer by layer;
  4. these intermediate, incremental representations are learned jointly;
  5. it arrived at the right time, with lots of data and lots of computing power!