Lucene Indexing Guide: Understanding Indexing, Queries, Boosting and More

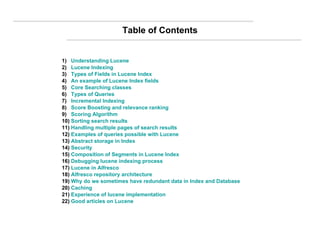

- 1. Table of Contents
  1) Understanding Lucene
  2) Lucene Indexing
  3) Types of Fields in Lucene Index
  4) An example of Lucene Index fields
  5) Core Searching classes
  6) Types of Queries
  7) Incremental Indexing
  8) Score Boosting and relevance ranking
  9) Scoring Algorithm
  10) Sorting search results
  11) Handling multiple pages of search results
  12) Examples of queries possible with Lucene
  13) Abstract storage in Index
  14) Security
  15) Composition of Segments in Lucene Index
  16) Debugging Lucene indexing process
  17) Lucene in Alfresco
  18) Alfresco repository architecture
  19) Why do we sometimes have redundant data in Index and Database
  20) Caching
  21) Experience of Lucene implementation
  22) Good articles on Lucene

- 3. Understanding Lucene

  Lucene doesn’t care about the source of the data, its format, or even its language, as long as you can convert it to text. This means you can use Lucene to index and search data stored in files: web pages on remote web servers, documents stored in local file systems, in databases, simple text files, Microsoft Word documents, HTML or PDF files, or any other format from which you can extract textual information.

  The quality of a search is typically described using precision and recall metrics. Recall measures how well the search system finds relevant documents, whereas precision measures how well the system filters out the irrelevant documents.
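The two metrics above can be made concrete with a small, Lucene-independent sketch. The class name `PrecisionRecall`, the document ids, and the convention of returning 0.0 for an empty input are assumptions made for illustration only; the sketch also assumes each id appears at most once in the result list.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

class PrecisionRecall {

    // Counts how many of the returned document ids are in the relevant set.
    // Assumes each id appears at most once in the returned list.
    private static int relevantReturned(List<String> returned, Set<String> relevant) {
        int count = 0;
        for (String id : returned) {
            if (relevant.contains(id)) {
                count++;
            }
        }
        return count;
    }

    // Precision: fraction of the returned documents that are relevant.
    public static double precision(List<String> returned, Set<String> relevant) {
        if (returned.isEmpty()) {
            return 0.0; // convention chosen for this sketch
        }
        return (double) relevantReturned(returned, relevant) / returned.size();
    }

    // Recall: fraction of the relevant documents that were returned.
    public static double recall(List<String> returned, Set<String> relevant) {
        if (relevant.isEmpty()) {
            return 0.0; // convention chosen for this sketch
        }
        return (double) relevantReturned(returned, relevant) / relevant.size();
    }

    public static void main(String[] args) {
        List<String> returned = Arrays.asList("d1", "d2", "d3", "d4");
        Set<String> relevant = new HashSet<>(Arrays.asList("d1", "d3", "d5"));
        // 2 of the 4 returned documents are relevant.
        System.out.println("precision = " + precision(returned, relevant));
        // 2 of the 3 relevant documents were found.
        System.out.println("recall    = " + recall(returned, relevant));
    }
}
```

In this example the search returns four documents, two of which are relevant, while one relevant document (d5) is missed: precision is 2/4 and recall is 2/3.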

- 4. Lucene Indexing

  As you saw in our Indexer class, you need the following classes to perform the simplest indexing procedure:
  - IndexWriter: creates a new index and adds documents to an existing index
  - Directory: represents the location of a Lucene index (subclasses: FSDirectory and RAMDirectory)
  - Analyzer: extracts tokens out of text to be indexed and eliminates the rest
  - Document: a collection of fields
  - Field: each field corresponds to a piece of data that is either queried against or retrieved from the index during search

- 6. An example of Lucene Index fields

- 7. Core Searching classes
  - IndexSearcher
  - Term: the basic unit for searching, consisting of the name of a field and the value of that field
  - Query: subclasses include TermQuery, BooleanQuery, PhraseQuery, PrefixQuery, PhrasePrefixQuery, RangeQuery, FilteredQuery, and SpanQuery
  - TermQuery: the most primitive query type
  - Hits: a simple container of pointers to ranked search results

- 8. Types of Queries
  - TermQuery: especially useful for retrieving documents by a key. QueryParser returns a TermQuery if the expression consists of a single word.
  - PrefixQuery: matches documents containing terms beginning with a specified string. QueryParser creates a PrefixQuery for a term that ends with an asterisk (*) in a query expression.
  - RangeQuery: facilitates searches from a starting term through an ending term. The third constructor argument states whether the range is inclusive: RangeQuery query = new RangeQuery(begin, end, true);
  - BooleanQuery: the various query types discussed here can be combined in complex ways using BooleanQuery, which is itself a container of Boolean clauses. A clause is a subquery that can be optional, required, or prohibited. These attributes allow for logical AND, OR, and NOT combinations. You add a clause to a BooleanQuery using this API method: public void add(Query query, boolean required, boolean prohibited)
  - PhraseQuery: an index contains positional information of terms. PhraseQuery uses this information to locate documents where terms are within a certain distance of one another.
  - FuzzyQuery: matches terms similar to a specified term.

- 13. Boosting Documents and Fields

  By default, all Documents have no boost, or rather, they all have the same boost factor of 1.0. By changing a Document’s boost factor, you can instruct Lucene to consider it more or less important with respect to other Documents in the index. The API for doing this consists of a single method, setBoost(float), which can be used as follows (note the f suffix, since the parameter is a float):

      doc.setBoost(1.5f);
      writer.addDocument(doc);

  When you boost a Document, Lucene internally uses the same boost factor to boost each of its Fields. To boost a single Field:

      subjectField.setBoost(1.2f);

  The boost factor values you should use depend on what you’re trying to achieve; you may need to do a bit of experimentation and tuning to achieve the desired effect. It’s worth noting that shorter Fields have an implicit boost associated with them, due to the way Lucene’s scoring algorithm works. Boosting is, in general, an advanced feature that many applications can work very well without.

  Document and Field boosting come into play at search time. Lucene’s search results are ranked according to how closely each Document matches the query, and each matching Document is assigned a score. Lucene’s scoring formula consists of a number of factors, and the boost factor is one of them.

- 14. Relevancy scoring mechanism

  The formula used by Lucene to calculate the rank of a document.

  Source: http://infotrieve.com/products_services/databases/LSRC_CST.pdf
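The formula image from this slide did not survive extraction. For orientation, here is the scoring formula as it is documented for Lucene's Similarity class in releases of roughly this era. The notation may differ from the slide's source, and the default definitions listed after it are those of DefaultSimilarity, not necessarily what the slide showed.

```latex
\[
\mathrm{score}(q,d) \;=\; \mathrm{coord}(q,d)\,\cdot\,\mathrm{queryNorm}(q)\,\cdot
\sum_{t \in q} \Big( \mathrm{tf}(t \in d)\,\cdot\,\mathrm{idf}(t)^{2}\,\cdot\,\mathrm{boost}(t)\,\cdot\,\mathrm{norm}(t,d) \Big)
\]

% Default definitions (DefaultSimilarity):
\[
\mathrm{tf}(t \in d) = \sqrt{\mathrm{freq}(t,d)}, \qquad
\mathrm{idf}(t) = 1 + \ln\!\frac{\mathrm{numDocs}}{\mathrm{docFreq}(t) + 1}, \qquad
\mathrm{lengthNorm} = \frac{1}{\sqrt{\mathrm{numTerms}}}
\]
```

Here coord(q,d) rewards documents that match more of the query's terms, boost(t) is the query-time boost of term t, and norm(t,d) combines the index-time Document boost, the Field boost, and the length normalization of the field. The last factor is why shorter fields receive an implicit boost.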

- 16. How TheServerSide.com used boost to solve its problem

  The list of the fields to which boost was added, with an explanation as to why. Quoted directly from TheServerSide.com:

  “The date boost has been really important for us. We have data that goes back for a long time, and seemed to be returning ‘old reports’ too often. The date-based booster trick has gotten around this, allowing for the newest content to bubble up. The end result is that we now have a nice simple design which allows us to add new sources to our index with minimal development time!”

  Source: http://www.theserverside.com/tt/articles/article.tss?l=ILoveLucene

- 17. Scoring Algorithm

- 19. Scoring Algorithm

  Now that the Hits object has been initialized, it begins the process of identifying documents that match the query by calling the getMoreDocs method. Assuming we are not sorting (since sorting doesn't affect the raw Lucene score), we call on the "expert" search method of the Searcher, passing in our Weight object, Filter and the number of results we want. This method returns a TopDocs object, which is an internal collection of search results. The Searcher creates a TopDocCollector and passes it along with the Weight and Filter to another expert search method (for more on the HitCollector mechanism, see Searcher). The TopDocCollector uses a PriorityQueue to collect the top results for the search.

  If a Filter is being used, some initial setup is done to determine which docs to include. Otherwise, we ask the Weight for a Scorer for the IndexReader of the current searcher and we proceed by calling the score method on the Scorer. At last, we are actually going to score some documents. The score method takes in the HitCollector (most likely the TopDocCollector) and does its business.

  Of course, here is where things get involved. The Scorer that is returned by the Weight object depends on what type of Query was submitted. In most real-world applications with multiple query terms, the Scorer is going to be a BooleanScorer2. Assuming a BooleanScorer2 scorer, we first initialize the Coordinator, which is used to apply the coord() factor. We then get an internal Scorer based on the required, optional and prohibited parts of the query. Using this internal Scorer, the BooleanScorer2 then proceeds into a while loop based on the Scorer#next() method. The next() method advances to the next document matching the query. This is an abstract method in the Scorer class and is thus overridden by all derived implementations.

  If you have a simple OR query, your internal Scorer is most likely a DisjunctionSumScorer, which essentially combines the scorers from the sub-scorers of the OR'd terms.
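The idea of collecting only the top results with a priority queue can be sketched without Lucene. This is not Lucene's TopDocCollector; `TopNCollector`, `ScoredDoc`, and the tie-break by document number are hypothetical names and choices made for illustration, using `java.util.PriorityQueue` as a min-heap.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.PriorityQueue;

class TopNCollector {

    // A (document number, score) pair; hypothetical stand-in for a scored hit.
    public static final class ScoredDoc {
        public final int doc;
        public final float score;

        public ScoredDoc(int doc, float score) {
            this.doc = doc;
            this.score = score;
        }
    }

    private final int maxHits;
    // Min-heap ordered by score: the weakest hit kept so far sits at the head.
    private final PriorityQueue<ScoredDoc> queue;

    public TopNCollector(int maxHits) {
        if (maxHits < 1) {
            throw new IllegalArgumentException("maxHits must be at least 1");
        }
        this.maxHits = maxHits;
        this.queue = new PriorityQueue<>(maxHits, (a, b) -> Float.compare(a.score, b.score));
    }

    // Called once per matching document, in any order.
    public void collect(int doc, float score) {
        if (queue.size() < maxHits) {
            queue.add(new ScoredDoc(doc, score));
        } else if (score > queue.peek().score) {
            queue.poll();                         // evict the current weakest hit
            queue.add(new ScoredDoc(doc, score));
        }
    }

    // Returns the kept hits, best score first; ties are ordered by document number.
    public List<ScoredDoc> topDocs() {
        List<ScoredDoc> result = new ArrayList<>(queue);
        result.sort((a, b) -> {
            int byScore = Float.compare(b.score, a.score);
            return byScore != 0 ? byScore : Integer.compare(a.doc, b.doc);
        });
        return result;
    }
}
```

Because the queue never holds more than maxHits entries, memory stays bounded by the number of results requested rather than by the number of matching documents, which is the point of collecting hits this way.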

- 20. Sorting search results

  Sorting comes at the expense of resources. More memory is needed to keep the fields used for sorting available. For numeric types, each field being sorted for each document in the index requires that four bytes be cached. For String types, each unique term is also cached for each document. Only the actual fields used for sorting are cached in this manner. We need to plan our system resources accordingly if we want to use the sorting capabilities, knowing that sorting by a String is the most expensive type in terms of resources.
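The four-bytes-per-value figure for numeric sort fields lends itself to a back-of-the-envelope estimate. The sketch below only multiplies the numbers stated above; the class and method names are hypothetical, and it deliberately ignores String fields, whose cost depends on the number and length of unique terms.

```java
class SortCacheEstimate {

    // Per the text above: four bytes cached per document per numeric sort field.
    static final long BYTES_PER_NUMERIC_VALUE = 4L;

    // Rough lower bound on the cache size for numeric sort fields, in bytes.
    // Throws ArithmeticException if the result would overflow a long.
    public static long numericSortCacheBytes(long numDocs, int numericSortFields) {
        if (numDocs < 0 || numericSortFields < 0) {
            throw new IllegalArgumentException("counts must be non-negative");
        }
        long values = Math.multiplyExact(numDocs, (long) numericSortFields);
        return Math.multiplyExact(values, BYTES_PER_NUMERIC_VALUE);
    }

    public static void main(String[] args) {
        // Example: 10 million documents sorted on 2 numeric fields.
        long bytes = numericSortCacheBytes(10_000_000L, 2);
        System.out.println(bytes + " bytes");
    }
}
```

For 10 million documents and two numeric sort fields this gives 80,000,000 bytes, before any per-object or JVM overhead.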

- 23. Handling of various types of queries by the QueryParser

- 25. Security

  A security filter is a powerful example, allowing users to see only the search results for documents they own, even if their query technically matches other documents that are off limits. Our example assumes documents are associated with an owner, which is known at indexing time. We index two documents; both have the term info in their keywords field, but each document has a different owner:

      import junit.framework.TestCase;
      import org.apache.lucene.analysis.WhitespaceAnalyzer;
      import org.apache.lucene.document.Document;
      import org.apache.lucene.document.Field;
      import org.apache.lucene.index.IndexWriter;
      import org.apache.lucene.index.Term;
      import org.apache.lucene.search.Hits;
      import org.apache.lucene.search.IndexSearcher;
      import org.apache.lucene.search.QueryFilter;
      import org.apache.lucene.search.TermQuery;
      import org.apache.lucene.store.RAMDirectory;

      public class SecurityFilterTest extends TestCase {
        private RAMDirectory directory;

        // JUnit runs setUp() before each test, so the in-memory index is built here.
        protected void setUp() throws Exception {
          directory = new RAMDirectory();
          IndexWriter writer = new IndexWriter(directory, new WhitespaceAnalyzer(), true);

          // Elwood's document
          Document document = new Document();
          document.add(Field.Keyword("owner", "elwood"));
          document.add(Field.Text("keywords", "elwoods sensitive info"));
          writer.addDocument(document);

          // Jake's document
          document = new Document();
          document.add(Field.Keyword("owner", "jake"));
          document.add(Field.Text("keywords", "jakes sensitive info"));
          writer.addDocument(document);

          writer.close();
        }
      }

  Source: Lucene in Action, p. 211

- 26. Security

  Suppose, though, that Jake is using the search feature in our application, and only documents he owns should be searchable by him. We can use a QueryFilter to constrain the search space to only the documents he owns, as shown in listing 5.7 of Lucene in Action:

      // Test method of SecurityFilterTest; it relies on the index built in setUp().
      public void testSecurityFilter() throws Exception {
        TermQuery query = new TermQuery(new Term("keywords", "info"));
        IndexSearcher searcher = new IndexSearcher(directory);

        // Without a filter, both documents match.
        Hits hits = searcher.search(query);
        assertEquals("Both documents match", 2, hits.length());

        // With the owner filter, only Jake's document is returned.
        QueryFilter jakeFilter = new QueryFilter(
            new TermQuery(new Term("owner", "jake")));
        hits = searcher.search(query, jakeFilter);
        assertEquals(1, hits.length());
        assertEquals("elwood is safe", "jakes sensitive info",
            hits.doc(0).get("keywords"));
      }

  To use this approach, our index needs a field called owner.

- 27. Security

  You can constrain a query to a subset of documents another way, by combining the constraining query with the original query as a required clause of a BooleanQuery. There are a couple of important differences, despite the fact that the same documents are returned from both. QueryFilter caches the set of documents allowed, probably speeding up successive searches using the same instance. In addition, normalized Hits scores are unlikely to be the same. The score difference makes sense when you’re looking at the scoring formula (see section 3.3, page 78 of Lucene in Action). The IDF factor may be dramatically different. When you’re using BooleanQuery aggregation, all documents containing the terms are factored into the equation, whereas a filter reduces the documents under consideration and impacts the inverse document frequency factor.

- 28. Composition of Segments in Lucene Index

  Each segment index maintains the following:
  - Field names. This contains the set of field names used in the index.
  - Stored Field values. This contains, for each document, a list of attribute-value pairs, where the attributes are field names. These are used to store auxiliary information about the document, such as its title, URL, or an identifier to access a database. The set of stored fields is what is returned for each hit when searching. This is keyed by document number.
  - Term dictionary. A dictionary containing all of the terms used in all of the indexed fields of all of the documents. The dictionary also contains the number of documents which contain the term, and pointers to the term's frequency and proximity data.
  - Term Frequency data. For each term in the dictionary, the numbers of all the documents that contain that term, and the frequency of the term in each of those documents.
  - Term Proximity data. For each term in the dictionary, the positions at which the term occurs in each document.
  - Normalization factors. For each field in each document, a value is stored that is multiplied into the score for hits on that field.
  - Term Vectors. For each field in each document, the term vector (sometimes called document vector) may be stored. A term vector consists of term text and term frequency. To add Term Vectors to your index, see the Field constructors.
  - Deleted documents. An optional file indicating which documents are deleted.

- 29. Debugging Lucene indexing process

  We can get Lucene to output information about its indexing operations by setting IndexWriter’s public instance variable infoStream to a PrintStream, such as System.out:

      IndexWriter writer = new IndexWriter(dir, new SimpleAnalyzer(), true);
      writer.infoStream = System.out;

- 30. Lucene in Alfresco

  There are three possible approaches we can follow:
  1) Let Alfresco do the indexing, use its implementation of the search, and load the search results it returns into our page.
  2) Let Alfresco do the indexing, and directly access its indexes to get query results.
  3) Let Alfresco only do the content management, and take care of both the indexing and the searching ourselves.

- 35. Why do we sometimes have redundant data in Index and Database

  The database can redundantly keep some of the information that can be found in the Lucene index, for two specific reasons:
  - Failure recovery: if the index somehow becomes corrupted (for example, through disk failure), it can easily and quickly be rebuilt from the data stored in the database without any information loss. This benefit is reinforced by the fact that the database can reside on a different machine.
  - Access speed: each document is marked with a unique identifier, which is the primary key of the document in the database. So, when the application needs to access a certain document by a given identifier, the database can return it more efficiently than Lucene could. If we used Lucene here, it would have to search its whole index for the document with the identifier stored in one of the document’s fields.

- 36. A possible approach to improve hit relevancy in Alfresco

  If we are unable to get access to Alfresco’s indexing and scoring process, then we could possibly add boosts to the query itself. It is not yet confirmed whether this will work at all and, if it does, whether it will be fast enough.

      Title:Lucene^4 OR Keywords:Lucene^3 OR Contents:Lucene^1
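A query string of this shape can be assembled programmatically. The sketch below is an assumption-laden illustration, not Alfresco or Lucene API: `BoostedQueryBuilder` is a hypothetical helper, it emits the field:term^boost form of Lucene's query parser syntax, and it only accepts a single alphanumeric term so that no escaping of query-syntax characters is needed.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

class BoostedQueryBuilder {

    // Builds "field:term^boost OR field:term^boost ..." in the map's iteration order.
    // Assumption of this sketch: the term is one alphanumeric word, so no
    // escaping of query-syntax characters is required.
    public static String build(String term, Map<String, Integer> fieldBoosts) {
        if (term == null || !term.matches("[A-Za-z0-9]+")) {
            throw new IllegalArgumentException("term must be a single alphanumeric word");
        }
        StringJoiner query = new StringJoiner(" OR ");
        for (Map.Entry<String, Integer> entry : fieldBoosts.entrySet()) {
            query.add(entry.getKey() + ":" + term + "^" + entry.getValue());
        }
        return query.toString();
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps the fields in insertion order.
        Map<String, Integer> boosts = new LinkedHashMap<>();
        boosts.put("Title", 4);
        boosts.put("Keywords", 3);
        boosts.put("Contents", 1);
        System.out.println(build("Lucene", boosts));
    }
}
```

Keeping the boosts in a map makes it easy to tune the relative weights of the fields during the experimentation that boosting usually requires.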

- 37. Caching mechanism

  Lucene comes with a simple internal caching mechanism if you use Lucene Filters. The classes to look at are CachingWrapperFilter and QueryFilter. For example, let's say we wanted to let users search just the last 30 days' worth of content. We could run the filter once and then cache it with the Term clause used to run the query. Then we could use the same filter again for every user until we have to optimize() the index again. As long as the document numbers stay the same, we don't have much more to do. But this will probably not be of much use to us, since we will need to optimize the index often.

- 38. Caching mechanism

  Components in the diagram: logs, list of top keywords, Lucene index, top keyword results cache, Searcher (query), results UI.
  - The top searched keywords are obtained from the logs.
  - Top keywords are searched for in the index and cached beforehand.
  - The Searcher checks if the query matches the top keywords.
  - If the query term matches one of the cached keywords, the results are fetched from the cache.
  - If the query term doesn’t match one of the cached keywords, the search is run against the index.
  - Cache expiring and refreshing mechanism (including regular updating of the top keywords list).
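The flow on this slide can be sketched as a small cache in front of the index search. Everything here is hypothetical: `TopKeywordCache`, its method names, the case-insensitive key normalization, and the time-to-live policy are assumptions for illustration. The index search itself is passed in as a function so the sketch stays independent of Lucene, and the current time is passed explicitly to keep the behaviour easy to test.

```java
import java.util.Collection;
import java.util.HashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.function.Function;

class TopKeywordCache {

    // Cached results plus the time they were loaded.
    private static final class Entry {
        final List<String> results;
        final long loadedAtMillis;

        Entry(List<String> results, long loadedAtMillis) {
            this.results = results;
            this.loadedAtMillis = loadedAtMillis;
        }
    }

    private final Map<String, Entry> cache = new HashMap<>();
    private final Function<String, List<String>> indexSearch; // stand-in for the real index search
    private final long ttlMillis;

    public TopKeywordCache(Function<String, List<String>> indexSearch, long ttlMillis) {
        this.indexSearch = indexSearch;
        this.ttlMillis = ttlMillis;
    }

    // Replaces the top keywords list and pre-runs the searches for it.
    public void refresh(Collection<String> topKeywords, long nowMillis) {
        cache.clear();
        for (String keyword : topKeywords) {
            String key = normalize(keyword);
            cache.put(key, new Entry(indexSearch.apply(key), nowMillis));
        }
    }

    // Serves top keywords from the cache; everything else goes to the index.
    public List<String> search(String query, long nowMillis) {
        String key = normalize(query);
        Entry entry = cache.get(key);
        if (entry == null) {
            return indexSearch.apply(query);      // not a top keyword
        }
        if (nowMillis - entry.loadedAtMillis >= ttlMillis) {
            // Expired entry: search again and re-cache the fresh results.
            entry = new Entry(indexSearch.apply(key), nowMillis);
            cache.put(key, entry);
        }
        return entry.results;
    }

    private static String normalize(String text) {
        return text.trim().toLowerCase(Locale.ROOT);
    }
}
```

This sketch is not thread-safe; a real deployment would need synchronization or a concurrent map, and would call refresh periodically as the top keywords list is recomputed from the logs.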

- 39. Experience of Lucene implementation @ webshots.com

  Question: “I gave parallelMultiSearcher a try and it was significantly slower than simply iterating through the indexes one at a time. Our new plan is to somehow have only one index per search machine and a larger main index stored on the master. What I'm interested to know is whether having one extremely large index for the master then splitting the index into several smaller indexes (if this is possible) would be better than having several smaller indexes and merging them on the search machines into one index. I would also be interested to know how others have divided up search work across a cluster.”

  Answer: “I'm responsible for the webshots.com search index and we've had very good results with lucene. It currently indexes over 100 Million documents and performs 4 Million searches / day. We initially tested running multiple small copies and using a MultiSearcher and then merging results as compared to running a very large single index. We actually found that the single large instance performed better. To improve load handling we clustered multiple identical copies together, then session bind a user to particular server and cache the results, but each server is running a single index. Our index is currently about 40Gb. The advantage of binding a user is that once a search is performed then caching within lucene and in the application is very effective if subsequent searches go back to the same box. Our initial searches are usually in the sub 100milliS range while subsequent requests for deeper pages in the search are returned instantly.”

- 41. Good Articles on Lucene
  - http://www.theserverside.com/tt/articles/article.tss?l=ILoveLucene
  - http://www.javaworld.com/javaworld/jw-09-2000/jw-0915-lucene.html?page=1
  - http://technology.amis.nl/blog/?p=1288
  - http://powerbuilder.sys-con.com/read/42488.htm
  - http://www-128.ibm.com/developerworks/library/wa-lucene2/
  - Spell checking: http://today.java.net/pub/a/today/2005/08/09/didyoumean.html
  - Lucene integration with Hibernate: http://www.hibernate.org/hib_docs/search/reference/en/html_single/
  - Lucene with Spring (it talks about Spring Modules): http://technology.amis.nl/blog/?p=1248