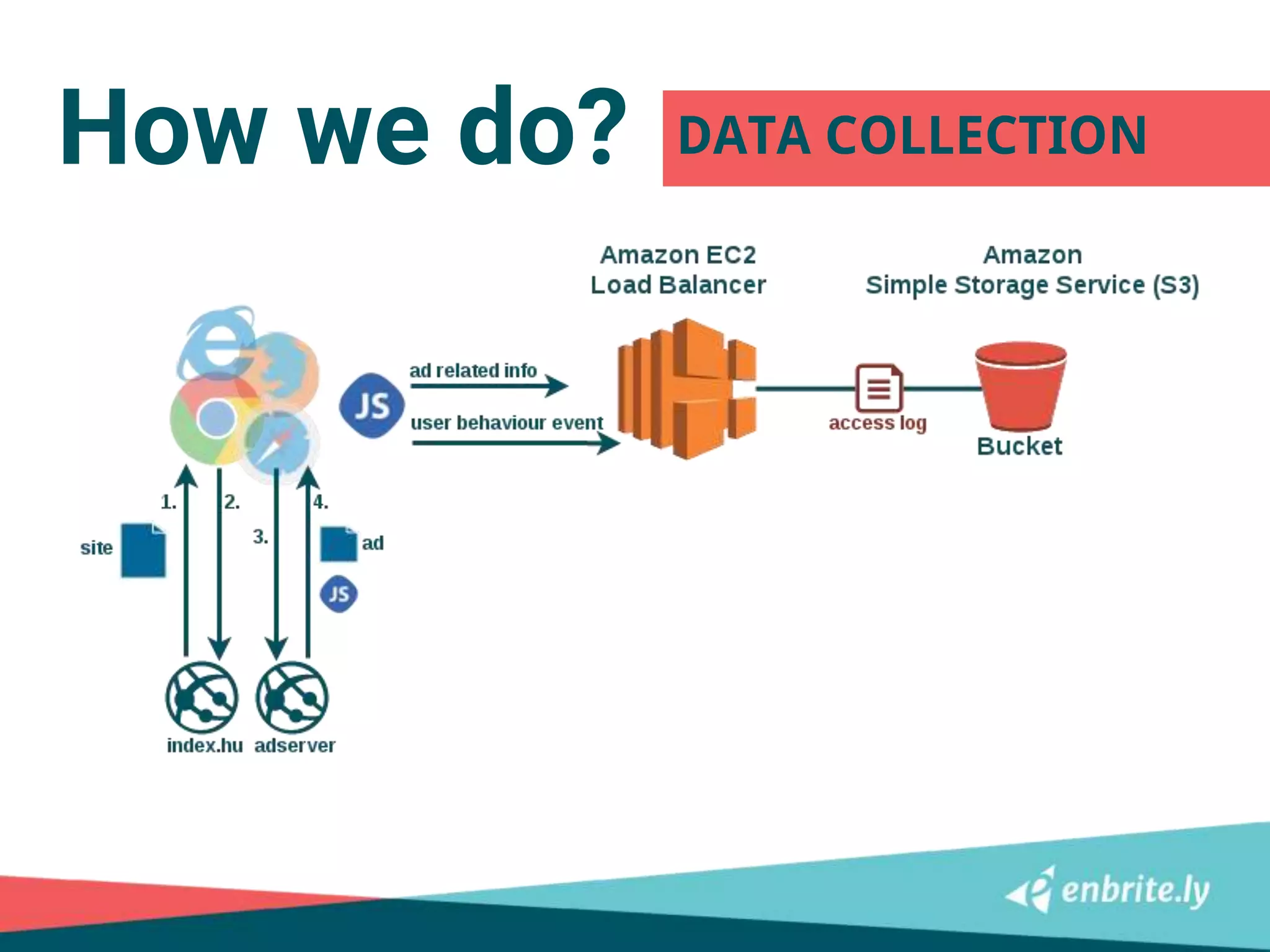

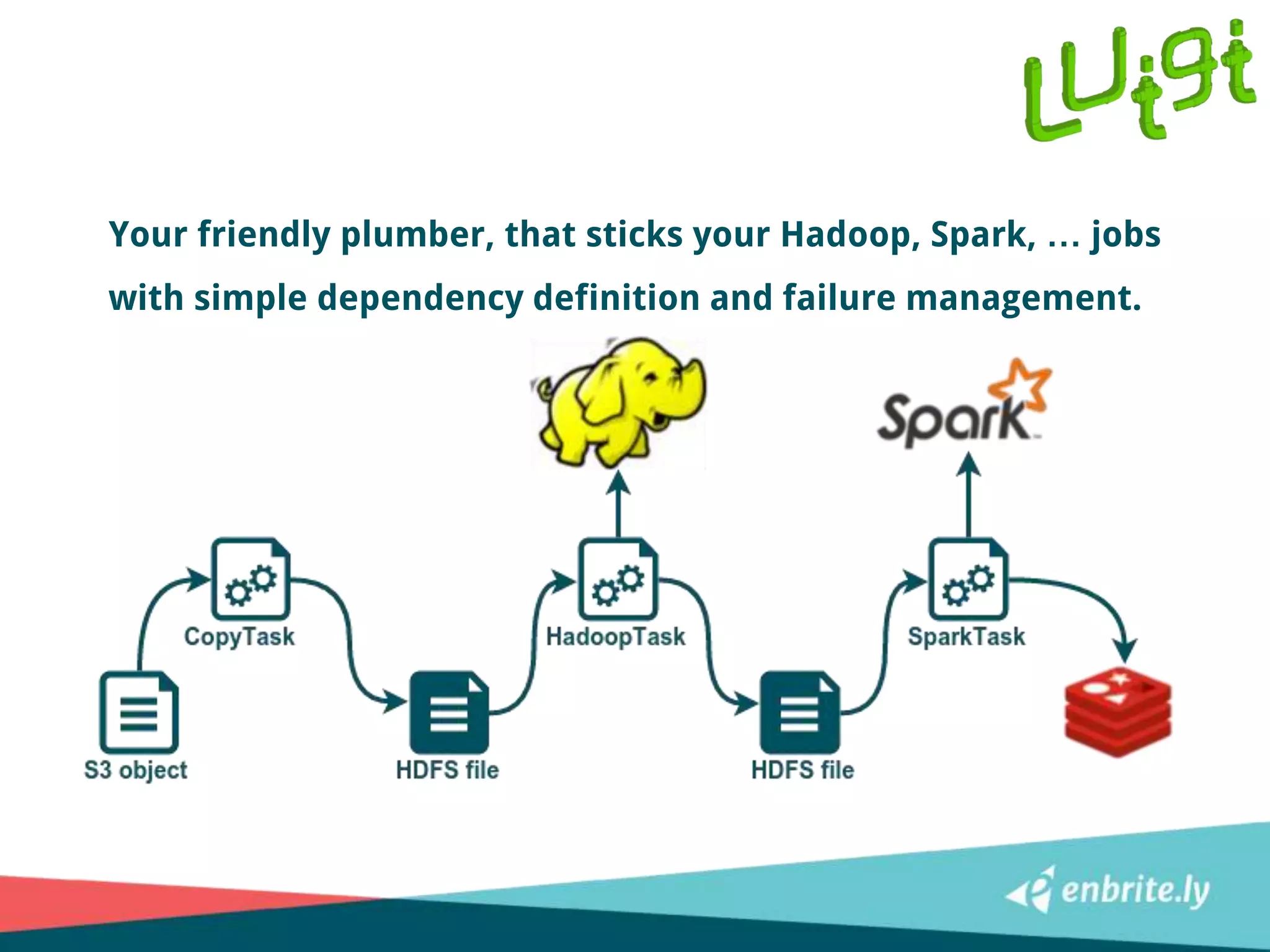

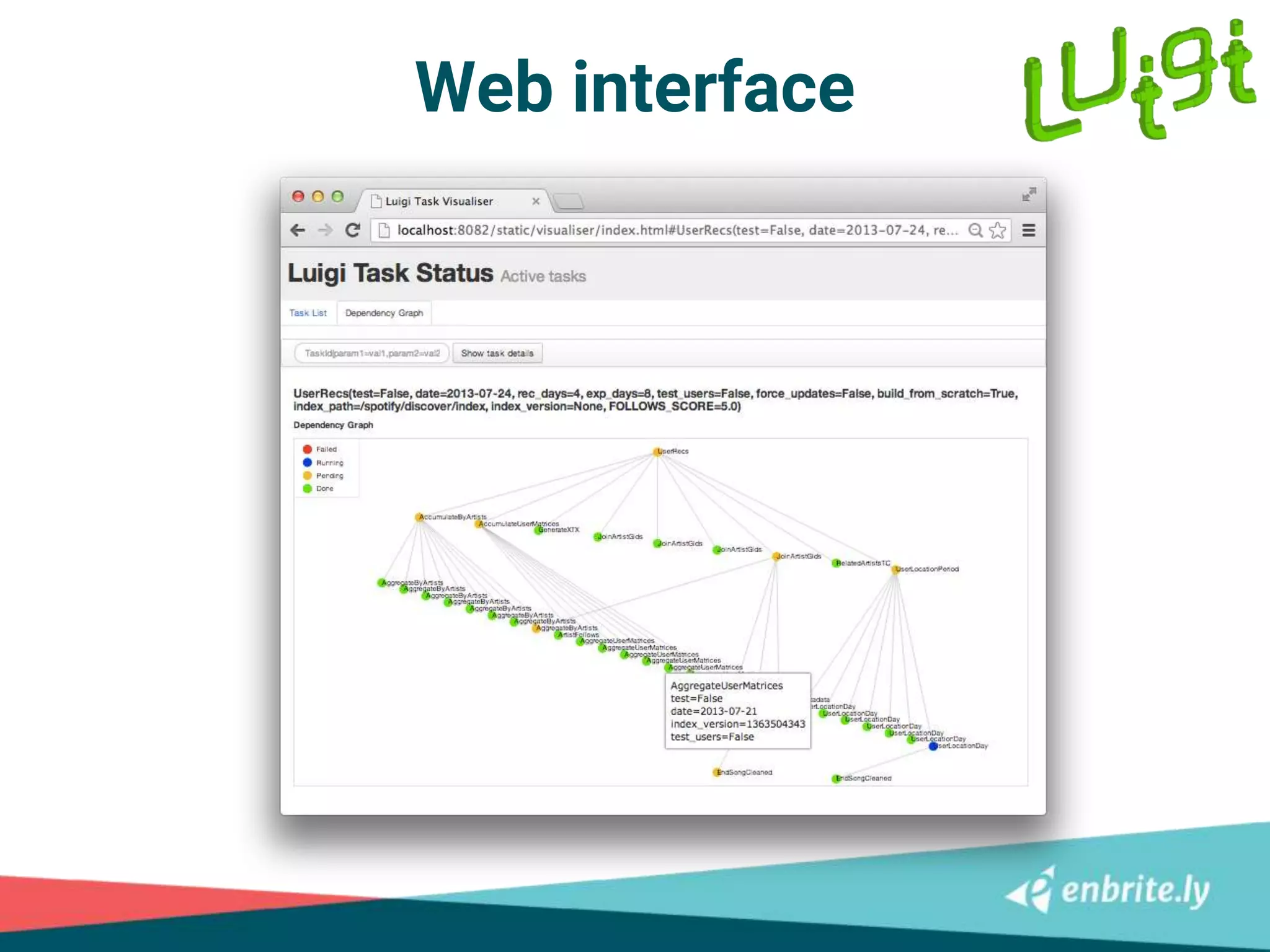

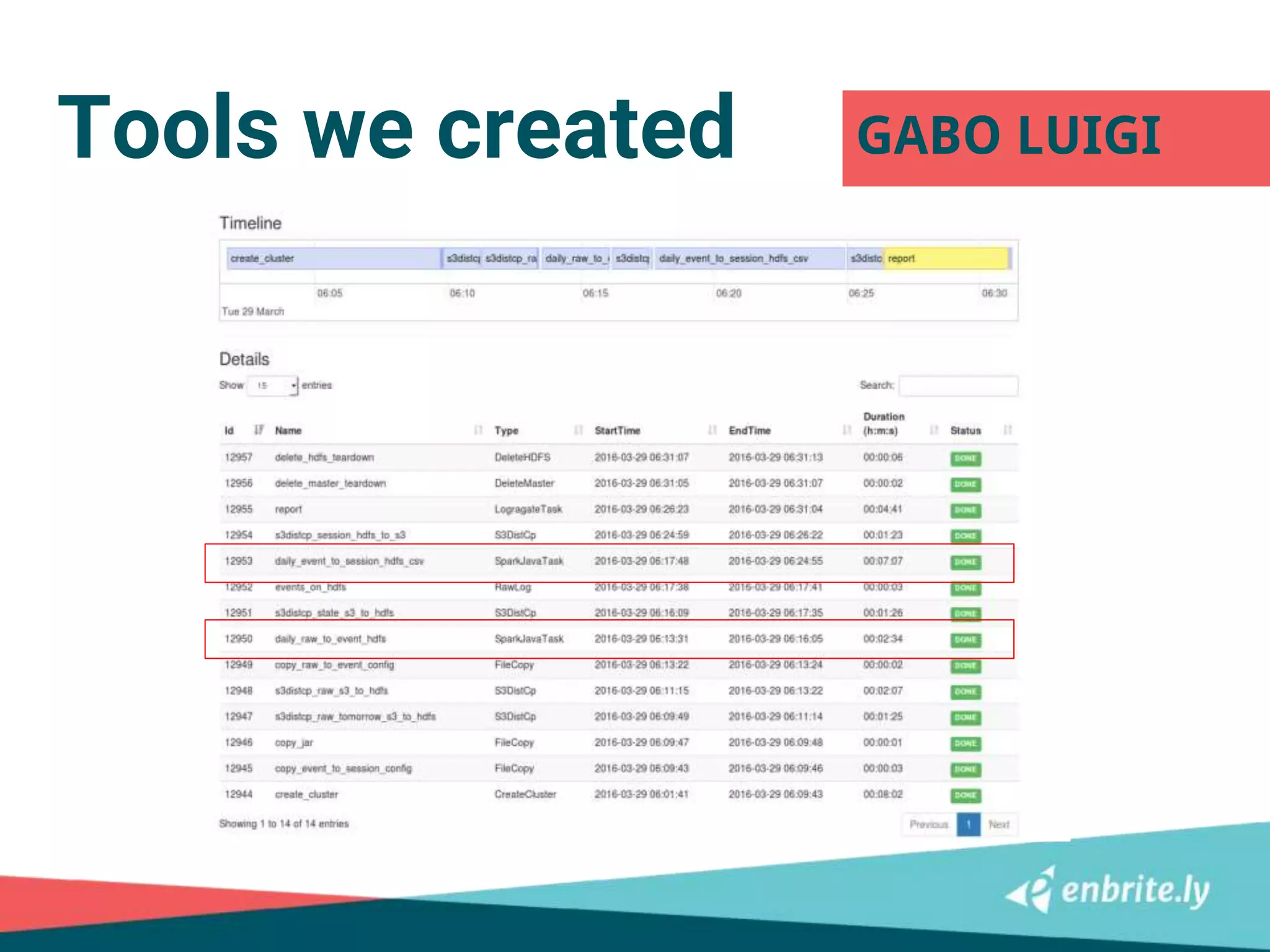

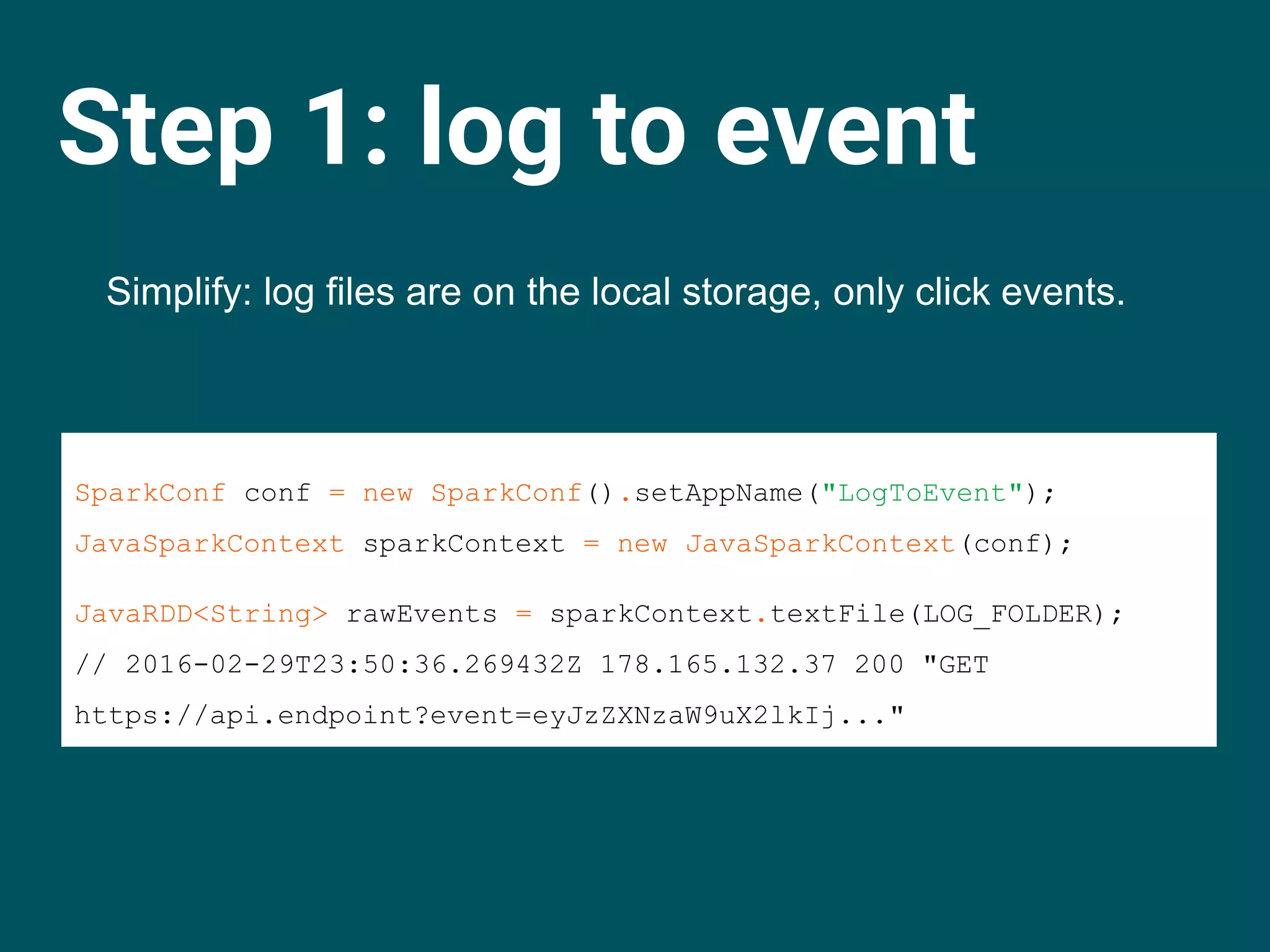

The document presents a talk by Joe Mészáros on revolutionizing online advertisement metrics with Enbrite.ly's antifraud, brand safety, and viewability products. It covers how ad-event data is collected and processed with Apache Spark on Amazon EMR, and how the results are applied to detect bot traffic in advertisements. The speaker emphasizes optimizing configurations, minimizing technical debt, and building robust monitoring into distributed applications.

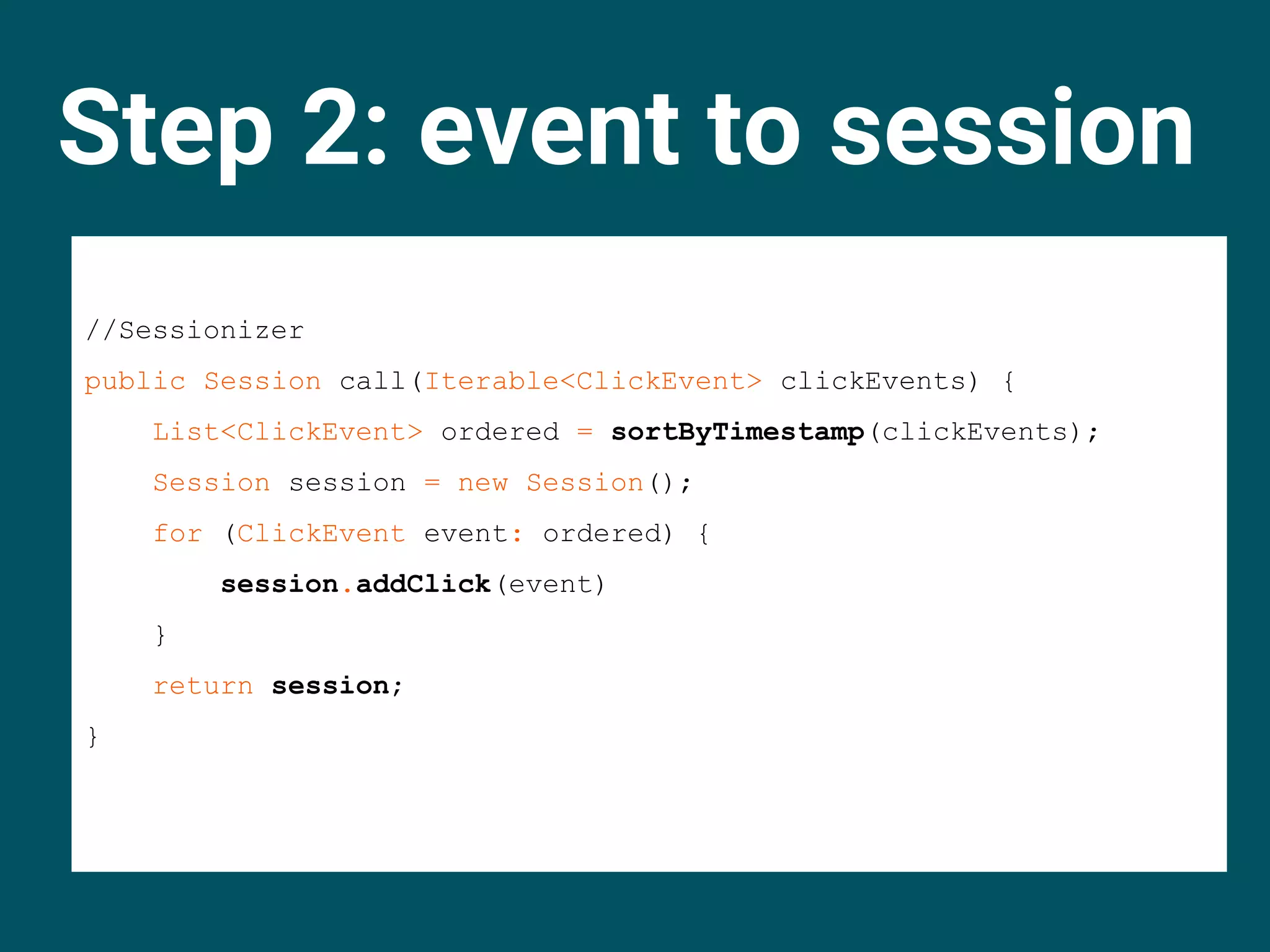

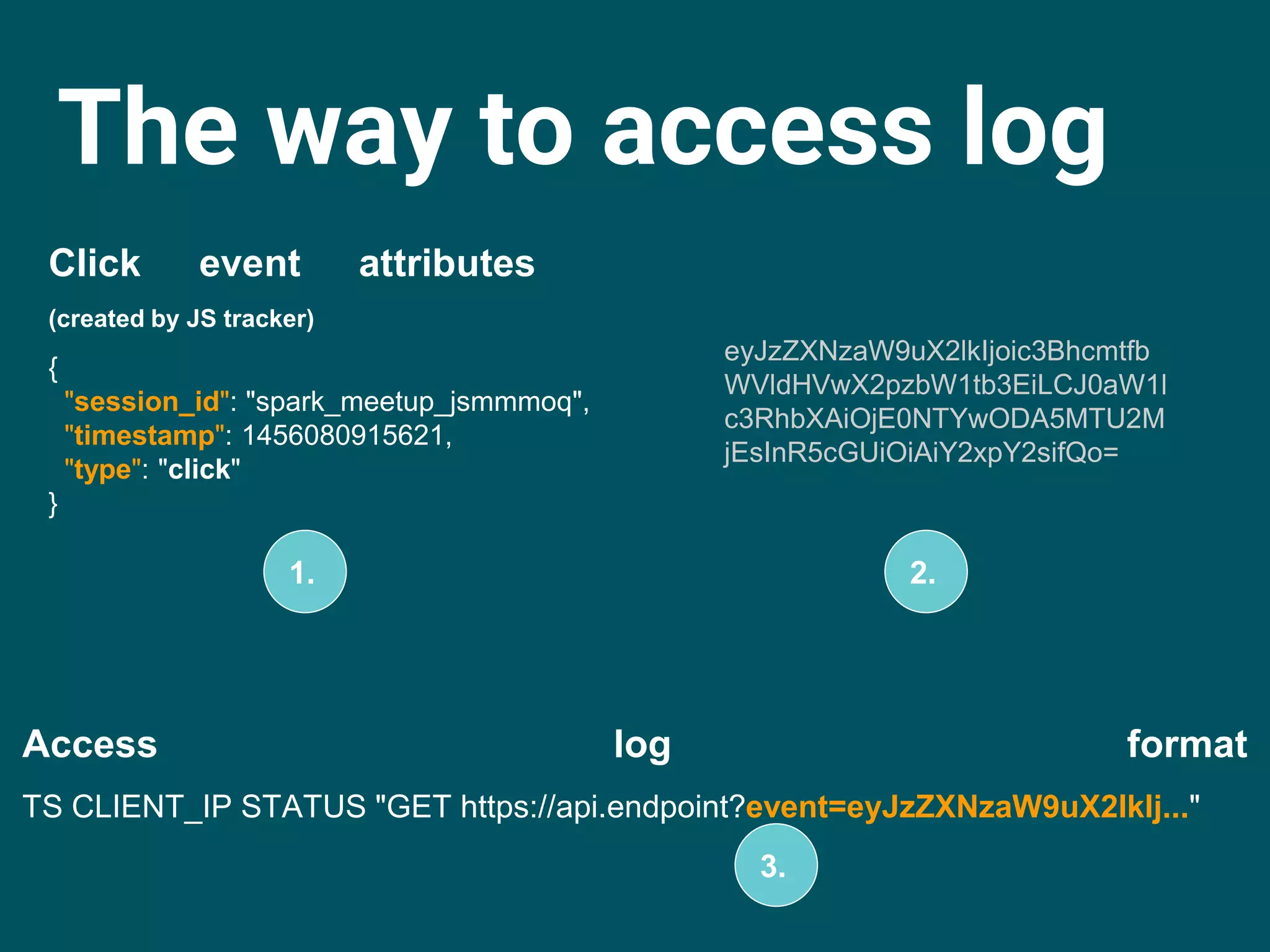

![Step 1: log to event](https://image.slidesharecdn.com/apachesparkenbrite-160331144742/75/Budapest-Spark-Meetup-Apache-Spark-enbrite-ly-25-2048.jpg)

Step 1: log to event

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

import org.apache.spark.api.java.JavaRDD;

// rawEvents: JavaRDD<String> holding the raw log lines.
// Take the 4th whitespace-separated field of each line (the request URL).
JavaRDD<String> rawUrls = rawEvents.map(l -> l.split("\\s+")[3]);
// GET https://api.endpoint?event=eyJzZXNzaW9uX2lkIj...

// Extract the "event" query parameter
// (parseUrl is a helper from the talk; its code is not shown on the slide).
JavaRDD<String> eventParameter = rawUrls
        .map(u -> parseUrl(u).get("event"));
// eyJzZXNzaW9uX2lkIj...

// Decode the Base64 payload into the JSON event string.
JavaRDD<String> base64Decoded = eventParameter
        .map(e -> new String(Base64.getDecoder().decode(e), StandardCharsets.UTF_8));
// {"session_id": "spark_meetup_jsmmmoq",
//  "timestamp": 1456080915621, "type": "click"}

// Persist the events as gzipped JSON
// (IoUtil is a helper from the talk; its code is not shown on the slide).
IoUtil.saveAsJsonGzipped(base64Decoded);
```
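The slide does not show how `parseUrl` works. Below is a minimal, Spark-free sketch of the same three steps applied to a single log line, useful for unit-testing the per-line logic. It assumes the URL is the fourth whitespace-separated field (as `split(...)[3]` implies) and that the `event` value is percent-encoded standard Base64. The class `LogToEvent`, this `parseUrl` body, and `logLineToEventJson` are hypothetical names for illustration, not the speaker's code.

```java
import java.net.URI;
import java.net.URLDecoder;
import java.nio.charset.StandardCharsets;
import java.util.Base64;
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper class; not taken from the talk.
final class LogToEvent {

    // Hypothetical stand-in for the talk's parseUrl helper:
    // splits the raw query string into a key -> value map,
    // percent-decoding each key and value.
    static Map<String, String> parseUrl(String url) {
        Map<String, String> params = new HashMap<>();
        String query = URI.create(url).getRawQuery();
        if (query == null) {
            return params; // URL has no query string
        }
        for (String pair : query.split("&")) {
            int eq = pair.indexOf('=');
            String key = eq >= 0 ? pair.substring(0, eq) : pair;
            String value = eq >= 0 ? pair.substring(eq + 1) : "";
            params.put(URLDecoder.decode(key, StandardCharsets.UTF_8),
                       URLDecoder.decode(value, StandardCharsets.UTF_8));
        }
        return params;
    }

    // The slide's three map steps, applied to one log line:
    // field 3 -> "event" parameter -> Base64-decoded JSON string.
    static String logLineToEventJson(String logLine) {
        String rawUrl = logLine.split("\\s+")[3];
        String event = parseUrl(rawUrl).get("event");
        return new String(Base64.getDecoder().decode(event), StandardCharsets.UTF_8);
    }
}
```

Keeping the per-line logic in plain static methods lets it be tested without a `SparkContext`; the Spark job could then call it from a single `map`. Note that `URLDecoder` turns an unescaped `+` into a space, so if the tracker sends raw (not percent-encoded) Base64, the decoding step in `parseUrl` would need to be dropped.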